AI is transforming industries—from healthcare and finance to gaming and content creation. But to truly unlock AI’s potential, you need the right hardware. A powerful GPU accelerates deep learning, speeds up model training, and handles massive datasets with ease. Whether you’re an AI researcher building the next big language model or a hobbyist running Stable Diffusion on your desktop, choosing the right graphics card makes all the difference.

In this guide, we break down the best GPUs for AI in 2025. We’ve ranked them based on performance, memory, features, and price-to-performance ratio. We’ll also discuss why NVIDIA continues to dominate the AI GPU market and how its new Blackwell architecture is shaking up the landscape.

1. Nvidia H100 — The Pinnacle of AI Performance

Why It’s Great:

The Nvidia H100 is one of the most powerful AI GPUs available and the workhorse of today’s AI data centers; only newer NVIDIA parts such as the H200 and the Blackwell-based B200 surpass it. Built for data centers and enterprise AI workloads, it’s engineered for training GPT-4-class models while also accelerating inference at very high speeds.

Key Features:

- Memory: 80GB HBM3 with up to 3.35 TB/s bandwidth (SXM version)

- CUDA Cores: 16,896 (SXM version)

- Tensor Cores: 4th Gen, optimized for FP8/FP16/TF32

- AI Performance: Up to 4x faster training performance than the A100 (NVIDIA's figure for GPT-3 175B)

- Architecture: Hopper, with NVLink and PCIe Gen 5 support

- Power Requirements: Up to 700W TDP (SXM); the PCIe version is rated at 350W

In-depth Review:

If you’re a company training large language models (LLMs) or computer vision systems, or running inference at scale, the H100 is your go-to. It supports the FP8 precision format and delivers about 67 TFLOPS of FP64 Tensor Core compute (SXM version). Its cost ($30,000+) and power demands make it impractical for home users, but it’s the backbone of many AI supercomputers and cloud services.

2. Nvidia RTX 5090 — The New Prosumer King for AI

Why It’s Great:

The RTX 5090 is Nvidia’s newest flagship GPU for enthusiasts and professionals who need serious AI power on a desktop. Built on the next-gen Blackwell architecture, it blows past the 4090 and even encroaches on workstation territory.

Key Features:

- Memory: 32GB GDDR7 with 1,792 GB/s bandwidth

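- CUDA Cores: 21,760

- Tensor Cores: 5th Gen with FP4 and FP8 acceleration

- AI Performance: 3,352 AI TOPS per NVIDIA, roughly 2.5x the RTX 4090’s rating, though that compares the 5090’s FP4 throughput with the 4090’s FP8; gains at equal precision are much smaller

- Architecture: Blackwell (GB202 GPU)

- Power Consumption: 575W TGP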

In-depth Review:

With nearly 1.8x the memory bandwidth of the RTX 4090 and next-gen Tensor Cores, the RTX 5090 is ideal for training and running large AI models on a desktop workstation. Its 32GB of VRAM allows for working with larger datasets and models like LLaMA 2 and Stable Diffusion XL at higher resolutions. The 5090 has quickly become a favorite of AI researchers who don’t have access to datacenter-grade hardware.
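Peak memory bandwidth follows directly from bus width and per-pin data rate: bandwidth (GB/s) = bus width (bits) × data rate (Gbps) ÷ 8. The sketch below assumes the published memory configurations: a 512-bit bus at 28 Gbps for the RTX 5090 and a 384-bit bus at 21 Gbps for the RTX 4090. It computes theoretical peaks only, not measured throughput.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    Each pin on the bus moves `data_rate_gbps` gigabits per second;
    dividing by 8 converts gigabits to gigabytes.
    """
    return bus_width_bits * data_rate_gbps / 8


# Assumed memory configurations (published specs, theoretical peaks only).
cards = {
    "RTX 5090 (GDDR7)": (512, 28.0),
    "RTX 4090 (GDDR6X)": (384, 21.0),
}

bw = {name: peak_bandwidth_gbs(*cfg) for name, cfg in cards.items()}
for name, value in bw.items():
    print(f"{name}: {value:,.0f} GB/s")

ratio = bw["RTX 5090 (GDDR7)"] / bw["RTX 4090 (GDDR6X)"]
print(f"5090 / 4090 bandwidth ratio: {ratio:.2f}x")
```

The result, roughly 1.78x, is a ceiling. Real kernels achieve less than peak bandwidth on both cards.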

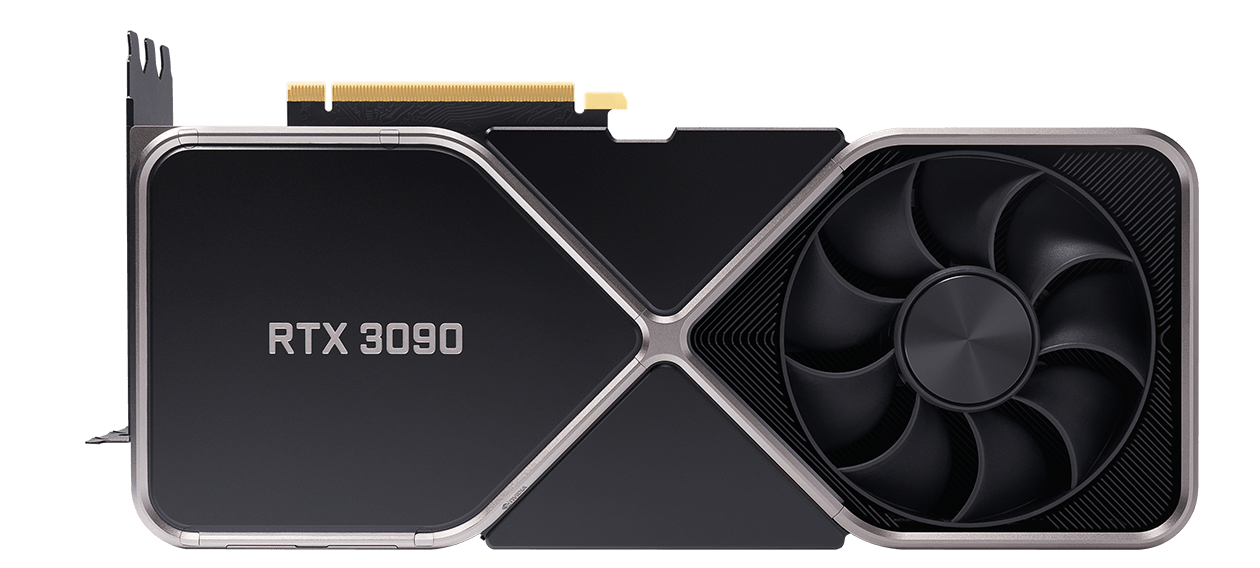

3. Nvidia RTX 4090 — AI Powerhouse for Developers and Creators

Why It’s Great:

The RTX 4090 was a game-changer when it launched, and it still holds its ground as one of the best AI GPUs for developers, researchers, and creators. It strikes a balance between price and performance for users who need serious AI horsepower.

Key Features:

- Memory: 24GB GDDR6X with 1,008 GB/s bandwidth

- CUDA Cores: 16,384

- Tensor Cores: 4th Gen with FP8/FP16 support

- AI Performance: 1,321 AI TOPS per NVIDIA (FP8 with sparsity), enough for large quantized models and high-speed inference

- Architecture: Ada Lovelace

- Power Draw: 450W TDP

In-depth Review:

From fine-tuning LLMs to running complex vision models, the 4090 delivers excellent performance without the sky-high costs of datacenter GPUs. Its 24GB VRAM is sufficient for most AI workloads, including Stable Diffusion, DeepFaceLab, and custom LLMs with quantization. With FP8 support, it runs modern machine learning frameworks efficiently.
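A rough test of whether a model’s weights fit in VRAM is parameters × bits per weight ÷ 8. The sketch below applies it to hypothetical 7B, 13B, and 70B parameter counts at 16-bit and 4-bit precision, compared against the 4090’s 24GB. It ignores the KV cache, activations, and framework overhead. The 20% headroom factor is an arbitrary assumption, so a "fits" result is necessary rather than sufficient.

```python
VRAM_GB = 24      # RTX 4090
HEADROOM = 0.8    # assumption: leave ~20% of VRAM for KV cache, activations, runtime


def weight_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB: parameters x bits per weight / 8 bits per byte."""
    return params_billion * bits_per_weight / 8


def fits(params_billion: float, bits_per_weight: int, vram_gb: float = VRAM_GB) -> bool:
    """True if the weights alone fit within the usable share of VRAM."""
    return weight_gb(params_billion, bits_per_weight) <= vram_gb * HEADROOM


for params in (7, 13, 70):          # hypothetical model sizes, billions of parameters
    for bits in (16, 4):            # FP16 vs. 4-bit quantized weights
        size = weight_gb(params, bits)
        verdict = "fits" if fits(params, bits) else "does not fit"
        print(f"{params}B @ {bits}-bit: {size:5.1f} GB -> {verdict}")
```

Quantizing from 16-bit to 4-bit cuts weight storage by 4x. That is why a 13B model that overflows 24GB at FP16 fits comfortably once quantized.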

4. Nvidia RTX 4080 SUPER — Balanced Performance and Efficiency

Why It’s Great:

The RTX 4080 SUPER is a solid middle-ground card for AI developers who need good performance but are budget-conscious. It’s ideal for running smaller models or using AI-enhanced creative tools.

Key Features:

- Memory: 16GB GDDR6X with 736 GB/s bandwidth

- CUDA Cores: 10,240

- Tensor Cores: 4th Gen with FP8 support

- Architecture: Ada Lovelace

- Power Draw: 320W TDP

In-depth Review:

While the 16GB VRAM limits large-scale model training, the 4080 SUPER is great for AI inference, GPU-accelerated creative tools, and AI video upscaling. It’s a practical choice for AI-powered workflows without the heavy costs or power draw of higher-end models.

5. Nvidia RTX 4070 Ti SUPER — Affordable Entry into AI

Why It’s Great:

For beginners and hobbyists, the RTX 4070 Ti SUPER is a great starting point for AI development. It brings FP8 support and decent VRAM at a relatively affordable price.

Key Features:

- Memory: 16GB GDDR6X with 672 GB/s bandwidth

- CUDA Cores: 8,448

- Tensor Cores: 4th Gen

- Architecture: Ada Lovelace

- Power Draw: 285W TDP

In-depth Review:

While you won’t be training large LLMs on a 4070 Ti SUPER, it’s perfect for running inference tasks, AI art generation (Stable Diffusion), and dabbling in AI model development. At 285W, it also has the lowest power draw on the list, which makes it a good fit for smaller workstations or multi-GPU setups.

6. Nvidia RTX 3090 — Budget-Friendly Deep Learning Workhorse

Why It’s Great:

The RTX 3090 might be a previous-gen card, but it’s still a capable AI workhorse, especially for those on a budget.

Key Features:

- Memory: 24GB GDDR6X with 936 GB/s bandwidth

- CUDA Cores: 10,496

- Tensor Cores: 3rd Gen

- Architecture: Ampere

- Power Draw: 350W TDP

In-depth Review:

You can still find the 3090 at attractive prices on the used market, and its 24GB VRAM makes it suitable for running large AI models. However, it lacks FP8 support and is less power-efficient than newer GPUs. Still, at under $1,000, it’s a solid choice for deep learning beginners and hobbyists.

How to Choose the Best AI GPU for Your Needs

- VRAM Is Critical

  - At least 16GB for training models beyond toy datasets.

  - 24GB+ if you’re training LLMs, GANs, or high-resolution diffusion models.

- Tensor Core Generation

  - FP8 acceleration (Ada Lovelace or Blackwell) dramatically speeds up AI tasks.

  - 3rd-gen Tensor Cores (Ampere) are still usable but less efficient.

- Budget vs. Performance

  - Under $1,000: RTX 3090 or 4070 Ti SUPER

  - $1,500–$2,000: RTX 4090

  - $2,000+: RTX 5090 or H100 if you need enterprise power.

Best GPUs for AI in 2025 — Summary Table

| Rank | GPU | VRAM | Tensor Cores | Architecture | AI Strengths | Price Range |
|---|---|---|---|---|---|---|
| 1 | Nvidia H100 | 80GB HBM3 | 4th Gen | Hopper | Enterprise LLM training, cloud AI | $30,000+ |
| 2 | Nvidia RTX 5090 | 32GB GDDR7 | 5th Gen | Blackwell | Next-gen AI workloads, prosumer king | $1,999+ |
| 3 | Nvidia RTX 4090 | 24GB GDDR6X | 4th Gen | Ada Lovelace | AI training and inference powerhouse | $1,500–$2,000 |
| 4 | Nvidia RTX 4080 SUPER | 16GB GDDR6X | 4th Gen | Ada Lovelace | Efficient AI inference and creative tools | $1,000–$1,200 |
| 5 | Nvidia RTX 4070 Ti SUPER | 16GB GDDR6X | 4th Gen | Ada Lovelace | Entry-level AI, AI-enhanced workflows | $800–$1,000 |
| 6 | Nvidia RTX 3090 | 24GB GDDR6X | 3rd Gen | Ampere | Budget AI workhorse, deep learning | $800–$1,000 (used) |

Key Takeaways

- NVIDIA GPUs like the RTX 4090 offer the best balance of performance and price for most AI applications.

- Look for at least 12GB of VRAM when buying a GPU for deep learning projects, and 16GB or more if you plan to train models rather than only run them.

- Professional-grade GPUs provide better performance for serious AI research, while consumer cards work well for beginners.

AI is only getting more demanding, and the GPU you choose today will define what you’re capable of tomorrow. If you’re serious about AI research or development, investing in GPUs with ample VRAM, the latest Tensor cores, and power efficiency will pay off in faster training times and more ambitious projects. The RTX 5090 is already rewriting the rules for prosumers, while the H100 powers the AI cloud revolution.

Understanding GPU Architecture

GPU architecture forms the foundation of AI processing power. The components inside these specialized chips work together to handle the complex calculations needed for machine learning tasks at speeds far beyond what regular CPUs can achieve.

CUDA Cores and Tensor Cores

CUDA cores are the basic processing units in NVIDIA GPUs that handle general computing tasks. Think of them as small workers that process information in parallel. While a typical desktop CPU might have 4-16 cores, modern GPUs contain thousands of CUDA cores working simultaneously. Each CUDA core is a much simpler execution unit than a CPU core, though, so the two counts are not directly comparable.

Tensor cores are specialized units designed specifically for AI workloads. They accelerate the matrix multiply-accumulate operations at the heart of deep learning. On an H100 SXM, for example, NVIDIA rates standard FP32 throughput at about 67 TFLOPS but dense FP16 Tensor Core throughput at about 989 TFLOPS, roughly 15 times higher.
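A matrix multiplication of an (M × K) matrix by a (K × N) matrix takes about 2·M·N·K floating-point operations, one multiply and one add per term. The sketch below turns that count into a best-case runtime at a given peak rate. Its inputs are assumptions taken from NVIDIA's published H100 SXM peaks: about 67 TFLOPS FP32 and about 989 TFLOPS dense FP16 Tensor Core. Real kernels reach only a fraction of peak, so treat the results as lower bounds on runtime.

```python
def matmul_flops(m: int, n: int, k: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product: one multiply + one add per term."""
    return 2 * m * n * k


def best_case_ms(flops: int, peak_tflops: float) -> float:
    """Runtime in milliseconds if the GPU sustained its peak rate (it won't)."""
    return flops / (peak_tflops * 1e12) * 1e3


# Assumed peak rates for an H100 SXM (NVIDIA datasheet figures, dense).
PEAK_FP32_TFLOPS = 67.0
PEAK_FP16_TENSOR_TFLOPS = 989.0

flops = matmul_flops(8192, 8192, 8192)
fp32_ms = best_case_ms(flops, PEAK_FP32_TFLOPS)
tensor_ms = best_case_ms(flops, PEAK_FP16_TENSOR_TFLOPS)
print(f"{flops / 1e12:.2f} TFLOP per multiply")
print(f"FP32 CUDA cores: {fp32_ms:.2f} ms, FP16 Tensor Cores: {tensor_ms:.2f} ms")
print(f"Speedup at peak: {fp32_ms / tensor_ms:.1f}x")
```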

The RTX 4090, a top consumer GPU for AI, features 16,384 CUDA cores and 512 tensor cores. This combination allows it to handle both general computing and specialized AI tasks efficiently.

Tensor Core GPU and Hopper Architecture

NVIDIA’s Hopper architecture represents a major advancement in GPU design for AI processing. The H100, built on this architecture, is considered the gold standard for conversational AI and large-scale training.

Hopper introduces fourth-generation tensor cores with significant improvements in computational efficiency. These GPUs can process FP8 (8-bit floating point) calculations, which allows for faster training while maintaining accuracy.
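Hopper supports two FP8 variants. E4M3 (4 exponent bits, 3 mantissa bits) trades range for precision, and E5M2 does the opposite. NVIDIA's Transformer Engine typically uses E4M3 in the forward pass and E5M2 for gradients. The sketch below derives each format's range from its bit layout. It follows the convention that E5M2 reserves its top exponent for infinities and NaNs, as IEEE formats do, while E4M3 reserves only the all-ones bit pattern for NaN.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FP8Format:
    name: str
    exp_bits: int
    man_bits: int
    ieee_specials: bool  # True: top exponent field reserved for Inf/NaN (E5M2)

    @property
    def bias(self) -> int:
        return 2 ** (self.exp_bits - 1) - 1

    def max_normal(self) -> float:
        if self.ieee_specials:
            # Largest usable exponent is one below all-ones; mantissa may be all ones.
            top_exp = 2 ** self.exp_bits - 2
            top_man = 2 ** self.man_bits - 1
        else:
            # All-ones exponent is usable, but all-ones exponent + mantissa is NaN.
            top_exp = 2 ** self.exp_bits - 1
            top_man = 2 ** self.man_bits - 2
        return 2.0 ** (top_exp - self.bias) * (1 + top_man / 2 ** self.man_bits)

    def min_normal(self) -> float:
        return 2.0 ** (1 - self.bias)

    def min_subnormal(self) -> float:
        return self.min_normal() / 2 ** self.man_bits


E4M3 = FP8Format("E4M3", exp_bits=4, man_bits=3, ieee_specials=False)
E5M2 = FP8Format("E5M2", exp_bits=5, man_bits=2, ieee_specials=True)

for fmt in (E4M3, E5M2):
    print(f"{fmt.name}: max={fmt.max_normal():g}, "
          f"min normal={fmt.min_normal():g}, min subnormal={fmt.min_subnormal():g}")
```

The output shows the trade-off. E4M3 tops out at 448 with finer steps, while E5M2 reaches 57,344 with one fewer mantissa bit.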

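A key innovation in Hopper is the Transformer Engine, designed specifically to accelerate transformer models such as LLMs. It pairs the new Tensor Cores with software that chooses between FP8 and 16-bit precision for each layer. It also manages the scaling factors needed to keep values within FP8’s narrow range, improving speed while preserving accuracy.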
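Because FP8's range is so narrow, values must be scaled into it before casting. The sketch below simulates a simplified form of per-tensor scaling:

- Take the tensor's absolute maximum (amax).
- Scale so that amax maps to E4M3's largest value (448).
- Round each value to the nearest E4M3 number.
- Scale back.

It assumes round-to-nearest-even with saturation and uses the current tensor's amax directly. NVIDIA's Transformer Engine instead tracks an amax history across steps (delayed scaling), so this illustrates the idea rather than reproducing that implementation.

```python
import math

E4M3_MAX = 448.0
E4M3_MIN_EXP = -6   # exponent of the smallest normal E4M3 number
E4M3_MAN_BITS = 3


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3 value (round-half-to-even, saturating at +/-448)."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), E4M3_MAX)
    _, e = math.frexp(a)  # a = m * 2**e with 0.5 <= m < 1, so a lies in [2**(e-1), 2**e)
    # Spacing between neighbouring E4M3 values in this binade; subnormals share
    # the spacing of the smallest normal binade.
    step = 2.0 ** (max(e - 1, E4M3_MIN_EXP) - E4M3_MAN_BITS)
    return sign * min(round(a / step) * step, E4M3_MAX)


def fake_quantize_fp8(values: list[float]) -> tuple[list[float], float]:
    """Scale by 448 / amax, round to E4M3, then undo the scale."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) / scale for v in values], scale


small_activations = [1e-4, -5e-4, 2e-4, 3.3e-4]
naive = [round_to_e4m3(v) for v in small_activations]  # no scaling
scaled, scale = fake_quantize_fp8(small_activations)

print("naive cast:", naive)  # tiny values underflow to zero
print("scaled cast:", [f"{v:.3e}" for v in scaled])
print(f"scale factor: {scale:.1f}")
```

Without scaling, every value falls below E4M3's smallest subnormal and is lost. With scaling, each value survives with a relative error of at most 2^-4.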

The H100’s architecture also features improved interconnect technology, allowing multiple GPUs to work together seamlessly on massive AI workloads with reduced communication bottlenecks.

GDDR6 and VRAM

VRAM (Video Random Access Memory) is critical for AI workloads as it stores the model parameters and data being processed. The amount of VRAM directly affects what size models a GPU can handle without running out of memory.

GDDR memory is the standard in consumer and workstation GPUs: GDDR6X on the RTX 40 series and GDDR7 on the RTX 50 series. It delivers high bandwidth (about 1TB/s on the RTX 4090 and nearly 1.8TB/s on the RTX 5090), which suits the parallel processing nature of AI tasks.

Memory bandwidth matters as much as capacity for AI work. When running models like Stable Diffusion, higher bandwidth allows for faster data transfer between the GPU cores and memory, reducing bottlenecks.
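Bandwidth matters especially when a language model generates text one token at a time. Each new token requires reading essentially all of the model's weights from VRAM, which gives a rough ceiling: tokens per second ≈ memory bandwidth ÷ weight bytes. The sketch below assumes a hypothetical 7B-parameter model at 16-bit precision and the published peak bandwidths. It ignores KV-cache reads, batching, and compute limits, so real throughput will be lower.

```python
def decode_tokens_per_sec_ceiling(bandwidth_gbs: float, params_billion: float,
                                  bytes_per_param: float) -> float:
    """Upper bound on single-stream generation speed for a bandwidth-bound model.

    Assumes each new token streams every weight from VRAM exactly once and that
    nothing else (KV cache, compute, kernel overhead) costs time.
    """
    weight_gb = params_billion * bytes_per_param
    return bandwidth_gbs / weight_gb


# Assumed inputs: published peak bandwidths, hypothetical 7B model in FP16.
for card, bw in {"RTX 3090": 936, "RTX 4090": 1008, "RTX 5090": 1792}.items():
    ceiling = decode_tokens_per_sec_ceiling(bw, params_billion=7, bytes_per_param=2)
    print(f"{card}: at most ~{ceiling:.0f} tokens/s")
```

Halving the bytes per parameter (for example, with 8-bit weights) doubles this ceiling. That is one reason quantization speeds up local LLM inference.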

For reference, the RTX 4090 includes 24GB of GDDR6X memory, while professional options like the A100 offer up to 80GB, enabling work with much larger models and batch sizes.

NVIDIA L4 and Larger LLM Support

The NVIDIA L4 GPU represents a balanced approach to AI inference. It packs 24GB of GDDR6 memory into a low-profile card rated at 72W, offering good performance while consuming far less power than high-end options. This makes it popular for deploying large language models in production environments.

L4 GPUs use fourth-generation Tensor Cores with FP8 support. These accelerate the matrix multiplications inside transformer-based architectures, which power most modern LLMs, including the attention mechanisms central to these models.

Newer NVIDIA GPUs, including the L4, also support fine-grained structured sparsity. If a network’s weights are pruned so that at most two of every four consecutive values are nonzero, the Tensor Cores can skip the zeros and deliver up to twice the math throughput on those layers. The pruned weights can also be stored in compressed form, saving some memory, but the main benefit is faster math rather than a large increase in effective memory capacity.
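NVIDIA's structured sparsity uses a 2:4 pattern: in every group of four consecutive weights, at most two are nonzero. Producing that pattern is simple: keep the two largest-magnitude weights in each group and zero the other two. The sketch below does this on a flat Python list to show the pattern only. Real workflows prune with framework tooling and usually fine-tune afterwards to recover accuracy, and the speedup comes from the Tensor Cores, not from this code.

```python
def prune_2_of_4(weights: list[float]) -> list[float]:
    """Zero the two smallest-magnitude values in each consecutive group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for i in range(0, len(weights), 4):
        group = weights[i:i + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        pruned.extend(w if j in keep else 0.0 for j, w in enumerate(group))
    return pruned


w = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
print(prune_2_of_4(w))
```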

For organizations running multiple AI services, the L4’s efficiency means more inference capacity per server, reducing both hardware costs and energy consumption while maintaining the ability to run sophisticated models.

AI-Oriented GPU Features

Modern GPUs offer specialized features that make them powerful tools for AI tasks. These capabilities go beyond raw processing power to include specialized hardware acceleration, memory management, and software optimizations.

Ray Tracing and DLSS

Ray tracing technology has evolved beyond gaming to benefit AI applications. NVIDIA’s RT cores accelerate the ray-triangle intersection tests behind physically based rendering. For AI, this matters mainly when generating photorealistic synthetic images to train computer vision models, where realistic lighting and reflections help rendered data resemble real-world scenes.

Deep Learning Super Sampling (DLSS) uses AI to upscale lower-resolution images to higher resolutions with minimal quality loss. It runs on the Tensor Cores inside games and real-time rendering applications that integrate it. It is best seen as a showcase of fast on-device inference rather than a general-purpose research tool. For developers working with visual data, it illustrates how AI can:

- Enhance image resolution without rendering every pixel at full cost

- Deliver real-time results from a compact neural network

- Trade a small amount of quality for large efficiency gains

DLSS 3.5 added Ray Reconstruction, which replaces hand-tuned denoisers with an AI model. DLSS 4, launched alongside the RTX 50 series, moved to a transformer-based model and introduced Multi Frame Generation.

NVLink and Multi-GPU Setups

NVLink provides high-bandwidth connections between GPUs, enabling them to work together efficiently on complex AI tasks. The technology allows for:

- Data sharing speeds up to 900 GB/s on the H100 (total bidirectional), several times faster than a PCIe Gen 5 x16 slot at roughly 128 GB/s

- Unified memory access across multiple GPUs

- Seamless workload distribution

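For large language models and complex neural networks, multi-GPU setups are essential. NVIDIA dropped NVLink from the RTX 40 series, so the RTX 4090 communicates with other GPUs only over PCIe; the RTX 3090 was the last GeForce card with an NVLink bridge. Professional cards like the A100 can be connected in groups of up to 8 GPUs using NVSwitch technology.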

Multi-GPU setups allow researchers to scale their model training beyond what a single card can handle. This parallelization capability is crucial for training advanced models like those used in modern generative AI systems.
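Interconnect bandwidth matters for data-parallel training because, after each step, GPUs average their gradients with an all-reduce. In the common ring algorithm, each GPU sends and receives about 2·(N-1)/N times the gradient size. The sketch below turns that into a best-case time per step. The bandwidths are assumed per-direction figures: roughly 450 GB/s for H100 NVLink and 64 GB/s for a PCIe Gen 5 x16 link. Latency and overlap with computation are ignored.

```python
def ring_allreduce_seconds(grad_bytes: float, n_gpus: int, link_gbs: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce.

    Each GPU transmits 2 * (n - 1) / n * grad_bytes over its outgoing link
    (reduce-scatter followed by all-gather). `link_gbs` is per-direction GB/s.
    """
    if n_gpus < 2:
        return 0.0
    bytes_sent = 2 * (n_gpus - 1) / n_gpus * grad_bytes
    return bytes_sent / (link_gbs * 1e9)


# Hypothetical 7B-parameter model with FP16 gradients, 8 GPUs.
grad_bytes = 7e9 * 2
for link, gbs in {"NVLink (H100, ~450 GB/s per direction)": 450,
                  "PCIe Gen 5 x16 (~64 GB/s per direction)": 64}.items():
    t = ring_allreduce_seconds(grad_bytes, n_gpus=8, link_gbs=gbs)
    print(f"{link}: ~{t * 1e3:.0f} ms per all-reduce")
```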

GenAI and Advanced Features

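The latest GPUs come with specialized features for generative AI. NVIDIA’s Tensor cores provide tremendous acceleration for the matrix operations common in transformer models. Recent GeForce generations include:

- Fourth-generation Tensor cores with FP8 support (RTX 40 series), and fifth-generation Tensor cores adding FP4 (RTX 50 series)

- AI frame generation in games: DLSS 3 Frame Generation on the RTX 40 series and Multi Frame Generation on the RTX 50 series

- Tensor Core acceleration for the large matrix multiplications inside attention layers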

Specialized memory architectures also benefit GenAI tasks. HBM3e memory in data-center GPUs reaches 4.8TB/s on the H200 and about 8TB/s on the B200, which is critical for large model inference.

Advanced features like CUDA-X AI libraries provide optimized code paths for common AI operations. These pre-optimized routines help developers build more efficient models without low-level optimization work. Many GPUs now include dedicated hardware for specific AI operations, making them more energy-efficient for inference tasks.

GPUs in Deep Learning and AI Training

Graphics processing units (GPUs) have become essential hardware for AI tasks. They handle complex calculations in parallel, making them perfect for the math-heavy workloads of modern AI systems.

GPUs for Large Language Models

Large Language Models (LLMs) need enormous computing power to train and run effectively. NVIDIA dominates this space with their specialized hardware.

The NVIDIA A100 remains a widely deployed choice for professional AI tasks and LLM training, though the H100 and newer Blackwell GPUs now outperform it. It offers exceptional processing power and dedicated Tensor Cores that speed up deep learning tasks dramatically.

For those with budget constraints, the RTX 3060 (12GB) or 3060 Ti (8GB) provide good value. These consumer-grade GPUs offer decent performance for smaller models or fine-tuning compact pre-trained LLMs, and the 3060’s extra memory makes it the better pick for LLM work.

Memory is crucial when working with LLMs. A common recommendation is at least 12GB of VRAM, backed by 32GB of system RAM. The RTX 4090 has become popular because its 24GB of memory lets it handle larger models, or larger portions of a model, on a single card.
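Weights are not the only thing that must fit. During generation, an LLM also keeps a key-value (KV) cache whose size grows with context length. Its size is 2 (keys and values) × layers × KV heads × head dimension × tokens × bytes per value. The sketch assumes a Llama 2 7B-style configuration (32 layers, 32 KV heads, head dimension 128) with an FP16 cache. Models that use grouped-query attention have fewer KV heads and a proportionally smaller cache.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   tokens: int, batch: int = 1, bytes_per_value: int = 2) -> int:
    """Memory for keys and values across all layers for `tokens` positions."""
    return 2 * layers * kv_heads * head_dim * tokens * batch * bytes_per_value


GIB = 1024 ** 3

# Assumed Llama 2 7B-style shape, FP16 cache, batch size 1.
for context in (2048, 4096, 16384):
    size = kv_cache_bytes(layers=32, kv_heads=32, head_dim=128, tokens=context)
    print(f"{context:>6} tokens: {size / GIB:.1f} GiB")
```

At long contexts the cache can rival the quantized weights in size, so budget VRAM for both.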

Training Accelerated Computing

Training AI models requires specialized hardware setups focused on speed and efficiency.

NVIDIA GPUs remain the best supported option for machine learning libraries. They integrate seamlessly with popular frameworks like PyTorch and TensorFlow, which makes development faster and more reliable.

For professional setups, the NVIDIA A40 and RTX A6000 offer excellent performance for training complex models. These cards balance raw power with reasonable energy consumption.

Multi-GPU setups can dramatically cut training time. Using technologies like NVIDIA’s NVLink allows GPUs to share data rapidly, creating powerful training clusters that can tackle even the most demanding AI projects.

Inference and Real-time Processing

Inference—running trained models to get results—often has different hardware needs than training.

For real-time AI applications, lower-end GPUs can be sufficient if they have enough VRAM. The RTX 3060 with 12GB of memory offers good value for inference tasks without breaking the bank.

Speed is critical for applications like computer vision or real-time text generation. Modern GPUs include specialized hardware accelerators specifically designed to speed up AI inference tasks.

Power efficiency becomes more important for inference servers that run 24/7. The latest generation of GPUs offers better performance-per-watt ratios, reducing operating costs while maintaining speed.

Cloud-based inference solutions are also popular, allowing organizations to scale their AI deployment without massive hardware investments.

The Best GPUs for Different AI Uses

Selecting the right GPU for AI applications depends on your specific needs and budget. Different AI workloads require different hardware capabilities, from entry-level content creation to massive enterprise deployments.

Consumer GPUs for Content Creation and Gaming

The NVIDIA RTX 4090 stands out as the top consumer GPU for AI tasks. It offers excellent performance for both gaming and AI workloads like image generation or video editing. For those on a tighter budget, the RTX 3060 or 3060 Ti provide good value while still handling basic AI tasks effectively.

AMD’s offerings like the Radeon RX 7900 XTX also perform well but lack the same level of software support for AI frameworks that NVIDIA enjoys.

When choosing a consumer GPU for AI, consider VRAM capacity as a priority. Modern AI models are memory-hungry, and 8GB is now considered minimal for serious work. The RTX 4090 with 24GB VRAM gives much more headroom for larger models.

For paired gaming and AI use, the RTX 4070 hits a sweet spot of performance and price. It handles gaming at high settings while still providing enough power for hobby-level AI projects.

Professional GPUs for Scientific Simulations

Scientific simulations demand precision and sustained computational power. The NVIDIA A100 has long been a leader in this space, with 19.5 TFLOPS of FP64 Tensor Core performance and a massive 80GB memory option. It excels in complex simulations for physics, chemistry, and medical research.

The A6000, while less powerful than the A100, offers 48GB VRAM at a more accessible price point for research labs and universities. It’s particularly strong for scientific visualization workloads.

Key features for scientific GPUs include:

- Error-correcting code (ECC) memory for research reliability

- Double-precision floating-point performance for scientific accuracy

- Larger VRAM for handling complex datasets

For smaller research teams, the RTX A5000 provides a good balance of performance and cost. Many scientific applications benefit from multiple lower-tier cards running in parallel rather than a single high-end GPU.

Enterprise-Level Solution for AI Accelerators

Enterprise AI deployments typically require multi-GPU systems or specialized accelerators. NVIDIA’s DGX systems combine multiple A100 GPUs with optimized networking for large-scale AI training. These systems handle massive datasets and complex models that would overwhelm consumer hardware.

Google’s TPU (Tensor Processing Unit) offers an alternative to traditional GPUs for certain workloads. Available through Google Cloud, TPUs excel at specific deep learning tasks.

Enterprise solutions prioritize:

- Scalability across multiple units

- Power efficiency for data center deployment

- Reliability for 24/7 operation

- Advanced cooling solutions

The price-to-performance ratio looks different at this scale. Total cost of ownership, including power consumption and cooling requirements, becomes more important than the initial hardware cost.
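Power and cooling weigh heavily at scale, as a simple annual electricity estimate for 24/7 operation shows. The electricity price ($0.15/kWh) and the data-center PUE of 1.4 are illustrative assumptions; PUE is the overhead multiplier for cooling and power delivery. Substitute your own figures.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_cost(watts: float, price_per_kwh: float, pue: float = 1.0,
                       utilization: float = 1.0) -> float:
    """Yearly electricity cost of one device running around the clock.

    `pue` (power usage effectiveness) scales IT power up to include cooling and
    power-delivery overhead; `utilization` is the average fraction of `watts` drawn.
    """
    kwh = watts / 1000 * HOURS_PER_YEAR * utilization * pue
    return kwh * price_per_kwh


# Illustrative assumptions: $0.15/kWh, PUE 1.4, GPUs drawing their rated power.
for gpu, rated_watts in {"H100 SXM": 700, "L4": 72}.items():
    cost = annual_energy_cost(rated_watts, price_per_kwh=0.15, pue=1.4)
    print(f"{gpu}: ~${cost:,.0f} per year")
```

Multiplied across hundreds or thousands of GPUs over a multi-year service life, these recurring costs can approach the initial hardware cost.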

AI Accelerators for Specific Applications

Specialized AI accelerators target specific use cases with optimized hardware. Intel’s Gaudi accelerators, which came from its acquisition of Habana Labs, handle both training and inference; Intel discontinued its earlier Nervana Neural Network Processors in 2020 in favor of Gaudi.

Edge AI applications require efficient, low-power solutions. NVIDIA’s Jetson platform brings AI capabilities to devices with strict power and size constraints. The Jetson Xavier NX delivers impressive performance for computer vision and speech recognition in compact devices, and the newer Jetson Orin modules offer considerably more AI performance.

Mobile devices use even more specialized hardware. Apple’s Neural Engine, built into its A-series and M-series chips, runs on-device AI features like face recognition without draining battery life.

For custom applications, FPGAs (Field Programmable Gate Arrays) offer flexibility. They can be programmed for specific AI algorithms, potentially offering better performance per watt than general-purpose GPUs for certain tasks.

Comparison of Top GPU Models for AI

GPU selection is critical for AI workloads as different models offer varying performance, memory capacity, and cost efficiency. The right choice depends on specific requirements for training or inference tasks.

NVIDIA RTX 50-Series

The RTX 50-Series is NVIDIA’s current generation of consumer GPUs, built on the Blackwell architecture. The flagship RTX 5090 and the RTX 5080 launched in January 2025, bringing fifth-generation Tensor Cores and faster GDDR7 memory.

Independent reviews have generally measured the RTX 5090 at roughly 30% faster than the RTX 4090 in many workloads. Memory-bandwidth-bound AI tasks such as LLM token generation tend to benefit more from its faster memory. Key improvements include:

- 32GB of GDDR7 VRAM, up from 24GB on the RTX 4090

- Nearly 1.8x the memory bandwidth (1,792 GB/s vs. 1,008 GB/s)

- Fifth-generation Tensor Cores with FP4 support, aimed at transformer models

- Better integration with NVIDIA’s AI software stack

The trade-off is power: the RTX 5090 is rated at 575W, up from 450W on the RTX 4090. For professionals hosting and developing AI models locally, the 50-Series is a substantial upgrade.

AMD Radeon Solutions

AMD has made significant strides in the AI GPU market. Its RDNA 3-based Radeon RX 7900 XTX, with 24GB of VRAM, remains AMD’s highest-memory consumer card for AI applications, while the newer RDNA 4 cards (the RX 9070 series, launched in 2025) target the mid-range.

AMD’s advantages include:

- More competitive pricing than equivalent NVIDIA options

- Strong raw compute performance

- Improving software support for major AI frameworks

However, AMD GPUs still face challenges with AI workload compatibility. Their ROCm platform continues to develop but lacks the mature ecosystem of CUDA.

For specific workloads where AMD compatibility is confirmed, cards like the Radeon RX 7900 XTX offer excellent value. AMD’s next-generation cards may narrow the gap with NVIDIA further as software support improves.

Legacy GPUs and Their Current Relevance

Older GPU models remain surprisingly relevant for certain AI tasks. The RTX 3080 and RTX 3090 can still handle many AI workloads effectively, and even the GTX 1080 Ti, which lacks Tensor Cores, can run smaller models.

RTX 30-Series value proposition:

- RTX 3090 (24GB VRAM) remains capable of running medium-sized language models

- RTX 3080 offers strong performance per dollar for smaller models

- Much more affordable on secondary markets

The RTX 20-Series brought NVIDIA’s Tensor Cores, first introduced in the data-center Volta V100, to consumer cards, but it falls behind in efficiency. For basic inference tasks or learning AI development, these older cards provide an entry point without the premium price of newer models.

Many professionals now use multiple legacy GPUs in parallel to achieve performance similar to newer single cards. Two RTX 3090 cards can match or exceed a single RTX 4090 for some workloads at comparable cost.

Evaluating GPU Performance

When selecting a GPU for AI workloads, understanding performance metrics is crucial. The right evaluation techniques help identify which graphics cards will deliver the best results for specific AI applications.

Benchmarking GPUs With Standard Workloads

Standard benchmarks provide a consistent way to compare different GPUs. Tools like MLPerf and TensorFlow benchmarks measure how quickly a GPU can train common AI models. These tests show real-world performance rather than just theoretical capabilities.

NVIDIA’s GPUs typically score well in these tests, helped by their mature CUDA ecosystem. For example, the RTX 4090 outperforms the older Titan RTX despite launching at a lower price ($1,599 versus $2,499). AMD GPUs are improving but still lag behind NVIDIA in most AI tasks.

When reading benchmark results, pay attention to:

- Training time for standard models

- Inference speed for real-time applications

- Performance per watt for efficiency concerns

Benchmark scores should be compared within the same test suite, as different benchmarks use different methodologies.
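If you run your own comparisons, a consistent harness matters as much as the benchmark suite. The sketch below shows a minimal pattern:

- A few untimed warm-up runs, which absorb one-time costs such as caching and memory allocation.
- Repeated timed runs.
- The median reported alongside derived throughput.

It times a generic Python callable. When timing GPU work you would also need to synchronize the device before reading the clock, for example with `torch.cuda.synchronize()` in PyTorch, because GPU kernels launch asynchronously.

```python
import statistics
import time
from typing import Callable


def benchmark(fn: Callable[[], None], items_per_call: int,
              warmup: int = 3, repeats: int = 10) -> dict[str, float]:
    """Time `fn` and report median latency, throughput, and run-to-run spread.

    `items_per_call` is how many samples (images, tokens, ...) one call
    processes, used to convert latency into throughput.
    """
    for _ in range(warmup):  # untimed runs to absorb one-time setup costs
        fn()
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    median_s = statistics.median(timings)
    return {
        "median_ms": median_s * 1e3,
        "throughput_per_s": items_per_call / median_s,
        "spread_ms": (max(timings) - min(timings)) * 1e3,
    }


# Stand-in workload: a CPU-bound loop, purely to exercise the harness.
def workload() -> None:
    sum(i * i for i in range(200_000))


print(benchmark(workload, items_per_call=200_000))
```

Reporting the spread alongside the median makes thermal throttling or background interference visible instead of hiding it in an average.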

AI Processing and Multi-Tasking Efficiency

Modern AI workloads often require running multiple processes simultaneously. A GPU’s ability to handle these concurrent tasks significantly impacts overall performance.

Core count and clock speed matter, but driver efficiency and architecture are equally important. NVIDIA’s latest architectures include specialized Tensor Cores that dramatically speed up matrix operations common in AI work.

GPU utilization is another key metric. A card running at 100% utilization with poor cooling will throttle its performance. Effective cooling solutions keep performance consistent during long training sessions.

Multi-GPU setups can offer linear scaling for some workloads, but this depends on:

- The interconnect speed between cards

- How well the software parallelizes the workload

- Memory management across multiple GPUs
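Amdahl's law gives a quick upper bound on that scaling. Suppose a fraction p of each step parallelizes perfectly across n GPUs and the rest (communication, data loading, synchronization) does not. The speedup is then 1 / ((1 - p) + p / n). The parallel fractions below are hypothetical; measure your own workload to estimate p.

```python
def amdahl_speedup(parallel_fraction: float, n_gpus: int) -> float:
    """Best-case speedup when only `parallel_fraction` of the work scales."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / n_gpus)


for p in (0.99, 0.95, 0.80):  # hypothetical parallel fractions
    row = ", ".join(f"{n} GPUs: {amdahl_speedup(p, n):.2f}x" for n in (2, 4, 8))
    print(f"p={p:.2f} -> {row}")
```

Even at p = 0.95, eight GPUs deliver under 6x. This is why interconnect speed and software parallelization dominate multi-GPU results.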

Memory Bandwidth and Data Throughput

Memory limitations often become bottlenecks in AI performance before raw processing power does. Three crucial aspects to consider are:

VRAM capacity: Larger models require more memory. Training a model that doesn’t fit in VRAM forces slower system RAM use or model splitting.

Memory bandwidth: How quickly data moves between GPU memory and processing cores affects overall speed. Higher bandwidth (measured in GB/s) means the processing cores spend less time waiting for data.

Memory type: GDDR6X offers higher bandwidth than GDDR6, and GDDR7 (on the RTX 50 series) goes further still. HBM (HBM2e, HBM3, and HBM3e in data-center cards) provides the highest throughput but at greater cost.

For large language models and image generation, memory requirements grow significantly. Many cutting-edge models need 24GB+ of VRAM to run efficiently without compromises.
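For training, the model state alone usually dwarfs the weights. With mixed-precision training and the Adam optimizer, a common accounting (popularized by Microsoft's ZeRO work) is about 16 bytes per parameter:

- 2 bytes for FP16 weights
- 2 bytes for FP16 gradients
- 4 bytes for an FP32 master copy of the weights
- 8 bytes for Adam's two FP32 moment estimates

The sketch below applies that rule. Activations, which depend on batch size and sequence length, come on top and are not estimated here.

```python
# Bytes per parameter for mixed-precision training with Adam (excluding activations).
BYTES_PER_PARAM = {
    "fp16 weights": 2,
    "fp16 gradients": 2,
    "fp32 master weights": 4,
    "adam moments (fp32 m and v)": 8,
}


def training_state_gb(params_billion: float) -> float:
    """Weight + gradient + optimizer state in GB (10**9 bytes), no activations."""
    return params_billion * sum(BYTES_PER_PARAM.values())


def inference_weights_gb(params_billion: float, bytes_per_param: float = 2) -> float:
    """FP16 weights only, for comparison."""
    return params_billion * bytes_per_param


for params in (1, 7, 13):  # hypothetical model sizes, in billions of parameters
    print(f"{params}B params: inference weights ~{inference_weights_gb(params):.0f} GB, "
          f"training state ~{training_state_gb(params):.0f} GB")
```

Even a 1B-parameter model needs about 16 GB before activations. That is why fine-tuning multi-billion-parameter models on a single consumer card typically relies on parameter-efficient fine-tuning, quantization, or offloading.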

Rendering and Visualization with GPUs

GPUs excel at handling complex visual data processing tasks beyond AI training. Their parallel processing capabilities make them perfect for creating and manipulating images and videos quickly.

Real-Time Graphics and Game Development

Modern games and real-time applications demand powerful GPUs to render detailed environments instantly. The NVIDIA RTX series, particularly the 4090, offers exceptional performance for developers and gamers alike. These cards include specialized cores for ray tracing, allowing for realistic lighting and shadows.

Game engines like Unreal and Unity benefit from GPU acceleration. Developers can see their changes in real-time rather than waiting for lengthy renders.

For professionals, NVIDIA’s RTX A-series provides stability and certified drivers for development tools. These cards are built to handle continuous workloads without throttling.

Entry-level options like the RTX 4060 Ti offer good performance for indie developers. The 16GB VRAM variant is especially useful for working with complex game assets and textures.

High-Resolution Image Processing

AI image generation and photo editing rely heavily on GPU power. The RTX 4090 and 4080 shine here with their large VRAM capacities and processing cores.

Adobe Photoshop and similar programs use GPU acceleration for filters and transformations. Working with 4K or 8K images becomes much smoother with a capable graphics card.

For professionals processing batches of high-resolution images, dual-GPU setups with cards like the 3090 can offer excellent price-to-performance ratios. These setups allow for multitasking across resource-intensive applications.

Budget-conscious users can consider the RTX 4060 Ti with 16GB VRAM. This card provides enough memory for most image processing tasks at a lower price point.

Video Editing and Encoding

Video professionals benefit greatly from powerful GPUs. The RTX 4080 and 4090 excel at timeline scrubbing, effects rendering, and encoding.

NVIDIA’s NVENC encoder on these cards speeds up export times dramatically. A project that might take hours on a CPU can finish in minutes with proper GPU acceleration.

Programs like DaVinci Resolve and Adobe Premiere Pro rely heavily on GPU power for real-time playback of edited footage. This is especially true when working with 4K or 8K video.

For studios handling multiple video projects, workstations with RTX A-series GPUs provide reliability and certified drivers for professional applications. These cards are designed for continuous operation under heavy loads.

The RTX 4060 Ti offers a good entry point for video editors on a budget, though more demanding workflows may require stepping up to more powerful options.

Future of GPUs in AI

GPU technology continues to evolve rapidly to meet the growing demands of artificial intelligence applications. The next generation of graphics processors will focus on specialized architectures, improved efficiency, and sustainable computing practices.

Advancements in AI Accelerators

AI accelerators are evolving beyond traditional GPU designs to better handle complex machine learning tasks. NVIDIA’s next-generation chips will likely improve upon their current Tensor Core technology to achieve faster matrix multiplications. These specialized cores are crucial for AI model training and inference.

AMD and Intel are investing heavily in their AI accelerator technologies to compete with NVIDIA’s dominance. Both companies are developing custom silicon specifically optimized for large language models and computer vision.

Emerging competitors like Graphcore and Cerebras are creating entirely new processor architectures designed from the ground up for AI workloads. Their innovative approaches include massive parallel processing capabilities and on-chip memory arrangements that minimize data movement.

Redesigning GPUs for Machine Learning Workloads

Future GPUs will feature architectures specifically tailored to machine learning rather than adapting graphics processing designs. Mixed-precision computing will become standard, allowing AI models to use different levels of numerical precision for different operations.

Memory bandwidth improvements will address the current bottlenecks in model training. HBM3 and HBM3e (High Bandwidth Memory) already power today’s data-center GPUs, and next-generation HBM4 is expected to provide even faster data access and reduce training times for large models.

Interconnect technologies will evolve to enable better scaling across multiple GPUs. This will make distributed training more efficient for massive AI systems that require hundreds or thousands of processors working together.

The lines between CPUs and GPUs may blur with the rise of heterogeneous computing, where specialized units for different AI tasks exist within a single chip package.

Sustainable AI with Energy-Efficient GPUs

Energy efficiency is becoming a critical focus as AI systems grow larger. Future GPUs will use advanced manufacturing processes (3nm and beyond) to reduce power consumption while increasing performance.

Smart power management features will allow GPUs to dynamically adjust their energy use based on workload demands. This includes selectively powering down unused portions of the chip during inference tasks.

Liquid cooling technologies will become more common for AI data centers, allowing GPUs to run at higher clock speeds without overheating.

Carbon-aware computing will influence GPU design, with chips that can adjust their performance based on the availability of renewable energy sources. This approach helps reduce the carbon footprint of large AI training runs.
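The same idea can also be applied in software, outside the chip: a scheduler can delay a flexible training run until the grid is cleaner. This sketch picks the start hour that minimizes total emissions for a fixed-length job, given an hourly carbon-intensity forecast. The forecast values, the function name, and the assumption of eight GPUs drawing a constant 700 W (the H100 TDP cited earlier) are all hypothetical.

```python
def best_start_hour(forecast_g_per_kwh: list[float], job_hours: int,
                    power_kw: float) -> tuple[int, float]:
    """Return (start_hour, grams_co2) for the lowest-emission contiguous window.

    Uses a sliding window over the hourly forecast and assumes constant power draw.
    """
    if job_hours <= 0 or job_hours > len(forecast_g_per_kwh):
        raise ValueError("job must fit inside the forecast window")
    window = sum(forecast_g_per_kwh[:job_hours])
    best_start, best_sum = 0, window
    for start in range(1, len(forecast_g_per_kwh) - job_hours + 1):
        # Slide one hour: add the newest hour, drop the oldest one.
        window += forecast_g_per_kwh[start + job_hours - 1] - forecast_g_per_kwh[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    # Each slot is one hour, so (g/kWh summed over hours) * kW = grams of CO2.
    return best_start, best_sum * power_kw

# Hypothetical 12-hour forecast in g CO2/kWh, with a midday dip from solar power.
forecast = [450, 430, 400, 350, 280, 200, 180, 190, 260, 340, 410, 440]
start, grams = best_start_hour(forecast, job_hours=3, power_kw=8 * 0.7)
print(f"start at hour {start}, about {grams / 1000:.1f} kg CO2")
```

Starting the job at the cleanest window instead of immediately cuts its emissions by more than half with this made-up forecast; real deployments would pull forecasts from a grid-data service.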

Chipmakers are already setting ambitious targets for significantly higher AI performance per watt in their 2025-2026 product generations.

Frequently Asked Questions

GPUs for AI are evolving quickly, with new models and capabilities arriving regularly. The right GPU depends on your specific needs, your budget, and the scale of the AI projects you’re working on.

What are the top-performing GPUs for deep learning and AI tasks in 2025?

The NVIDIA H100 leads the pack for high-end AI computing in 2025. This powerhouse GPU offers exceptional performance for complex deep learning models and large-scale AI projects.

The AMD Instinct MI300A has also proven to be a strong competitor, particularly for research institutions and enterprise applications that need massive parallel processing power.

For slightly less demanding but still professional AI work, the NVIDIA RTX 4080 and RTX 4090 provide excellent performance with their dedicated Tensor Cores.

Which GPUs offer the best value for AI research on a budget?

The NVIDIA RTX 3080 Ti remains a strong value option in 2025, offering 12GB of GDDR6X VRAM at a more accessible price point than newer models. This card handles most medium-sized AI models efficiently.

AMD’s mid-range offerings provide good alternatives for researchers with limited budgets. These cards may lack some NVIDIA-specific features but compensate with competitive pricing.

For entry-level AI research, previous generation cards like the NVIDIA RTX 3070 still perform adequately for smaller models and educational purposes.
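Whether a model fits on a budget card is mostly arithmetic: for inference, the weights alone need parameters × bytes per parameter, plus headroom for activations and framework overhead. This sketch checks a hypothetical 7-billion-parameter model at several precisions against a 12 GB card and an 8 GB card; the 20% overhead factor and the helper names are rough assumptions, not measured figures.

```python
def weights_gb(num_params: float, bits_per_param: int) -> float:
    """Memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

def fits(num_params: float, bits_per_param: int, vram_gb: float,
         overhead: float = 1.2) -> bool:
    """Rough inference check: weights plus an assumed 20% overhead must fit in VRAM."""
    return weights_gb(num_params, bits_per_param) * overhead <= vram_gb

params = 7e9  # hypothetical 7B-parameter model
for bits in (16, 8, 4):
    need = weights_gb(params, bits)
    print(f"{bits:>2}-bit: {need:4.1f} GB weights | "
          f"12 GB card: {fits(params, bits, 12)} | 8 GB card: {fits(params, bits, 8)}")
```

Under these assumptions the model does not fit either card at 16-bit precision, fits the 12 GB card at 8-bit, and fits both at 4-bit, which is why quantization is so common on consumer GPUs.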

How does the NVIDIA A100 compare to newer models for AI applications?

The NVIDIA A100, though released before the H100, remains relevant for many AI workloads. It is available with up to 80GB of HBM2e memory (a 40GB version also exists) and offers strong Tensor Core performance that still meets the needs of most current AI applications.

Newer models like the H100 provide approximately 2-3x performance improvements over the A100 in specific AI tasks. However, the A100’s price has decreased, making it a compelling option for those who don’t need the absolute latest technology.
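Whether the newer card is the better deal depends on the ratio of speed to price. One simple comparison is cost per finished job: hourly price times job length, divided by relative speed. The hourly prices, the 100-hour job, and the 2.5x speedup (picked from the 2-3x range above) are hypothetical placeholders, not quotes; substitute current rental or amortized purchase costs.

```python
def cost_per_job(hourly_price: float, baseline_job_hours: float,
                 speedup: float = 1.0) -> float:
    """Cost to finish a job that takes baseline_job_hours on the reference GPU."""
    return hourly_price * baseline_job_hours / speedup

job_hours_on_a100 = 100  # hypothetical job length on an A100
a100 = cost_per_job(hourly_price=1.50, baseline_job_hours=job_hours_on_a100)
h100 = cost_per_job(hourly_price=3.00, baseline_job_hours=job_hours_on_a100, speedup=2.5)
print(f"A100: ${a100:.0f}, H100: ${h100:.0f}")
# The faster card is cheaper per job only when its price ratio (here 2.0)
# is below its speedup (here 2.5).
```

With these numbers the H100 finishes the job for less despite costing twice as much per hour; if the A100's hourly price fell below $1.20, the comparison would flip in its favor.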

The A100’s architecture still supports major AI frameworks and libraries, ensuring compatibility with most current research applications.

What are the capabilities of the NVIDIA H100 in generative AI projects?

The NVIDIA H100 excels in generative AI with its fourth-generation Tensor Cores that dramatically speed up transformer-based models like those used in image generation and large language models.

For text-to-image and other diffusion models, the H100 can process iterations up to 3x faster than previous-generation cards. This acceleration significantly reduces training and inference times for complex generative projects.

The H100’s 80GB of HBM3 memory allows it to handle larger batch sizes and more complex model architectures than most other available GPUs.

Can you recommend a GPU with high memory capacity for large AI datasets?

The NVIDIA H100 with its 80GB configuration stands out for handling extremely large datasets and models. Its high-bandwidth HBM3 memory provides both capacity and speed needed for data-intensive AI applications.
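Capacity needs grow quickly during training, because the GPU holds far more than the weights. A widely used rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter: 2 for FP16 weights, 2 for FP16 gradients, and 12 for FP32 optimizer state (a master copy of the weights plus Adam's two moment estimates). This sketch applies that rule and ignores activations, which grow with batch size, so treat its results as lower bounds; the constant and function names are illustrative.

```python
# fp16 weights + fp16 grads + fp32 master weights + Adam first and second moments
BYTES_PER_PARAM_MIXED_ADAM = 2 + 2 + 4 + 4 + 4

def training_state_gb(num_params: float,
                      bytes_per_param: int = BYTES_PER_PARAM_MIXED_ADAM) -> float:
    """Weights + gradients + optimizer state in GB, excluding activations."""
    return num_params * bytes_per_param / 1e9

def max_params_that_fit(vram_gb: float,
                        bytes_per_param: int = BYTES_PER_PARAM_MIXED_ADAM) -> float:
    """Largest parameter count whose training state alone fits in vram_gb."""
    return vram_gb * 1e9 / bytes_per_param

print(f"7B model needs ~{training_state_gb(7e9):.0f} GB before activations")
print(f"80 GB holds training state for ~{max_params_that_fit(80) / 1e9:.0f}B parameters")
```

By this estimate, even an 80GB card can fully train only about a 5-billion-parameter model on its own, which is why larger training runs split work across several GPUs.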

For those needing even more memory, multi-GPU setups linked with NVLink let a model or dataset be split across several cards, with high-bandwidth GPU-to-GPU connections keeping the data exchanged between them moving quickly.
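One simple way to split a model, in the spirit of pipeline parallelism, is to assign contiguous blocks of layers to each GPU so that none exceeds its memory budget; fast links then carry activations between neighboring stages. The greedy sketch below illustrates that idea. The per-layer sizes, the 80 GB budget, and the function name are hypothetical, and real frameworks also balance compute per stage and reserve room for activations.

```python
def partition_layers(layer_gb: list[float], gpu_capacity_gb: float) -> list[list[int]]:
    """Greedily pack consecutive layers onto GPUs without exceeding capacity.

    Returns a list of stages; each stage holds the layer indices placed on one GPU.
    Layers stay contiguous because pipeline stages pass activations forward in order.
    """
    stages: list[list[int]] = [[]]
    used = 0.0
    for i, size in enumerate(layer_gb):
        if size > gpu_capacity_gb:
            raise ValueError(f"layer {i} ({size} GB) exceeds a single GPU's memory")
        if used + size > gpu_capacity_gb:
            stages.append([])  # current GPU is full: start filling the next one
            used = 0.0
        stages[-1].append(i)
        used += size
    return stages

# Hypothetical model: 40 identical layers of 4.5 GB each (180 GB in total).
stages = partition_layers([4.5] * 40, gpu_capacity_gb=80)
print(f"{len(stages)} GPUs needed; layers per GPU: {[len(s) for s in stages]}")
```

Here each GPU takes 17 layers (76.5 GB) before the next layer would overflow it, so the model spans three cards, with the last one only partly filled.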

The AMD Instinct MI250X, with 128GB of HBM2e memory (64GB on each of its two dies), offers another high-memory alternative for specialized research applications that process enormous datasets.

What are the considerations for choosing a GPU for AI gaming versus research?

AI research requires GPUs with high VRAM capacity and tensor processing capabilities. Memory bandwidth and size are often more important than raw gaming performance metrics.

For AI gaming applications, consumer-grade RTX cards often provide the best balance. They offer enough AI acceleration through DLSS and other gaming-focused AI features while maintaining high frame rates.

Thermal design and power consumption differ significantly between research and gaming GPUs. Research cards are optimized for sustained computational loads, while gaming cards balance performance with heat management for varying workloads.