Nvidia is making waves again with its newest AI chips. At the recent GTC 2025 conference, CEO Jensen Huang revealed two exciting additions to the company’s lineup: Blackwell Ultra and Vera Rubin. These powerful new chips represent a significant leap forward in AI computing capabilities, with Blackwell Ultra scheduled to ship in the second half of 2025 and Vera Rubin following in late 2026.

The Blackwell Ultra GB300 targets AI server businesses, continuing Nvidia’s dominance in the data center market. This isn’t just an incremental update – it’s positioned as a major advancement for companies building large AI systems. Huang’s announcement highlights Nvidia’s strategy of regular innovation to stay ahead of competitors.

Looking even further ahead, the Vera Rubin Ultra chip (slated for late 2027) promises to deliver computing power that’s 14 times greater than the current Blackwell architecture. This roadmap shows Nvidia’s commitment to pushing AI hardware boundaries for years to come, maintaining its position as the leading provider of chips powering the artificial intelligence revolution.

Blackwell Ultra GB300: Pushing AI Processing to New Heights

Nvidia’s new Blackwell Ultra GB300 represents a significant leap forward in AI hardware. Building on the already formidable Blackwell architecture, the Ultra version promises unmatched speed, efficiency, and memory bandwidth tailored specifically for AI workloads.

At its core, the GB300 features a dual-die design, offering a total of 20 petaflops of AI performance—double the output of the original Blackwell GB200. With a staggering 288GB of HBM3e memory, it caters to the ever-expanding size of AI models, making it a vital tool for large language models (LLMs), recommendation systems, and advanced data analytics.

Key Specifications: Blackwell Ultra GB300

| Feature | Blackwell GB200 | Blackwell Ultra GB300 |
|---|---|---|
| AI Performance (FP4) | 10 petaflops | 20 petaflops |
| Memory (HBM3e) | 192GB | 288GB |
| Compute Architecture | Blackwell | Blackwell Ultra |
| Release | 2024 | Second half of 2025 |
| Power Efficiency | Improved | 2x more efficient (Nvidia claim) |

This increase in computational power doesn’t come at the cost of efficiency. Nvidia claims the Blackwell Ultra is twice as energy efficient as its predecessor, addressing one of the major concerns in modern data center operations—power consumption.
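The generational gains quoted above can be checked with quick arithmetic. The sketch below simply takes the figures from the specification table at face value; the dictionary keys and the `uplift` helper are illustrative names, not anything published by Nvidia.

```python
# Figures copied from the specification table in this article.
gb200 = {"fp4_petaflops": 10, "hbm3e_gb": 192}
gb300 = {"fp4_petaflops": 20, "hbm3e_gb": 288}


def uplift(new, old):
    """Return how many times larger `new` is than `old`."""
    return new / old


perf_ratio = uplift(gb300["fp4_petaflops"], gb200["fp4_petaflops"])
mem_ratio = uplift(gb300["hbm3e_gb"], gb200["hbm3e_gb"])

print(f"FP4 performance uplift: {perf_ratio:.2f}x")
print(f"Memory capacity uplift: {mem_ratio:.2f}x")
```

On these numbers, compute doubles while memory capacity grows by half, so the compute available per gigabyte of memory rises from one generation to the next.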

AI Workloads That Benefit from GB300

- Large Language Models (LLMs): With massive memory bandwidth and low latency, training and inferencing become significantly faster.

- Recommendation Systems: Improves the speed and accuracy of systems used in e-commerce and social media platforms.

- Scientific Simulations: Enables complex calculations for climate modeling, drug discovery, and more.

Vera Rubin Architecture: The Next Generation of AI Superchips

Following Blackwell Ultra, Nvidia introduced its future roadmap with the Vera Rubin architecture, slated for release in 2026. This architecture is expected to usher in a new era of AI compute capabilities, specifically designed for the next wave of AI and machine learning models that demand even greater scale.

Named after the astronomer whose measurements of galaxy rotation provided key evidence for dark matter, Vera Rubin represents a shift toward extreme scalability. Nvidia has revealed that the Rubin platform will deliver over 50 petaflops of AI performance in its standard version, with an Ultra variant already planned.

Vera Rubin and Rubin Ultra: A Glimpse Into the Future

| Generation | Performance (FP4) | Memory (HBM4) | Release Year |
|---|---|---|---|
| Vera Rubin | 50 petaflops | 288GB | 2026 |
| Rubin Ultra | 100 petaflops | ~1.1TB | 2027 |

Nvidia’s CEO Jensen Huang described Rubin as the “engine” for a 100x increase in AI compute capability by the end of the decade. He also emphasized Rubin’s use of advanced packaging technologies and cutting-edge interconnects to facilitate faster data movement between chips, a critical factor in large-scale AI systems.

How Blackwell and Rubin Fit into Nvidia’s Data Center Vision

Nvidia’s announcements aren’t just about raw speed; they’re about rethinking the data center. Both Blackwell and Rubin are designed with Nvidia’s DGX SuperPOD architecture in mind. These supercomputers use NVLink and NVSwitch fabrics to enable massive GPU clusters that operate as a single, unified system.

DGX SuperPOD with Blackwell Ultra and Rubin

| Platform | Max GPUs | Network Interconnect | Peak AI Performance |
|---|---|---|---|
| Blackwell Ultra POD | 1024 | NVLink/NVSwitch | 20 Exaflops (AI FP4) |
| Rubin Ultra POD | TBD | Next-gen NVLink | Estimated 100 Exaflops |

These architectures allow enterprises to deploy data centers capable of running trillion-parameter models at scale, offering solutions for industries ranging from healthcare to autonomous vehicles.
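As a back-of-envelope check, the pod-level figure and the trillion-parameter claim can both be worked out from the per-chip numbers in this article. The sketch below assumes perfect linear scaling across GPUs and counts only model weights stored in FP4; both are simplifications, and the constant names are illustrative.

```python
import math

GPUS_PER_POD = 1024      # "Max GPUs" for the Blackwell Ultra POD, per this article
PFLOPS_PER_GPU = 20      # FP4 petaflops per Blackwell Ultra, per this article
HBM_PER_GPU_GB = 288     # HBM3e capacity per Blackwell Ultra, per this article

# Peak pod throughput under ideal linear scaling (real clusters fall short of this).
pod_exaflops = GPUS_PER_POD * PFLOPS_PER_GPU / 1000

# Weight footprint of a one-trillion-parameter model stored in FP4.
PARAMS = 10**12
BYTES_PER_FP4_PARAM = 0.5                           # 4 bits is half a byte
weights_gb = PARAMS * BYTES_PER_FP4_PARAM / 10**9   # decimal gigabytes

# GPUs needed just to hold the weights; activations, KV cache,
# and optimizer state are deliberately ignored here.
min_gpus_for_weights = math.ceil(weights_gb / HBM_PER_GPU_GB)

print(f"Pod peak: {pod_exaflops:.2f} exaflops FP4")
print(f"1T-parameter FP4 weights: {weights_gb:.0f} GB")
print(f"Minimum GPUs to hold weights: {min_gpus_for_weights}")
```

The pod estimate lands at about 20 exaflops, matching the table, and the weights of a trillion-parameter FP4 model would not fit in a single GPU's memory, which is why multi-GPU fabrics matter for models of this size.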

What It Means for AI Developers and Enterprises

For AI developers, these advancements reduce the training time of large models from weeks to days—or even hours. Enterprises benefit from higher efficiency, potentially cutting data center costs by millions of dollars annually while reducing their carbon footprint.

Highlights for Enterprises

- Shorter AI Model Training Times: Accelerated time-to-market for AI applications.

- Energy Efficiency Gains: Reduced power consumption equals lower operational expenses.

- Scalability: Seamless scaling from small deployments to massive GPU clusters.

Jensen Huang’s Vision: AI at the Center of Everything

During his keynote, Jensen Huang outlined an ambitious vision: a world where AI accelerates everything. From drug discovery that takes months instead of years, to weather forecasting that can predict extreme events days in advance, Nvidia’s Blackwell and Rubin architectures are positioned to be the engines that power this future.

And with Rubin Ultra promising 100 petaflops of FP4 performance and roughly 1.1 terabytes of memory, the future of AI computing is not just powerful; it is transformative.

Key Takeaways

- Nvidia’s new Blackwell Ultra chips will ship in the second half of 2025, followed by Vera Rubin chips in late 2026.

- CEO Jensen Huang positions these new chips as critical advancements for companies building next-generation AI systems.

- The Vera Rubin Ultra chip promises 14 times more computing power than the current Blackwell architecture when it arrives in late 2027.

Nvidia’s Blackwell Ultra and Rubin AI Chips Unveiled

Nvidia has revealed two groundbreaking AI chips that promise to reshape the landscape of artificial intelligence computing. These new processors represent significant leaps in performance and efficiency for AI model training and deployment.

Revolutionizing the GPU Landscape

Blackwell Ultra GB300 marks Nvidia’s latest advancement in AI chip technology. It delivers an impressive 20 petaflops of AI performance, matching the capabilities of the original Blackwell but in a more refined package. This chip is scheduled for release in the second half of 2025, positioning Nvidia to maintain its leadership in the AI hardware market.

The Vera Rubin chip represents the next generation beyond Blackwell. Expected to launch in late 2026, it builds upon Blackwell’s architecture with significant improvements. Rubin is designed to handle increasingly complex AI models and computational demands.

Both chips arrive at a critical moment as AI applications expand across industries. They address growing needs for processing power in data centers and AI research facilities worldwide.

Technical Specifications and Performance

The Blackwell Ultra maintains the 20 petaflops performance of its predecessor but likely with improved efficiency and thermal management. It represents a refined version of Nvidia’s current flagship AI processor.

Vera Rubin takes a substantial leap forward with 288GB of high-bandwidth memory 4 (HBM4), upgrading from the HBM3e found in Blackwell Ultra. This memory upgrade will significantly boost data processing speeds and model capacity.

Nvidia also announced new photonics switches alongside these chips. These switches offer:

- Greater power efficiency

- Improved signal integrity

- Enhanced network resiliency

These technical improvements will allow AI researchers and companies to build and deploy larger, more complex models with less hardware and energy consumption than previously possible.

Market Impact and Industry Applications

The announcement of these chips affirms Nvidia’s continued dominance in the AI hardware market. With Blackwell Ultra arriving in 2025 and Rubin in 2026, Nvidia has established a clear roadmap for its technology leadership.

These processors will power the next generation of AI applications across industries:

- Advanced language models

- Computer vision systems

- Scientific research

- Financial modeling

- Healthcare diagnostics

For enterprises building AI infrastructure, these chips promise better performance per watt and per dollar. This efficiency could accelerate AI adoption in cost-sensitive sectors and expand the practical applications of advanced AI systems.

The staggered release schedule gives developers and organizations time to plan their AI infrastructure investments while ensuring Nvidia maintains a competitive edge against rivals entering the AI chip market.

Integration with Artificial Intelligence

Nvidia’s new Blackwell Ultra and Rubin AI chips represent a significant leap forward in AI processing capabilities, designed specifically to handle the growing demands of complex artificial intelligence workloads.

Enhanced Machine Learning Capabilities

Blackwell Ultra chips bring major improvements to machine learning tasks. These new processors can handle larger AI models with better speed and less power use. The chips work especially well with the NVL72 rack server system that holds 72 GB300 superchips.

This setup helps data centers run more efficiently while training and using complex AI models. Companies won’t need as many servers to do the same work.
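To get a rough sense of what one such rack holds, the per-chip figures quoted elsewhere in this article can be multiplied out. This is a sketch that takes the article's numbers at face value and assumes ideal scaling; Nvidia's published rack-level specifications may differ.

```python
SUPERCHIPS_PER_RACK = 72    # NVL72 rack size, as stated in this article
HBM3E_PER_CHIP_GB = 288     # memory per GB300, per this article
FP4_PFLOPS_PER_CHIP = 20    # FP4 petaflops per GB300, per this article

# Aggregate rack memory in decimal terabytes.
rack_memory_tb = SUPERCHIPS_PER_RACK * HBM3E_PER_CHIP_GB / 1000

# Aggregate peak FP4 compute in exaflops, assuming ideal linear scaling.
rack_exaflops = SUPERCHIPS_PER_RACK * FP4_PFLOPS_PER_CHIP / 1000

print(f"Rack memory: {rack_memory_tb:.1f} TB")
print(f"Rack peak FP4: {rack_exaflops:.2f} exaflops")
```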

The upcoming Rubin AI chips (expected in late 2026) will push these capabilities even further. They’re designed to work with the next generation of AI software that needs more computing power.

Both chip designs focus on speeding up tasks like natural language processing and computer vision. This means AI systems can understand text, images, and speech more accurately and respond faster.

Real-World AI Applications and Advancements

The new chips open doors for practical AI uses across many industries. In healthcare, these processors can analyze medical images and patient data faster, potentially improving diagnosis speed and accuracy.

For autonomous vehicles, Blackwell Ultra provides the computing power needed to process sensor data in real-time, making self-driving cars safer and more reliable. The improved efficiency also means these systems can run on less energy.

In content creation, the chips enable more realistic graphics rendering and better video processing. This helps game developers and film studios create more detailed virtual worlds.

Financial institutions can use these chips to analyze market trends and detect fraud patterns more quickly. The speed improvements mean they can respond to threats almost instantly.

Scientists benefit too, as complex simulations for climate models, protein folding, and drug discovery can run much faster. This could speed up research in critical areas.

Nvidia at the Forefront of Innovation

Nvidia continues to push the boundaries of AI computing with its latest chip announcements at GTC 2025. The company’s relentless drive for innovation has established it as the leader in the high-performance computing market.

Contribution to High-Performance Computing

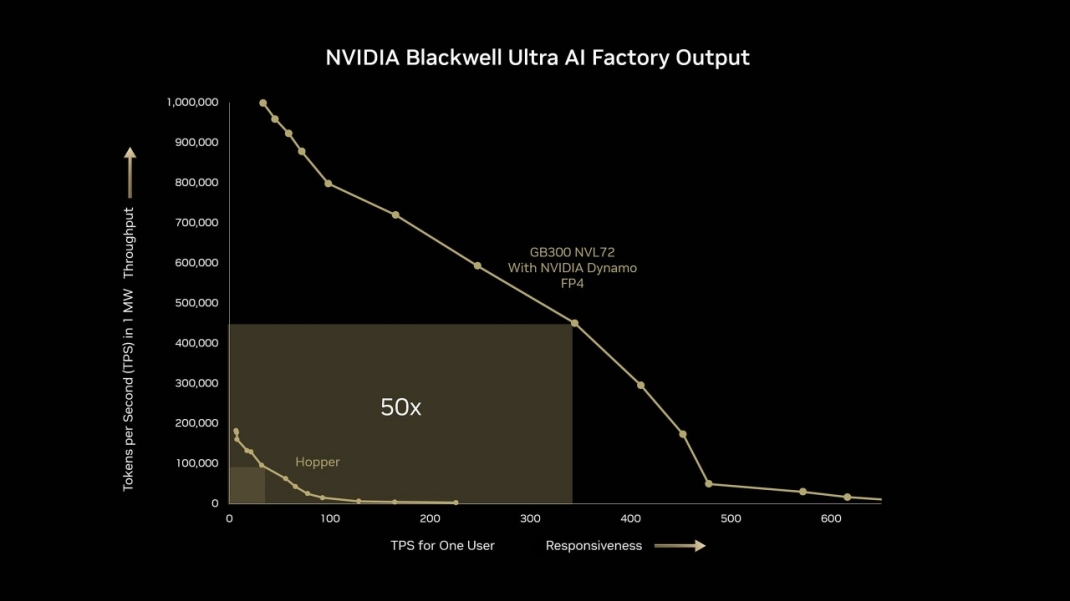

Nvidia’s new Blackwell Ultra chip represents a significant leap forward in AI processing capability. Building on the success of the original Blackwell architecture, this enhanced version delivers 1.5 times better performance, and Nvidia projects that it could drive a 50-fold increase in data center revenue opportunity. The chip uses HBM3e memory, which provides faster data access and improved bandwidth.

These advancements enable AI researchers and companies to train larger models more efficiently. Many data centers are already planning to upgrade their systems to incorporate the Blackwell Ultra chips when they become available in the second half of 2025.

Jensen Huang, Nvidia’s CEO, highlighted how these chips will power the next generation of AI applications in healthcare, autonomous vehicles, and scientific research. The demand for Nvidia’s chips continues to grow as more industries adopt AI technologies.

Future Trends and Jensen Huang’s Vision

At GTC 2025, Jensen Huang unveiled Nvidia’s roadmap for AI computing, including the upcoming Vera Rubin chip set to launch in late 2026. This next-generation architecture promises to further accelerate AI capabilities beyond what Blackwell Ultra delivers.

The Rubin family will include specialized GPUs and other processors optimized for different AI workloads. This strategy reflects Huang’s vision of creating an entire ecosystem of AI computing rather than just standalone products.

Despite growing competition in the GPU space, Huang remains confident in Nvidia’s lead. He emphasized that Nvidia’s advantage comes not just from hardware but from its software ecosystem and developer tools that make its chips more accessible and useful.

Analysts note that Nvidia’s consistent innovation cycle allows companies to plan their AI strategies with more certainty, knowing that more powerful tools are on a predictable release schedule.

Frequently Asked Questions

Nvidia’s new AI chips represent significant technological advances with specific improvements in performance, energy efficiency, and AI capabilities. These chips are poised to transform various industries through their enhanced computing power.

What improvements do the Blackwell Ultra and Rubin AI chips offer over previous generations?

The Blackwell Ultra GPU delivers substantially better AI inferencing capabilities compared to previous generations. This means faster processing of AI models once they’re trained.

Rubin, Nvidia’s next-generation AI chip scheduled after Blackwell Ultra, will offer even greater performance improvements. The Rubin Ultra variant will effectively combine two Rubin GPUs, doubling performance to reach 100 petaflops.

These new chips continue Nvidia’s pattern of significant performance jumps between generations while improving energy efficiency.
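The doubling described above is simple to verify from the per-chip figures in this article. The sketch below is illustrative only; the constant names are assumptions, and the comparison against Blackwell Ultra uses the 20-petaflop figure quoted earlier.

```python
RUBIN_PFLOPS = 50              # FP4 petaflops for Vera Rubin, per this article
GPUS_COMBINED = 2              # Rubin Ultra pairs two Rubin GPUs
BLACKWELL_ULTRA_PFLOPS = 20    # FP4 petaflops for Blackwell Ultra, per this article

rubin_ultra_pflops = RUBIN_PFLOPS * GPUS_COMBINED
vs_blackwell_ultra = rubin_ultra_pflops / BLACKWELL_ULTRA_PFLOPS

print(f"Rubin Ultra: {rubin_ultra_pflops} petaflops FP4")
print(f"Per-chip uplift over Blackwell Ultra: {vs_blackwell_ultra:.1f}x")
```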

What are the expected applications and use cases for the new Rubin and Blackwell chips?

These advanced chips will power next-generation AI models that require massive computational resources. They’re designed for data centers and enterprise applications that run complex AI workloads.

The improved performance makes them suitable for training and running larger language models, computer vision systems, and scientific simulations. Financial services, healthcare diagnostics, autonomous vehicle systems, and advanced research facilities will likely be primary adopters.

Cloud service providers will also implement these chips to offer enhanced AI capabilities to their customers.

How do the performance metrics of Blackwell Ultra compare to its competitors?

Blackwell Ultra represents Nvidia’s continued leadership in the AI chip space. While specific comparative benchmarks have not been published, Nvidia has historically maintained performance advantages over competitors.

The chip focuses on AI inferencing, which suggests optimizations for running trained AI models efficiently. This addresses a critical industry need as deployment of AI systems grows.

Competitors like AMD and Intel have their own AI accelerators, but Nvidia’s software ecosystem and optimization tools give its hardware additional advantages beyond raw specifications.

What is the anticipated impact of Nvidia’s Blackwell Ultra and Rubin AI chips on the AI and machine learning industry?

These chips will enable more complex AI models to run efficiently, potentially accelerating advancements in natural language processing, image recognition, and other AI fields. This could lead to more capable AI assistants and automation tools.

The increased computing power may allow researchers to tackle previously unsolvable problems in fields like drug discovery, climate modeling, and materials science. Companies will be able to deploy more sophisticated AI systems at scale.

These chips further cement Nvidia’s position as a critical infrastructure provider for the AI industry, potentially influencing how AI development progresses in the coming years.

What are the differences between the new Blackwell Ultra and Rubin AI chips?

Blackwell Ultra is Nvidia’s next iteration after the current Blackwell architecture, scheduled for release in the second half of 2025. Rubin represents the following generation, expected to launch in late 2026.

Rubin will likely offer significant performance improvements over Blackwell Ultra, following Nvidia’s typical generational advances. The Rubin Ultra variant will double performance by connecting two Rubin GPUs, reaching 100 petaflops.

Each generation typically brings both architectural improvements and enhanced efficiency, though specific technical differences between the chips haven’t been fully detailed publicly.

When can consumers and enterprises expect the Rubin AI chip to be commercially available?

According to Nvidia’s roadmap, the Rubin AI chip is expected to launch in late 2026, after Blackwell Ultra becomes available in the second half of 2025.

The Rubin Ultra variant, which combines two Rubin GPUs for double the performance, will likely follow the base Rubin release. Exact availability will depend on manufacturing capacity and initial production volumes.

Enterprise customers and data centers will likely get priority access, with wider availability expanding as production ramps up.