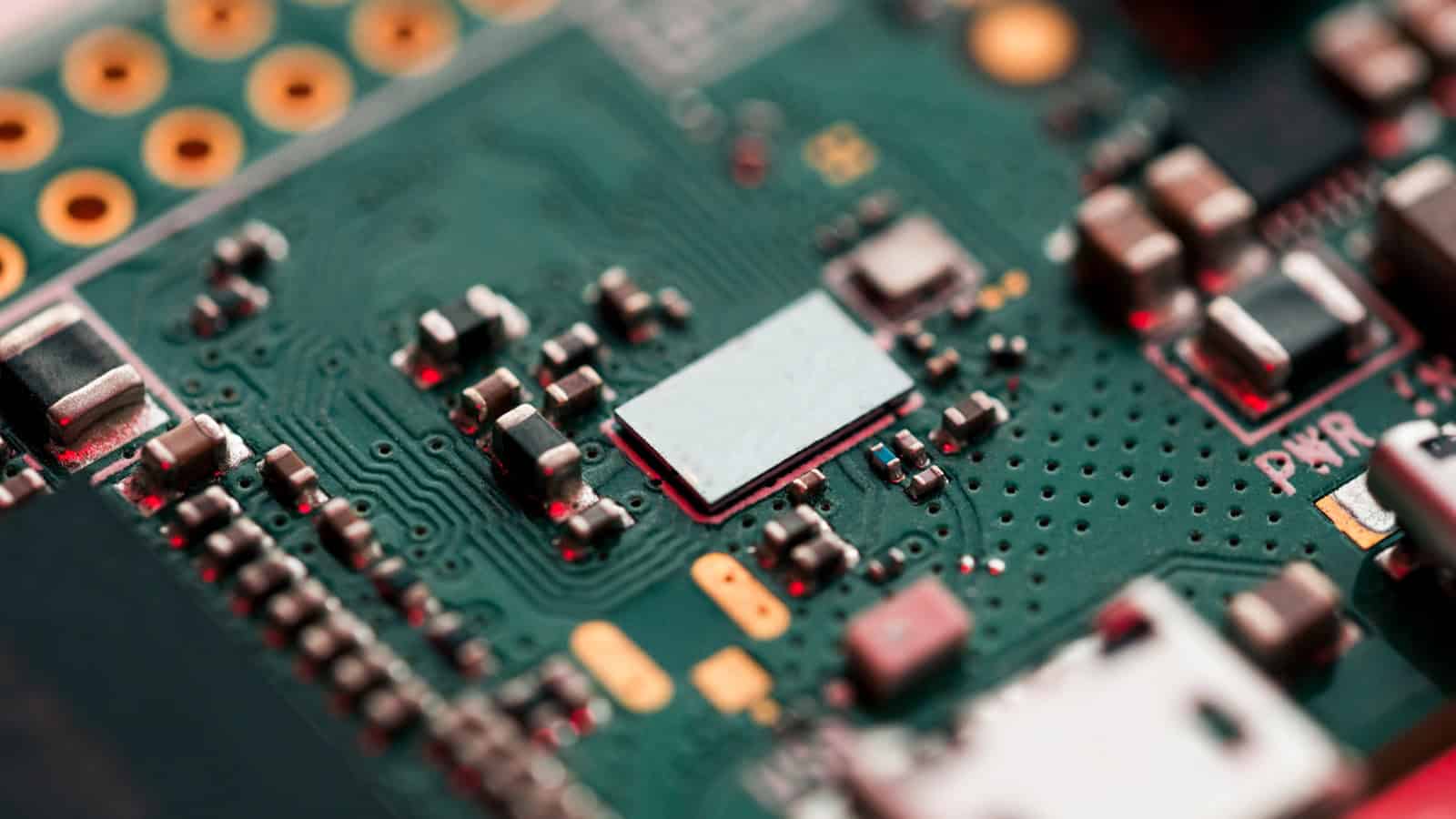

Cache is a high-speed data storage layer that keeps frequently accessed information readily available. It speeds up data retrieval and system performance by storing copies of data from main memory or external storage. Cache exists in various forms, from hardware-level caches inside CPUs to software caches in applications and web browsers.

Different types of cache serve distinct purposes. L1, L2, and L3 caches are integrated into processors, with L1 being the smallest and fastest. Disk caches buffer read and write operations to improve storage performance. Web browsers use caches to store website data locally, reducing load times on subsequent visits.

Cache management techniques optimize data storage and retrieval. These include direct mapping, set-associative mapping, and fully associative mapping. Each method balances speed, complexity, and resource usage to suit specific system requirements.

Unlocking the Speed: Understanding Cache and Its Types

Ever wondered how your computer accesses information so quickly? It’s not just about the processor’s speed. A lot of the magic happens thanks to something called “cache.” Think of cache as a super-fast, temporary storage area that holds the data your computer uses most often. This allows your CPU (central processing unit) to access that data much faster than if it had to retrieve it from the main memory (RAM).

Different Levels of Cache

Cache is organized in levels, each with its own speed and purpose. Here’s a breakdown of the most common cache levels:

- L1 Cache (Level 1): This is the smallest and fastest cache. It’s built directly into the CPU and stores the data the processor is most likely to need next. L1 cache is often split into two parts: one for instructions and one for data.

- L2 Cache (Level 2): L2 cache is larger and slightly slower than L1 cache. It acts as a buffer between L1 cache and the main memory. If the CPU can’t find the data it needs in L1 cache, it checks L2.

- L3 Cache (Level 3): This is the largest and slowest of the main cache levels. It’s often shared between multiple CPU cores and acts as a final layer of cache before accessing the main memory. L3 cache helps reduce the need for the CPU to access the slower main memory, improving overall performance.
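To make the "check L1, then L2, then L3" order concrete, here is a minimal sketch of a lookup walking down the levels. The latency numbers and the `lookup` helper are assumptions made up for illustration; real figures differ from one processor to the next.

```python
# Hypothetical latencies in CPU cycles; real values vary by processor.
LEVELS = [("L1", 4), ("L2", 12), ("L3", 40)]
MEMORY_LATENCY = 200

def lookup(address, caches):
    """Return (where_found, total_cycles) for a single access.

    `caches` maps a level name to the set of addresses it currently holds.
    """
    cycles = 0
    for name, latency in LEVELS:
        cycles += latency              # pay the cost of checking this level
        if address in caches[name]:
            return name, cycles
    return "RAM", cycles + MEMORY_LATENCY

caches = {"L1": {0x10}, "L2": {0x10, 0x20}, "L3": {0x10, 0x20, 0x30}}
print(lookup(0x10, caches))  # found in L1
print(lookup(0x30, caches))  # found in L3 after missing L1 and L2
print(lookup(0x40, caches))  # misses every level and goes to main memory
```

The further down the hierarchy a request travels, the more cycles it costs, which is why keeping hot data in the upper levels matters.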

Specialized Types of Cache

Beyond the main levels, there are other types of cache designed for specific purposes:

- 3D V-Cache: This is a newer technology from AMD that stacks extra cache memory vertically on top of the CPU die. It dramatically increases the amount of L3 cache available, leading to significant performance gains in certain applications, especially gaming.

- GPU Cache: Graphics cards (GPUs) also have their own cache memory to store textures, geometry, and other data needed for rendering graphics. This helps speed up graphics processing and improve frame rates in games.

- Web Cache: Your web browser uses a cache to store website data like images, scripts, and stylesheets. This allows your browser to load websites faster on subsequent visits, as it doesn’t have to download everything again.

- Disk Cache: Hard drives and solid-state drives (SSDs) also have a built-in cache. This cache stores frequently accessed data from the drive, allowing for faster read and write speeds.

Cache Types: A Quick Summary

| Cache Type | Location | Speed | Purpose |
|---|---|---|---|
| L1 | CPU core | Fastest | Stores most frequently used data |
| L2 | CPU core or shared | Very fast | Buffer between L1 and main memory |
| L3 | Shared between cores | Fast | Last cache level before main memory |
| 3D V-Cache | Stacked on CPU | Fast | Dramatically increases L3 cache size |
| GPU Cache | Graphics card | Varies | Stores graphics data |
| Web Cache | Web browser | Varies | Stores website data |
| Disk Cache | Hard drive/SSD | Varies | Stores frequently accessed data from the drive |

Cache plays a crucial role in computer performance. By understanding the different types of cache and how they work, you can better appreciate how your devices access and process information so quickly.

Key Takeaways

- Cache speeds up data access by storing frequently used information

- Multiple cache types exist, from CPU-level to application-specific caches

- Effective cache management is crucial for optimizing system performance

Fundamentals of Cache

Have you ever wondered how your computer can access information so quickly? It’s not just about having a fast processor. There’s a secret ingredient called “cache” that plays a big role in speeding things up. Cache is like a special storage area that keeps the most important information close at hand, so your computer doesn’t have to go searching for it every time. Let’s explore what cache is and the different types that help your devices run smoothly.

Cache is a crucial component in modern computing systems. It improves performance by storing frequently accessed data for quick retrieval.

Defining Cache in Computing

Cache is a high-speed memory that stores recently used data and instructions. It sits between the CPU and main memory, reducing access times. The primary goal of cache is to bridge the speed gap between fast processors and slower main memory.

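Cache operates on the principle of locality, which comes in two forms. Temporal locality means data and instructions that were used recently are likely to be used again soon. Spatial locality means data and instructions with addresses close to each other tend to be referenced closely together in time. By keeping this information readily available, cache significantly reduces latency.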

The effectiveness of cache is measured by its hit ratio: the fraction of all accesses that are served from the cache. A cache hit occurs when requested data is found in the cache. A cache miss happens when data must be fetched from slower memory.
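As a rough illustration, the hit ratio is simply hits divided by total accesses. The sketch below also applies the common textbook formula for average access time (hit time plus miss rate times miss penalty), which is not spelled out in this article. The function names and sample numbers are assumptions chosen for the example.

```python
def hit_ratio(hits, misses):
    """Fraction of accesses served from the cache."""
    total = hits + misses
    return hits / total if total else 0.0

def average_access_time(hit_time, miss_penalty, ratio):
    # Every access pays hit_time; misses additionally pay miss_penalty.
    return hit_time + (1.0 - ratio) * miss_penalty

# Made-up numbers: 950 hits, 50 misses, 1-cycle hit, 100-cycle miss penalty.
ratio = hit_ratio(hits=950, misses=50)
print(ratio)
print(average_access_time(hit_time=1.0, miss_penalty=100.0, ratio=ratio))
```

Even with a 95% hit ratio, the occasional miss dominates the average in this example, which is why small improvements in hit ratio can pay off noticeably.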

Hierarchy and Types

Cache memory is organized in a hierarchy. This structure balances speed, size, and cost considerations.

L1 cache is the smallest and fastest. It’s typically split into separate instruction and data caches. L1 cache is integrated directly into the CPU core.

L2 cache is larger but slightly slower than L1. It may be shared among multiple CPU cores or dedicated to individual cores. L2 cache backs up L1: when a lookup misses in L1, the CPU checks L2 next, which can hold data that doesn’t fit in the primary cache.

L3 cache is the largest and slowest in the hierarchy. It’s typically shared among the cores in multi-core processors. L3 cache serves as a final on-chip cache before accessing main memory.

Other types of cache include:

- Instruction cache: Stores CPU instructions

- Data cache: Holds data for quick access

- Translation Lookaside Buffer (TLB): Caches virtual-to-physical address translations

These various cache types work together to optimize system performance and reduce memory latency.

Cache Implementation and Management

Cache implementation and management involve various techniques and policies to optimize data access and improve system performance. These strategies impact application performance and resource utilization across different computing environments.

Caching Techniques and Policies

Write policies decide when changes reach main memory. Write-back updates the cache immediately but delays writing to main memory, usually until the modified line is evicted, while write-through updates both at the same time. Cache mapping techniques determine where a block of memory may be stored in the cache and how it is found again.
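Here is a minimal sketch of the difference between the two write policies, using a plain dictionary as a stand-in for main memory. The class names, the `dirty` set, and the explicit `flush` method are assumptions for illustration; real hardware tracks a dirty bit per cache line and writes back on eviction.

```python
class WriteThroughCache:
    def __init__(self, memory):
        self.memory = memory           # backing store, a plain dict here
        self.lines = {}

    def write(self, address, value):
        self.lines[address] = value
        self.memory[address] = value   # memory is updated on every write


class WriteBackCache:
    def __init__(self, memory):
        self.memory = memory
        self.lines = {}
        self.dirty = set()             # addresses modified but not written out

    def write(self, address, value):
        self.lines[address] = value
        self.dirty.add(address)        # defer the memory update

    def flush(self):
        """Write all modified entries back to memory."""
        for address in self.dirty:
            self.memory[address] = self.lines[address]
        self.dirty.clear()


ram = {}
cache = WriteBackCache(ram)
cache.write(0x10, 7)
print(0x10 in ram)   # memory has not seen the write yet
cache.flush()
print(ram[0x10])     # now it has
```

Write-back saves memory traffic when the same location is written repeatedly, at the cost of memory being temporarily out of date.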

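Direct mapping assigns each memory block to exactly one cache line. Fully associative mapping allows any block to be placed in any cache line. Set-associative mapping combines these approaches: the cache is divided into sets, each block maps to exactly one set, and the block can occupy any line within that set.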
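The three mapping schemes can be sketched as simple arithmetic on the memory address. The block size, line count, and function names below are assumptions picked for the example, not values from any particular processor.

```python
BLOCK_SIZE = 64    # bytes per cache line (assumed)
NUM_LINES = 256    # total lines in the cache (assumed)

def direct_mapped_line(address):
    """Direct mapping: each block has exactly one possible line."""
    block = address // BLOCK_SIZE
    return block % NUM_LINES

def set_associative_set(address, ways):
    """Set-associative mapping: the block may use any of `ways` lines in one set."""
    num_sets = NUM_LINES // ways
    block = address // BLOCK_SIZE
    return block % num_sets

address = 0x12345
conflicting = address + BLOCK_SIZE * NUM_LINES   # exactly one cache size further on

# Both addresses compete for the same line in a direct-mapped cache.
print(direct_mapped_line(address), direct_mapped_line(conflicting))

# In a 4-way set-associative cache they share a set but can occupy different lines.
print(set_associative_set(address, ways=4), set_associative_set(conflicting, ways=4))
```

In this sketch, `ways=1` behaves like direct mapping and `ways=NUM_LINES` leaves a single set, which is the fully associative case.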

Replacement policies decide which data to remove when the cache is full. The Least Recently Used (LRU) policy is common: it removes the entry that has gone the longest without being accessed.
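A small software LRU cache might look like the sketch below. It uses Python's `collections.OrderedDict` to track access order; the `LRUCache` class and its method names are assumptions for this example, and hardware caches usually approximate LRU rather than tracking it exactly.

```python
from collections import OrderedDict

class LRUCache:
    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()   # oldest access first, newest last

    def get(self, key):
        if key not in self.entries:
            return None                       # cache miss
        self.entries.move_to_end(key)         # mark as most recently used
        return self.entries[key]

    def put(self, key, value):
        if key in self.entries:
            self.entries.move_to_end(key)
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")        # "a" is now more recent than "b"
cache.put("c", 3)     # cache is full, so "b" is evicted
print(list(cache.entries))  # ['a', 'c']
```

Note that "b" is evicted even though it was added after "a": what counts is how recently each entry was used, not when it was inserted or how often it was accessed.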

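Cache line (block) size also affects performance. Larger blocks take better advantage of spatial locality, but each miss transfers more data, which can increase the miss penalty, and a cache of fixed size holds fewer of them.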
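To see the spatial-locality side of this trade-off, the sketch below counts misses for a sequential scan through memory using a toy direct-mapped cache. The `count_misses` function, the 1 KiB cache size, and the access pattern are all assumptions for illustration.

```python
def count_misses(addresses, block_size, cache_bytes=1024):
    """Count misses in a toy direct-mapped cache of `cache_bytes` bytes."""
    num_lines = cache_bytes // block_size
    lines = [None] * num_lines       # each slot remembers which block it holds
    misses = 0
    for address in addresses:
        block = address // block_size
        slot = block % num_lines
        if lines[slot] != block:
            misses += 1
            lines[slot] = block      # fetch the whole block on a miss
    return misses

sequential = range(0, 4096, 4)       # walk 4 KiB in 4-byte steps
for size in (16, 64, 256):
    print(size, count_misses(sequential, size))
```

For this access pattern, bigger blocks mean fewer misses because each fetch brings in more of the data that is about to be read. The model does not capture the cost side: every one of those misses moves more bytes, and access patterns that jump around memory benefit far less.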

Application and Performance Impact

Caching significantly improves application performance by reducing data access times. It leverages the principle of locality of reference, where recently used data is likely to be used again soon.

Faster data access leads to quicker response times and improved user experience. This is especially crucial for data-intensive applications and web services.

Caching enhances scalability by reducing the load on backend systems. It allows applications to handle more concurrent users without proportional increases in server resources.

However, cache management adds complexity to system design. Developers must consider data consistency, cache invalidation, and synchronization issues.

Browser and Web Caching

Web browsers implement local caches to store frequently accessed resources. This reduces network traffic and improves page load times.

Browser caches typically store HTML pages, images, and other static content. When a user revisits a website, the browser can load cached resources instead of requesting them from the server.

Web caching also occurs at various points in the network, including content delivery networks (CDNs) and proxy servers. These caches serve content closer to the user, reducing latency.

DNS caching stores the IP addresses of recently resolved domain names for a limited time, speeding up subsequent requests to the same websites.
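The idea behind a DNS cache can be sketched as a lookup table whose entries expire after a time-to-live (TTL). The `TTLCache` class below is a hypothetical illustration, not how any specific resolver is implemented; real DNS records carry their own TTL, whereas this sketch uses a single value for every entry. The domain and address are documentation placeholders.

```python
import time

class TTLCache:
    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock             # injectable so expiry can be tested
        self.entries = {}              # name -> (address, expiry time)

    def get(self, name):
        entry = self.entries.get(name)
        if entry is None:
            return None                # never resolved, or already dropped
        address, expires_at = entry
        if self.clock() >= expires_at:
            del self.entries[name]     # stale entry: force a fresh lookup
            return None
        return address

    def put(self, name, address):
        self.entries[name] = (address, self.clock() + self.ttl)


dns_cache = TTLCache(ttl_seconds=300)
dns_cache.put("example.com", "192.0.2.1")
print(dns_cache.get("example.com"))    # served from the cache while still fresh
```

Once the TTL passes, `get` returns `None` and the caller would have to resolve the name again, which keeps cached answers from going permanently out of date.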

Caching of Static Resources

Static resources like images, CSS files, and JavaScript are prime candidates for caching. They change infrequently and are often shared across multiple pages.

Content providers use HTTP cache headers, such as Cache-Control and Expires, to control how long resources should be cached. This balances freshness with performance benefits.

CDNs distribute static content across global networks of servers. This reduces the load on origin servers and improves access times for users worldwide.

Caching static resources reduces bandwidth usage and server load. It also improves website performance, especially for repeat visitors.

Proper cache management of static resources involves setting appropriate expiration times and using a versioning strategy, such as putting a version number or content hash in the file name, to ensure users receive updated content when necessary.
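One common way to combine long expiration times with reliable updates is to put a hash of the file's content into its name, so a changed file gets a new URL. The sketch below shows the idea. The helper names `versioned_name` and `cache_headers` and the file path are assumptions for illustration; `Cache-Control`, `max-age`, and `immutable` are standard HTTP names, but how they are set depends on your server or CDN.

```python
import hashlib
from pathlib import PurePosixPath

def versioned_name(path, content):
    """Insert a short content hash before the file extension."""
    digest = hashlib.sha256(content).hexdigest()[:8]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))

def cache_headers(max_age_seconds, immutable=False):
    """Build a Cache-Control header for a static resource."""
    value = f"public, max-age={max_age_seconds}"
    if immutable:
        value += ", immutable"
    return {"Cache-Control": value}

old = versioned_name("static/app.css", b"body { color: black; }")
new = versioned_name("static/app.css", b"body { color: navy; }")
print(old)
print(new)   # different content produces a different file name

# Hashed files never change under the same name, so they can be cached for a year.
print(cache_headers(31536000, immutable=True))
```

Because the HTML page references the new file name after each change, browsers fetch the updated file right away while still caching unchanged files for as long as the header allows.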