Artificial Intelligence (AI) is reshaping industries worldwide, from healthcare and finance to gaming and automation. AI workloads are computationally demanding, however, and this is where AI accelerator cards come in: specialized hardware designed to make machine learning tasks such as neural network training and inference significantly faster and more efficient.

Unlike standard CPUs, which devote a handful of cores to largely sequential work, AI accelerators are built for parallel computation and handle thousands of calculations at once. They reduce training times for AI models, improve inference speeds, and can lower power consumption per operation.

Types of AI Accelerator Cards

AI accelerators come in different forms, each catering to specific AI workloads. The three most common types are GPUs (Graphics Processing Units), TPUs (Tensor Processing Units), and FPGAs (Field-Programmable Gate Arrays).

GPUs (Graphics Processing Units)

Originally designed for rendering graphics, GPUs have become a powerhouse for AI workloads due to their ability to process thousands of computations simultaneously. Their massively parallel architecture makes them ideal for training deep learning models.

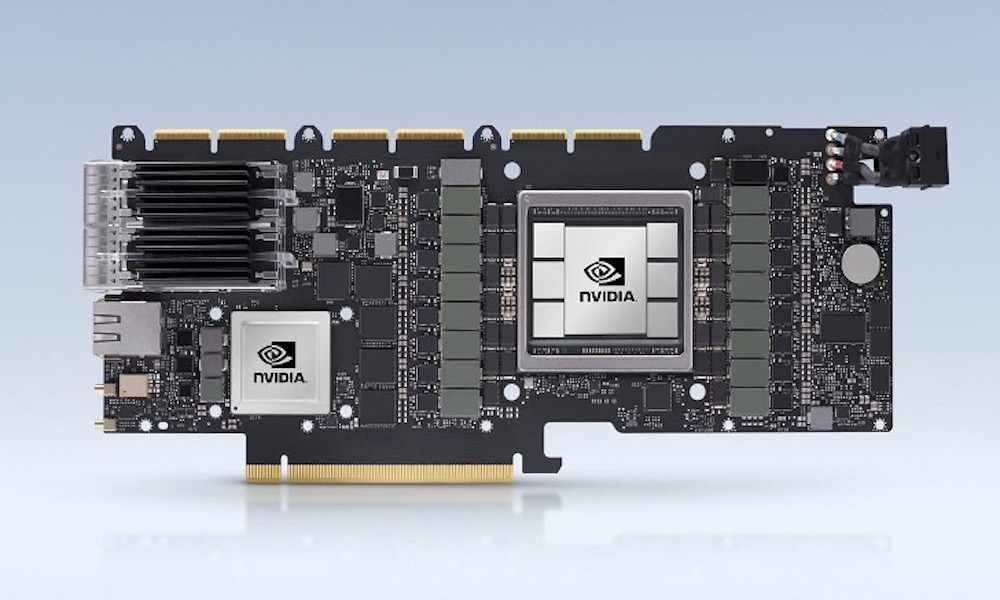

- NVIDIA dominates the AI GPU market with its consumer RTX cards and its data center GPUs (formerly sold under the Tesla brand). The NVIDIA A100 is widely used in data centers, while the RTX 4090 offers a balance of AI and gaming performance for individual users.

- AMD’s Instinct MI250X is another strong contender, providing high computational power for AI workloads while supporting frameworks like TensorFlow and PyTorch.

TPUs (Tensor Processing Units)

Developed by Google, TPUs are engineered specifically for AI and machine learning tasks, focusing on matrix multiplications, which are fundamental in deep learning.

- Unlike GPUs, TPUs are not typically sold as standalone hardware. Instead, they are available through Google Cloud, making high-performance AI computing accessible without expensive upfront investments.

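- TPU v4 offers substantial performance improvements over earlier generations, excelling in both training and inference for deep learning models. Google has since released newer TPU generations, so check Google Cloud for current availability.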

FPGAs (Field-Programmable Gate Arrays)

FPGAs provide programmable flexibility, allowing them to be customized for specific AI workloads. They are particularly valuable for low-latency inference in real-time AI applications.

- Intel’s Agilex FPGAs are widely used for AI acceleration, and Microsoft deploys FPGAs inside Azure for the same purpose, particularly in cloud services.

- Unlike GPUs and TPUs, FPGAs require specialized programming knowledge, making them less user-friendly but highly customizable for unique AI solutions.

Key Factors to Consider When Choosing an AI Accelerator

When selecting an AI accelerator, several crucial factors impact performance and efficiency:

Processing Power

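AI accelerator throughput is usually quoted in TFLOPS (trillion floating-point operations per second) or, for integer inference hardware, in TOPS (trillion operations per second). Higher ratings generally mean faster computations, but only figures quoted at the same numeric precision are comparable. For instance: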

- NVIDIA A100 can achieve over 300 TFLOPS in FP16 or BF16 Tensor Core operations (its standard FP32 rate is about 19.5 TFLOPS), making it a top choice for high-performance AI computing.

- Google TPU v4 is optimized for tensor calculations, outperforming many GPUs for specialized AI workloads.
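To give a feel for what a TFLOPS rating means in practice, the sketch below estimates the best-case time for one large matrix multiplication at a given peak throughput. The function names, the matrix size, and the 20 TFLOPS comparison device are illustrative assumptions. Real workloads rarely sustain peak throughput, so treat the result as a lower bound on run time.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product: one multiply and one add per term."""
    return 2 * m * k * n


def ideal_seconds(flops: float, peak_tflops: float) -> float:
    """Best-case run time if the device sustained its peak rating."""
    return flops / (peak_tflops * 1e12)


if __name__ == "__main__":
    flops = matmul_flops(8192, 8192, 8192)  # hypothetical layer size
    for label, peak in [("300 TFLOPS", 300.0), ("20 TFLOPS (hypothetical)", 20.0)]:
        print(f"{label}: {ideal_seconds(flops, peak) * 1e3:.2f} ms")
```

The gap between the two results scales directly with the ratio of the ratings, which is why the precision behind a quoted TFLOPS figure matters when comparing cards.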

Memory and Bandwidth

Memory plays a critical role in handling large datasets and complex AI models.

- High-end accelerators offer 24GB to 80GB of memory.

- Memory bandwidth, measured in GB/s or TB/s, affects how quickly data can be transferred between the processor and memory. Faster bandwidth ensures reduced bottlenecks.
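As a rough sizing aid, the following sketch estimates how much memory a model's weights need at different precisions, whether they fit on a card, and how long one pass over the weights takes at a given memory bandwidth. The 7-billion-parameter model, the 20% headroom, and the 1 TB/s bandwidth are assumptions chosen for illustration, not figures for any specific product.

```python
# Bytes per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gb(num_params: float, dtype: str) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(num_params: float, dtype: str, card_gb: float, headroom: float = 0.2) -> bool:
    """True if the weights fit with a fraction `headroom` of memory left free.

    The 20% default is an arbitrary allowance for activations and buffers.
    """
    return weights_gb(num_params, dtype) <= card_gb * (1.0 - headroom)


def stream_seconds(gigabytes: float, bandwidth_gb_per_s: float) -> float:
    """Best-case time to read that much data from device memory once."""
    return gigabytes / bandwidth_gb_per_s


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model
    for dtype in ("fp32", "fp16", "int8"):
        size = weights_gb(params, dtype)
        verdict = "fits in 24GB" if fits(params, dtype, 24) else "needs a larger card"
        per_pass_ms = stream_seconds(size, 1000.0) * 1e3  # assumed 1 TB/s
        print(f"{dtype}: {size:.0f} GB, {verdict}, {per_pass_ms:.0f} ms per pass")
```

Training needs considerably more memory than this estimate, because gradients and optimizer state are stored alongside the weights.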

Energy Efficiency

AI accelerators consume substantial power, especially for enterprise-scale applications.

- Power consumption varies from a few watts (the Hailo-8 edge modules reviewed below draw about 2.5W) to 400W+ (high-end GPUs).

- Dynamic power scaling allows accelerators to adjust energy usage based on workload demands, improving efficiency.
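Efficiency is easier to compare as throughput per watt. The sketch below does this arithmetic using the vendor-quoted figures from the reviews later in this article. The labels are shorthand, and the quoted numbers are peak ratings rather than measurements, so the ratios are only indicative.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Peak throughput divided by power draw."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return tops / watts


# (peak TOPS, watts) as quoted in the product reviews in this article.
QUOTED = {
    "Hailo-8 M.2 module": (26.0, 2.5),
    "8x Edge TPU PCIe card, low end of range": (32.0, 36.0),
    "8x Edge TPU PCIe card, high end of range": (32.0, 52.0),
}

if __name__ == "__main__":
    for name, (tops, watts) in QUOTED.items():
        print(f"{name}: {tops_per_watt(tops, watts):.2f} TOPS/W")
```

A board-level power figure may include fans and supporting circuitry, so a per-chip rating and a whole-card rating are not strictly like for like.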

Cost and Value

Pricing varies based on performance needs:

- Budget AI accelerators start at $500, suitable for hobbyists and small projects.

- Enterprise AI accelerators can exceed $10,000, offering unmatched performance for large-scale machine learning.

- Cloud AI acceleration (AWS, Google Cloud, Microsoft Azure) allows pay-as-you-go usage, reducing initial investment costs.
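One simple way to weigh buying against renting is a break-even calculation. The sketch below divides a purchase price by an hourly cloud rate. The $3 per hour rate is a made-up placeholder rather than a quote from any provider, and the calculation ignores electricity, hosting, and resale value.

```python
def break_even_hours(card_price: float, cloud_rate_per_hour: float) -> float:
    """Hours of cloud use that cost as much as buying the card outright."""
    if cloud_rate_per_hour <= 0:
        raise ValueError("cloud rate must be positive")
    return card_price / cloud_rate_per_hour


if __name__ == "__main__":
    hours = break_even_hours(10_000, 3.0)  # hypothetical $3/hour instance
    print(f"Break-even after about {hours:.0f} hours ({hours / 24:.0f} days of continuous use)")
```

If your expected usage is well below the break-even figure, pay-as-you-go is likely the cheaper route.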

Best AI Accelerator Cards for Different Needs

To help you find the right AI accelerator, we’ve ranked the top options based on their performance, efficiency, and use case.

Final Thoughts

AI accelerator cards are essential for anyone serious about AI computing. Whether you’re training deep learning models, optimizing inference, or running AI at the edge, choosing the right accelerator can dramatically impact your workflow.

For high-performance AI training, NVIDIA’s A100 and Google’s TPU v4 remain dominant choices. For efficient edge AI applications, options like Seeed Studio Coral M.2 and Waveshare Hailo-8 provide outstanding performance with lower power consumption.

Before purchasing, consider your budget, workload type, power efficiency needs, and software compatibility. With the right AI accelerator, you can unlock new possibilities in machine learning and artificial intelligence.

Best AI Accelerator Cards

AI accelerator cards boost your system’s ability to run complex artificial intelligence tasks. These special cards handle the heavy math needed for AI workloads much faster than regular CPUs. Our list includes top options for different budgets and needs, from powerful enterprise-grade accelerators to more affordable choices for home use.

youyeetoo AI Accelerator Card

This PCIe-based AI accelerator delivers impressive edge computing power for businesses needing real-time AI processing without the cloud.

Pros

- Powerful 32TOPS processing with 8 Edge TPU processors

- Low power consumption (36-52 watts) compared to GPU solutions

- Supports multiple AI analytics running in parallel

Cons

- Requires TensorFlow Lite knowledge to utilize effectively

- Limited documentation available for beginners

- Higher price point than entry-level AI acceleration options

The youyeetoo AI Accelerator Card offers serious processing power for edge AI applications. With its PCIe Gen3 x16 interface, this card plugs into standard server and workstation slots to add dedicated AI processing capabilities. The 8 Google Coral Edge TPU processors work together to deliver up to 32 TOPS (Trillion Operations Per Second) of AI computing power.

What sets this card apart is its ability to handle AI processing locally without needing constant cloud connectivity. This makes it ideal for applications where privacy, security, or low latency is a critical requirement. The card’s ASICs are optimized for AI inference rather than training, focusing resources on deployment efficiency.

Power efficiency is another strong point. The card consumes only 36-52 watts during operation, significantly less than GPU-based alternatives that often require 200+ watts. Twin turbofans keep the components cool during intensive processing while maintaining the compact PCIe card form factor. Companies looking to deploy multiple AI models simultaneously will appreciate the parallel processing capabilities built into the design.

Edge computing environments like manufacturing, retail analytics, and security monitoring can benefit from this accelerator. The card supports TensorFlow Lite, making it compatible with many existing AI models with minimal conversion work. For organizations already invested in the Google Coral ecosystem, this multi-TPU solution provides a natural scaling path for more demanding workloads.

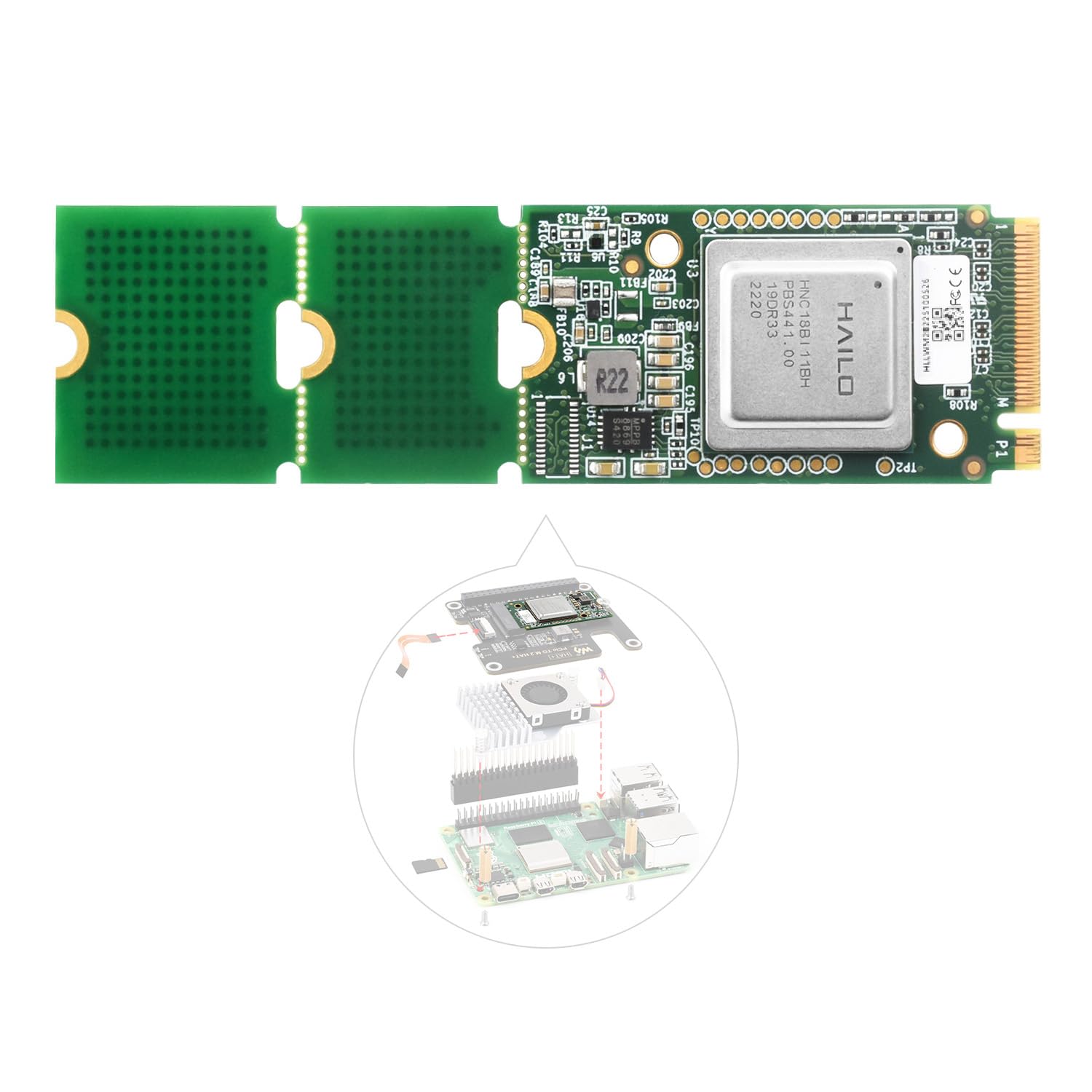

Seeed Studio Coral M.2 AI Accelerator

The Seeed Studio Coral M.2 Accelerator is a solid investment for developers who need fast, power-efficient AI inferencing at the edge without huge power demands.

Pros

- Impressive 4 TOPS performance with very low power usage

- Compatible with existing TensorFlow Lite models

- Works with any Debian-based Linux system

Cons

- Installation can be tricky on some systems

- Limited to TensorFlow Lite models only

- Not suitable for large AI models or LLMs

The Coral M.2 Accelerator brings powerful AI capabilities to edge devices. This tiny card packs Google’s Edge TPU technology, allowing it to run machine learning tasks locally instead of in the cloud. It fits into any compatible M.2 B+M key slot on your motherboard or single-board computer.

Speed is where this accelerator really shines. It can process machine vision models like MobileNet v2 at 400 frames per second while using minimal power. This makes it perfect for projects that need real-time image recognition or object detection without draining batteries or raising electricity bills.

Many users find this card valuable for home security systems and NVR (Network Video Recorder) setups. The accelerator offloads AI processing from the main CPU, making systems more stable and responsive. For developers already familiar with TensorFlow Lite, the transition is smooth since existing models can be compiled to run on the Edge TPU.

Setting up the card requires some technical knowledge. While it works with any Debian-based Linux system, installing drivers can sometimes be challenging. The card works best for specific use cases like computer vision rather than general AI tasks or large language models.
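For readers who want to see what running a model on an Edge TPU looks like, here is a minimal sketch using the `tflite_runtime` package with the Edge TPU delegate. It assumes the Edge TPU runtime is installed on Linux, that the model has already been compiled for the Edge TPU, and that the input array matches the model's input shape and dtype. The `classify` and `top_k` helpers and the file name in the usage note are hypothetical.

```python
def top_k(scores, k=3):
    """Return the k (index, score) pairs with the highest scores."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def classify(model_path, image):
    """Run one inference on an Edge TPU and return the top-scoring classes.

    `model_path` must point to a model compiled for the Edge TPU, and `image`
    must be a NumPy array with a leading batch dimension of 1 that matches
    the model's input shape and dtype.
    """
    # Imported lazily so top_k() works on machines without the runtime.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    interpreter = Interpreter(
        model_path=model_path,
        # Shared library name of the Edge TPU runtime on Linux.
        experimental_delegates=[load_delegate("libedgetpu.so.1")],
    )
    interpreter.allocate_tensors()

    input_index = interpreter.get_input_details()[0]["index"]
    output_index = interpreter.get_output_details()[0]["index"]

    interpreter.set_tensor(input_index, image)
    interpreter.invoke()

    # Drop the batch dimension and rank the class scores.
    scores = interpreter.get_tensor(output_index)[0]
    return top_k(scores.tolist())
```

A call might look like `classify("mobilenet_v2_edgetpu.tflite", image)`, where the file name stands in for whatever compiled model you are using.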

youyeetoo AI Accelerator Card

This AI accelerator card delivers impressive edge computing power for businesses that need real-time AI processing without cloud dependence.

Pros

- High performance with 8 Edge TPU processors supporting 32TOPS

- Low power consumption between 36-52 watts

- Compatible with TensorFlow Lite for easier implementation

Cons

- Requires PCIe Gen3 x16 slot which may not fit all systems

- Limited documentation beyond the manufacturer wiki

- Higher price point compared to basic graphics cards

The youyeetoo AI Accelerator Card brings Google Coral Edge TPU technology to desktop computers through a PCIe card format. Users get dedicated AI processing power without sending data to cloud servers. This makes it ideal for applications that need fast, private AI computing.

Twin turbo fans keep the card cool during operation. The thermal design helps maintain performance during extended AI processing tasks. With power consumption of just 36-52 watts, this card uses much less electricity than high-end GPUs while still handling complex AI tasks.

The card supports parallel AI analytics and model pipelining. This means users can run several AI models at once with low latency. For businesses working with computer vision, natural language processing, or other AI tasks, this accelerator offers a way to speed up operations without major system upgrades.

WayPonDEV AI Accelerator Card

The WayPonDEV PCIe Gen3 AI Accelerator offers Google Edge TPU technology for AI tasks, but its low customer rating suggests performance issues that make it hard to recommend for most users.

Pros

- Supports up to 8 Google Edge TPU modules for AI processing

- Compatible with standard PCIe Gen3 x16 slots for easy installation

- Features copper heatsink and twin fans for thermal management

Cons

- Very low customer satisfaction (2.9/5 stars)

- Limited customer feedback with only 2 total ratings

- Lacks software support documentation for new users

This AI accelerator card aims to bring edge computing power to desktop systems. The device leverages Google’s Edge TPU architecture to handle machine learning tasks locally without requiring cloud processing. Users can run TensorFlow Lite pre-trained models directly on the hardware, which could benefit developers working on AI applications.

Installation seems straightforward with the card fitting into standard PCIe Gen3 x16 slots. The cooling system includes copper heatsinks and twin turbofans, which should help manage heat during intensive processing tasks. This design choice appears important for maintaining performance when running multiple AI models simultaneously.

Customer feedback raises concerns about this product. With only two reviews and a below-average rating of 2.9 stars, potential buyers should proceed with caution. The listing claims the card works with both desktops and laptops with appropriate slots, although laptops rarely expose a PCIe x16 slot, and the limited real-world feedback makes it difficult to verify performance claims. For those needing AI acceleration capabilities, exploring options with more established user feedback might be safer.

Waveshare Hailo-8 AI Accelerator

This AI accelerator offers impressive computing power for edge devices but lacks essential accessories and documentation needed for proper setup.

Pros

- Powerful 26 TOPS AI processing with very low power consumption

- Compatible with multiple AI frameworks including TensorFlow and PyTorch

- Works with both Raspberry Pi 5 and other systems running Linux or Windows

Cons

- Ships without cooling solutions or heatsinks

- Minimal documentation included in the package

- Limited user reviews make reliability hard to assess

The Waveshare Hailo-8 M.2 AI Accelerator brings serious computing muscle to edge devices. With 26 Tera-Operations Per Second (TOPS) of processing power while using only 2.5W of power, it offers an efficient way to run AI models without cloud connectivity. This module fits the M.2 slot on a Raspberry Pi 5 or other compatible systems, making it accessible for many different projects.

Users can run multiple AI models at once on this accelerator. The device supports common frameworks like TensorFlow, PyTorch, and ONNX, which helps developers work with their existing code. Its wide operating temperature range (-40°C to 85°C) means it can function in harsh environments where other computing hardware might fail.

One major drawback is the bare-bones packaging. Customers report receiving just the module with no cooling solution or detailed setup instructions. Since AI accelerators can generate significant heat during operation, the lack of included heatsinks is concerning. Buyers should plan to purchase additional cooling components before using this device for serious workloads.

AI enthusiasts looking for edge computing solutions might appreciate the Hailo-8’s capabilities, but should be prepared to do extra research. Waveshare mentions “rich Wiki resources” but customers will need to contact the company directly to access these materials. This product works best for those with technical experience who don’t mind some additional setup challenges.

SmartFly AI Accelerator Card

This AI accelerator card offers powerful edge computing capabilities for professionals who need fast AI processing without the cloud.

Pros

- Supports up to 16 Google Edge TPU modules for impressive inference power

- Works with pre-trained TensorFlow Lite models out of the box

- Includes effective cooling with copper heatsink and dual fans

Cons

- Higher price point than some competing accelerators

- Limited to TensorFlow Lite framework

- Requires PCIe Gen3 x16 slot which may not be available in all systems

The SmartFly PCIe Gen3 AI Accelerator brings Google’s Coral Edge TPU technology to standard desktop computers. This card plugs into a regular PCIe slot and adds substantial AI processing power. Users can run machine learning models directly on this hardware instead of sending data to the cloud.

Setup is straightforward for those familiar with TensorFlow. The card works best with Google’s pre-trained models, which can be easily loaded and run. This makes it useful for businesses that need quick AI inference without training models of their own.

Cooling matters for AI hardware, and SmartFly addressed this well. The card features a copper heatsink and two fans that keep temperatures in check even during heavy workloads. It fits standard PCIe Gen3 x16 slots found in most desktop computers and workstations from the last several years.

This accelerator shines in applications like computer vision, natural language processing, and other AI tasks that benefit from dedicated hardware. Its main advantage is processing data locally without internet connectivity. This approach improves both speed and privacy compared to cloud-based solutions.

Intel QuickAssist Adapter

The Intel QuickAssist Accelerator Card offers specialized computing power for businesses needing encryption and compression performance in a compact, renewed package.

Pros

- Low-profile design fits in space-constrained servers

- PCI Express 3.0 interface ensures wide system compatibility

- Offloads CPU-intensive tasks for better overall system performance

Cons

- Renewed product may have shorter lifespan than new alternatives

- Limited information available about performance specifications

- May require specific software configuration for optimal use

This Intel QuickAssist Adapter 8970 works as a specialized tool for businesses handling encryption and data compression tasks. It plugs into standard PCI Express slots and takes over these demanding jobs from the main processor. Users can expect smoother system operation when dealing with secure communications or large data sets.

The card’s low-profile design makes it valuable for smaller servers where space matters. Many data centers need to pack computing power into tight spaces, and this adapter meets those requirements. The renewed status of this product offers a more affordable entry point for organizations looking to boost their capabilities without paying full price.

System administrators should note that this accelerator works best with compatible Intel systems. The adapter handles specific workloads rather than general computing tasks. Businesses processing large amounts of encrypted traffic or compressing significant data volumes will see the most benefit from adding this specialized hardware to their systems.

HP Fusion ioFX Accelerator

The HP Fusion ioFX accelerator card offers significant performance improvements for video professionals, though its older technology limits its appeal for current workflows.

Pros

- Speeds up video rendering and playback significantly

- Large 410GB storage capacity for working files

- PCIe interface for direct motherboard connection

Cons

- Uses older technology compared to newer AI accelerators

- Limited compatibility with current software

- Higher power consumption than newer alternatives

The HP Fusion ioFX functions as a specialized PCIe card designed to boost performance in video editing and rendering tasks. With 410GB of onboard flash storage, it allows editors to keep working files directly on the card, reducing the data transfer bottlenecks that slow down production workflows. This approach works differently from modern GPU acceleration, focusing instead on storage speed and direct data access.

Video professionals working with high-resolution content will notice the card’s impact on tasks like playback, compositing, and transcoding. The acceleration happens because the system can access footage without the delays of traditional storage. For studios still using compatible software from the 2014-2015 era, this card may extend the useful life of older workstations without requiring a complete system upgrade.

The card’s age does present some limitations. Released in 2014, the technology predates many current editing applications and may lack driver support for newer operating systems. Modern AI accelerators focus more on computational power rather than the storage-based approach of the Fusion ioFX. Those working with current software packages might find greater benefits from newer GPU or specialized AI accelerator solutions that better support today’s demanding workloads.

Netgate QuickAssist Cryptographic Card

The Netgate Cryptographic Accelerator Card delivers impressive performance for businesses needing faster encryption processing and secure connections.

Pros

- Provides up to 50Gbps of hardware acceleration

- Dramatically improves TLS performance (3.5x faster than unaccelerated servers)

- Works with most PC, server, and workstation platforms through standard PCIe interface

Cons

- Higher price point than software-only solutions

- Requires signature on delivery which may delay installation

- Limited use cases for average consumers

The Intel “Coleto Creek” powered CPIC-8955 card fits into a PCIe slot and takes over the heavy lifting for encryption tasks. With its QuickAssist technology, the card reaches 50Gbps throughput for cryptographic operations. This makes a big difference for servers handling lots of secure connections or processing encrypted data.

Businesses running web services on NGINX or using OpenSSL will see the biggest benefits. The card handles computationally expensive operations like RSA decryption extremely fast, at up to 170,000 operations per second with 1k-bit keys. Server admins will appreciate the reduced CPU load when the card handles these tasks instead of the main processor.

The accelerator also helps with data compression at speeds up to 20Gbps. This feature saves storage space and speeds up data transfers. Companies working with large datasets or running virtualized environments will find this particularly useful. The card supports Single Root I/O Virtualization, making it work well in virtual machine setups.

Installation is straightforward for IT professionals. The card uses a PCIe Gen2 x16 interface and works with most server platforms. Businesses concerned about data security and connection speeds should consider this specialized hardware, especially when running high-traffic secure services or working with encrypted storage.

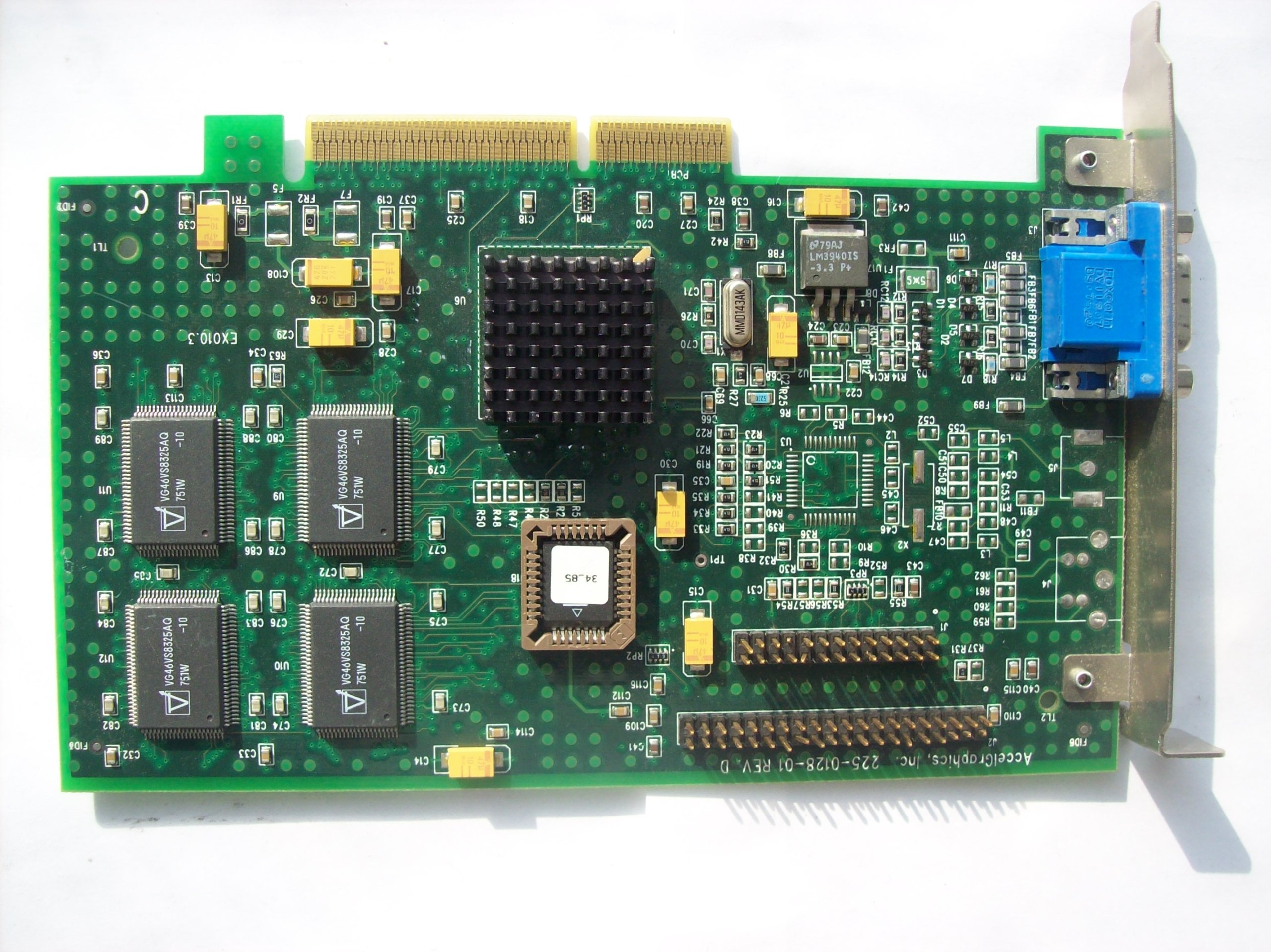

AccelGraphics AGP Video Card

This legacy AGP video card offers a simple solution for older desktop systems needing a basic graphics replacement with included warranty protection.

Pros

- Includes warranty coverage

- Compatible with AGP interface systems

- Lightweight design at only 1 pound

Cons

- Very dated technology for modern computing

- Limited 8MB memory capacity

- Minimal customer feedback available

The AccelGraphics 225-0128-01 functions as a replacement video card specifically designed for older desktop computers with AGP slots. The listing gives a 2010 release date, but an AGP card with 8MB of memory is typical of late-1990s hardware, so the date more likely reflects when the item was listed. Users with legacy systems may find this product valuable when needing to replace damaged graphics hardware.

AGP interface technology predates the modern PCI Express standard found in contemporary computers. The card features AMD graphics processing technology with 8MB of memory. Though modest by today’s standards, this specification would handle basic display functions for vintage systems where compatibility rather than performance is the priority.

Warranty coverage provides some peace of mind for buyers investing in older technology. The product maintains a perfect 5-star rating, though this comes from just a single review. For collectors maintaining vintage systems or for specialized industrial computers that require exact replacement parts, this AccelGraphics card fills a specific niche that newer graphics cards cannot serve.

NVIDIA Tesla V100 GPU Accelerator

The NVIDIA Tesla V100 offers powerful AI acceleration capabilities for serious deep learning and high-performance computing tasks at a competitive price point.

Pros

- 16GB high-bandwidth memory for handling large AI models

- PCI Express interface for easy installation in compatible systems

- Designed specifically for machine learning and HPC workloads

Cons

- May require additional cooling solutions

- Significant power requirements compared to consumer GPUs

- Limited value for non-AI or computational workloads

The Tesla V100 stands out in the AI accelerator market with its Volta architecture. This card packs 16GB of memory, making it suitable for training complex neural networks and running inference on large datasets. Users looking to speed up machine learning projects will find this card handles tensor operations efficiently.

CG CHIPS GATE offers this professional-grade GPU without any handling fees. The company focuses on product quality rather than marketing, which helps keep costs down. For bulk orders, they consider reasonable offers and respond quickly to inquiries.

Setup is straightforward with the standard PCI Express interface. The card works well for deep learning frameworks like TensorFlow and PyTorch. Some systems may need power supply upgrades to accommodate the card’s requirements. The seller provides secure packaging and accepts returns if customers aren’t satisfied.

For organizations running AI research or data centers needing computational power, this accelerator delivers good performance. The Tesla V100 represents NVIDIA’s dedicated approach to AI computing with specialized tensor cores. Buyers should check their system compatibility before purchase to ensure proper fit and function.

TUOPUONE Hailo-8 AI Accelerator

The TUOPUONE Hailo-8 AI Accelerator offers excellent performance for edge AI applications with its powerful 26 TOPS processor while maintaining low power consumption.

Pros

- Impressive 26 TOPS processing power with minimal 2.5W typical power consumption

- Compatible with multiple AI frameworks including TensorFlow, PyTorch, and ONNX

- Includes comprehensive accessory bundle with camera, cooling fan, and memory card

Cons

- Higher price point compared to basic AI modules

- Requires technical knowledge to fully utilize its capabilities

- Limited documentation for beginners

This M.2 AI accelerator module stands out in the edge computing market. The Hailo-8 processor delivers 26 trillion operations per second while drawing only 2.5W of power in typical use cases. This balance makes it ideal for running complex AI models without requiring massive power supplies.

Compatibility is a major strength of this device. It works with popular AI frameworks like TensorFlow, PyTorch, and ONNX. The module supports both Windows and Linux operating systems, giving developers flexibility in their choice of development environment. Its wide operating temperature range from -40°C to 85°C allows for deployment in various environmental conditions.

The package includes everything needed to get started. Buyers receive the Hailo-8 M.2 module, PCIe adapter board, a Raspberry Pi camera module, 64GB memory card, and cooling solutions. The PCIe to M.2 adapter includes power monitoring capabilities for stable operation. Its design features a reserved airflow vent for installing the included cooling fan, which helps maintain optimal performance during intensive processing tasks.

Ayzung NVIDIA Tesla K80 GPU Accelerator

The Ayzung NVIDIA Tesla K80 24GB GPU accelerator offers powerful computing capabilities for AI workloads at a reasonable price point for serious developers.

Pros

- Substantial 24GB GDDR5 memory capacity (12GB per GPU)

- Dual-GPU board with two GK210 chips

- Compatible with many computing applications

Cons

- Older Kepler architecture compared to newer models

- Can generate significant heat during intensive tasks

- Requires robust power supply

This accelerator card serves as a low-cost entry point for those wanting to dive into AI development. The K80’s Kepler architecture is far from the newest on the market, so confirm that your framework and driver versions still support it before buying.

Users will find the 24GB memory helpful when working with larger datasets or complex models, though it is split evenly across the board’s two GPUs, so each GPU sees 12GB. The K80 is a headless compute card with no display outputs, so the system needs a separate graphics adapter for video.

Power and cooling considerations remain important with this card. The K80 is passively cooled and relies on strong chassis airflow, and it needs a robust power supply to maintain stability during extended AI training sessions. For businesses or individuals starting their journey into AI acceleration, this Ayzung model balances cost with capability.

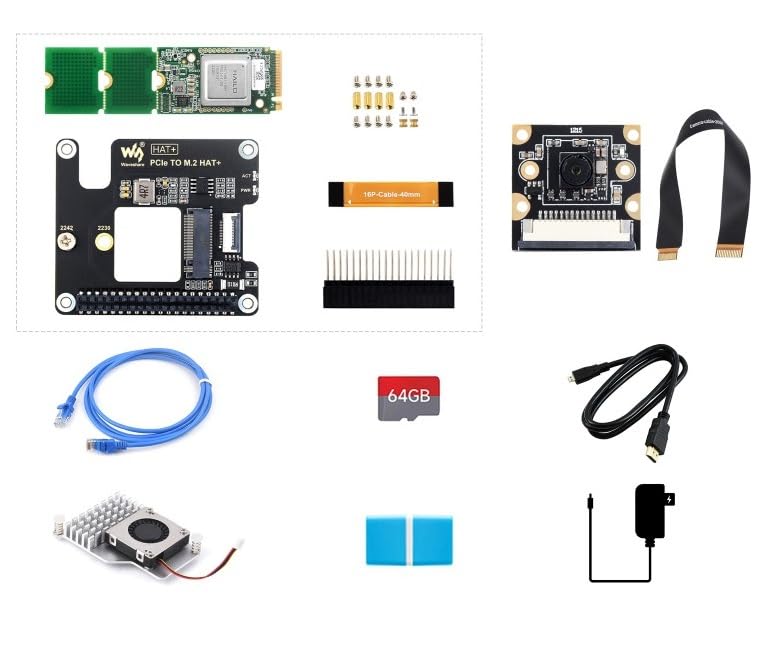

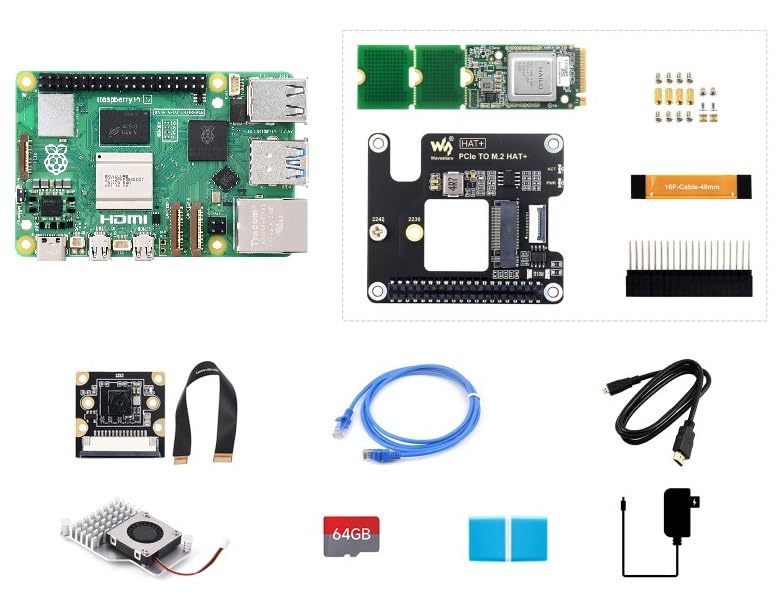

TUOPUONE Raspberry Pi 5 AI Kit

This complete AI development kit offers high-performance edge computing with its powerful Hailo-8 accelerator, making it ideal for hobbyists and professionals working on AI projects.

Pros

- 26 TOPS computing power with minimal power consumption

- Complete package includes all necessary accessories

- Supports multiple AI frameworks and operating systems

Cons

- Only 2GB RAM might limit some advanced applications

- Requires technical knowledge to fully utilize

- Higher price point compared to basic Raspberry Pi setups

The TUOPUONE Raspberry Pi 5 AI Kit combines the latest Pi 5 board with a powerful Hailo-8 AI accelerator module. This combination delivers impressive 26 TOPS (Tera-Operations Per Second) of computing power while consuming only 2.5W of power. Users can run complex AI models and process multiple data streams at once with this setup.

Everything needed comes in the box. The kit includes the Pi 5 board, AI accelerator, camera module, power supply, memory card, and necessary cables. This saves time and money compared to buying components separately. The included 8MP IMX219-77 camera makes vision-based AI projects easy to start right away.

The Hailo-8 accelerator supports popular AI frameworks like TensorFlow, PyTorch, and ONNX. This flexibility lets developers use their preferred tools without compatibility issues. The wide operating temperature range from -40°C to 85°C also makes this system suitable for various environments, from indoor projects to industrial applications.

Connection options are plentiful with this kit. It features dual USB 3.0 ports, Gigabit Ethernet, dual-band Wi-Fi, and Bluetooth 5.0. The PCIe interface gives additional expansion possibilities through the included M.2 adapter. Dual 4K HDMI outputs allow for sophisticated display setups when needed.

Buying Guide

Choosing the right AI accelerator card means balancing your needs with your budget. Not every feature matters for every user, and some cards excel in specific tasks.

Performance Requirements

First, identify your AI workload. Different cards handle tasks like machine learning training, inference, and computer vision with varying efficiency.

Key Performance Metrics:

- Compute power (TFLOPS, or TOPS for integer inference)

- Memory capacity and bandwidth

- Power consumption

- Model size support
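These metrics can be turned into a simple shortlist filter. The sketch below is one possible way to do that, using two entries with the throughput, power, and framework figures quoted in the reviews above. The `Card` class and `shortlist` function are hypothetical helpers, not part of any vendor toolkit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    tops: float            # vendor-quoted peak throughput, in TOPS
    max_watts: float       # upper end of the quoted power draw
    frameworks: frozenset  # frameworks named in the review


# Figures as quoted in the reviews in this article.
CARDS = [
    Card("Hailo-8 M.2 module", 26.0, 2.5, frozenset({"TensorFlow", "PyTorch", "ONNX"})),
    Card("8x Edge TPU PCIe card", 32.0, 52.0, frozenset({"TensorFlow Lite"})),
]


def shortlist(cards, min_tops, max_watts, framework):
    """Names of cards that meet every requirement, fastest first."""
    matches = [
        c for c in cards
        if c.tops >= min_tops and c.max_watts <= max_watts and framework in c.frameworks
    ]
    return [c.name for c in sorted(matches, key=lambda c: c.tops, reverse=True)]


if __name__ == "__main__":
    print(shortlist(CARDS, min_tops=20, max_watts=10, framework="PyTorch"))
```

Extending the list with memory capacity or price follows the same pattern: add a field and one more condition to the filter.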

Form Factor Considerations

AI accelerator cards come in several physical formats to fit different systems.

| Form Factor | Best For | Considerations |
|---|---|---|
| PCIe Cards | Desktops, servers | Requires PCIe slots, good cooling |
| Compact modules | Edge devices, small systems | Lower power, less performance |
| Rack-mounted | Data centers | Higher cost, scalable |

Memory and Bandwidth

Memory affects how large an AI model you can run. More memory lets you work with complex models without performance hits.

Bandwidth determines how quickly data moves between memory and processing units. This becomes crucial for real-time applications.

Software Ecosystem

Check compatibility with your preferred AI frameworks. Many accelerators work best with specific software stacks.

Popular frameworks to consider:

- TensorFlow

- PyTorch

- ONNX

Cooling and Power

AI accelerators generate significant heat when running complex workloads. Make sure your cooling solution can handle the card’s thermal output.

Power requirements vary widely. Factor in your system’s power supply capacity and energy costs for continuous operation.
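To put energy costs in perspective, the sketch below estimates the yearly electricity bill for a card running continuously. The $0.15 per kWh price is an assumed placeholder, so substitute your local rate. The two wattages correspond to the high-end GPU and Hailo-8 figures mentioned in this article.

```python
def annual_energy_cost(watts: float, price_per_kwh: float, hours_per_day: float = 24.0) -> float:
    """Yearly electricity cost for a device drawing `watts` on average."""
    kwh_per_year = watts / 1000.0 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


if __name__ == "__main__":
    price = 0.15  # assumed electricity price per kWh
    for label, watts in [("400W GPU", 400.0), ("2.5W edge module", 2.5)]:
        print(f"{label}: ${annual_energy_cost(watts, price):.2f} per year")
```

The estimate covers the card alone. Cooling overhead and the rest of the system add to the real figure.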

Frequently Asked Questions

AI accelerator cards have become essential components in modern computing systems designed for machine learning and deep learning tasks. These specialized hardware solutions offer unique advantages in specific use cases.

What are the benefits of using an AI accelerator over a traditional GPU?

Purpose-built AI accelerators can deliver higher performance than general-purpose GPUs on the machine learning tasks they target. They are specifically designed to handle matrix multiplications and other operations common in AI workloads.

Power efficiency is another major benefit. AI accelerators can process more operations per watt, reducing energy costs in data centers.

Many AI accelerators include specialized memory architectures that minimize data movement, which is often a bottleneck in AI computations.

How do AI accelerator cards compare in terms of performance and cost?

Performance varies widely across different AI accelerator cards. NVIDIA’s A100 and H100 cards offer exceptional performance but at premium prices, while offerings from companies like Habana Labs provide competitive performance at lower costs.

The performance-per-dollar ratio has improved dramatically in recent generations. New entrants like Graphcore and Cerebras have created alternative architectures that excel at specific workloads.

Cloud providers now offer access to various AI accelerators through their services, allowing users to test different options without large upfront investments.

Which companies are leading in the production of AI accelerator cards?

NVIDIA remains the dominant player with their GPU-based accelerators that have evolved to include tensor cores and other AI-specific features. Their market share in AI training hardware is commonly estimated at over 80%.

Google has developed multiple generations of Tensor Processing Units (TPUs), which are used primarily in their cloud services and internal AI projects.

Intel acquired Habana Labs and now offers Gaudi accelerators for training and Goya accelerators for inference workloads.

Other notable players include AMD with their Instinct line, Graphcore with their Intelligence Processing Units, and Cerebras with their wafer-scale engine technology.

Can you explain the primary functions of AI accelerator cards in machine learning workloads?

AI accelerator cards excel at parallel processing of mathematical operations, particularly matrix multiplications that form the core of deep learning models. This parallelization dramatically speeds up both training and inference.

These cards handle tensor operations efficiently through specialized hardware units. Modern AI accelerators often include dedicated tensor cores that can perform multiple multiply-accumulate operations simultaneously.
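To make the multiply-accumulate idea concrete, the sketch below implements a naive matrix multiplication in plain Python and counts the multiply-accumulate (MAC) steps. It only illustrates the arithmetic that accelerators parallelize, not how any particular tensor core is implemented, and the function name is made up for this example.

```python
def matmul_with_mac_count(a, b):
    """Multiply two matrices given as lists of rows and count the MAC steps."""
    rows, inner, cols = len(a), len(b), len(b[0])
    out = [[0.0] * cols for _ in range(rows)]
    macs = 0
    for i in range(rows):
        for j in range(cols):
            acc = 0.0
            for k in range(inner):
                acc += a[i][k] * b[k][j]  # one multiply-accumulate
                macs += 1
            out[i][j] = acc
    return out, macs


if __name__ == "__main__":
    result, macs = matmul_with_mac_count([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(result, macs)
```

Each output element depends only on one row of `a` and one column of `b`, so all of them can be computed independently. That independence is what lets parallel hardware execute many MACs at once.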

Memory management is another critical function. AI accelerators include high-bandwidth memory closely integrated with processing units to minimize data transfer delays.

How does the integration of AI accelerator cards affect the overall system architecture?

AI accelerators typically connect to host systems via PCIe interfaces, though some newer designs use more advanced interconnects. This connection can become a bottleneck in data-intensive applications.
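A back-of-the-envelope calculation shows why the host link can matter. The sketch below derives the theoretical one-direction bandwidth of a PCIe Gen3 x16 link (8 GT/s per lane with 128b/130b encoding) and the best-case time to move a batch of images across it. The batch size and image dimensions are hypothetical, and real transfers are slower because of protocol overhead.

```python
def pcie_gb_per_s(gt_per_s: float, lanes: int, encoding_efficiency: float) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    return gt_per_s * encoding_efficiency * lanes / 8.0  # 8 bits per byte


def transfer_ms(num_bytes: int, gb_per_s: float) -> float:
    """Best-case time to move `num_bytes` across the link, in milliseconds."""
    return num_bytes / (gb_per_s * 1e9) * 1e3


if __name__ == "__main__":
    # PCIe Gen3: 8 GT/s per lane with 128b/130b line encoding.
    gen3_x16 = pcie_gb_per_s(8.0, 16, 128 / 130)
    batch_bytes = 256 * 224 * 224 * 3  # hypothetical batch of 8-bit RGB images
    print(f"Gen3 x16: {gen3_x16:.2f} GB/s, "
          f"batch transfer: {transfer_ms(batch_bytes, gen3_x16):.2f} ms")
```

If the accelerator finishes a batch faster than the link can deliver the next one, the link becomes the limiting factor rather than the compute units.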

System cooling requirements increase significantly with AI accelerators. Some high-end deployments use liquid cooling to maintain optimal performance, while most PCIe cards rely on strong chassis airflow.

Power delivery systems often need upgrades to support AI accelerator cards. A single high-performance card may require 300-400 watts or more under full load.

Software stacks must be optimized to leverage accelerator capabilities. This includes specialized drivers, libraries, and sometimes completely different programming models.

What should be considered when choosing an AI accelerator card for specific applications?

Workload characteristics should drive selection decisions. Training large models requires different hardware than running inference on already-trained models.

Memory capacity is crucial for handling large models. Some recent language models require dozens of gigabytes of memory, limiting the hardware options.

Software compatibility cannot be overlooked. The best hardware is useless if your software stack doesn’t support it effectively.

Total cost of ownership includes not just the purchase price but also power consumption, cooling requirements, and potential software development costs for optimization.