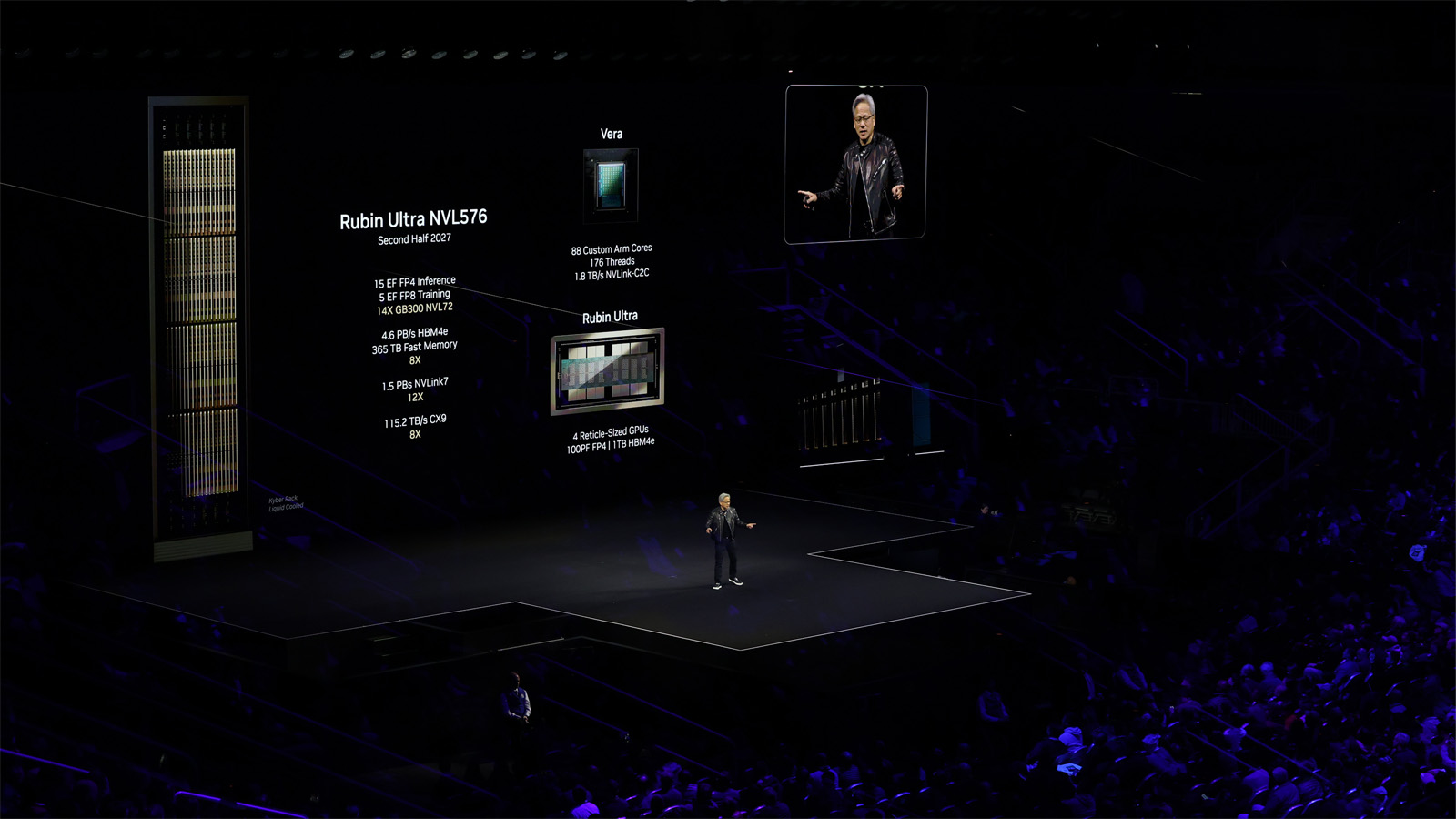

NVIDIA has just revealed its next generation of AI computing technology at GTC 2025: the Rubin Ultra with NVL576 rack configuration. This system will follow the standard Rubin GPU and is planned for release in the second half of 2027. The Rubin Ultra NVL576 rack is expected to deliver 15 exaflops of FP4 inference and 5 exaflops of FP8 training performance, which NVIDIA claims is 14 times the performance of its current GB300 NVL72 rack.

The company showed a mockup of these future systems at its GPU Technology Conference, highlighting the liquid-cooled “Kyber Rack” design that will support up to 600 kilowatts of power. Each rack will connect 576 Rubin Ultra GPUs, each backed by next-generation HBM4e memory. NVIDIA’s roadmap now runs through 2027, with Vera Rubin NVL144 planned for 2026 and Rubin Ultra NVL576 for the year after.

Rubin Ultra NVL576: A 600kW Beast Built for the AI Future

At GTC 2025, NVIDIA pulled the curtain back on what might be the most audacious leap in AI computing we’ve seen yet—the Rubin Ultra platform, powered by the massive NVL576 rack-scale system. This isn’t just another GPU announcement; it’s a bold reimagining of what AI data centers will look like by the time we hit the back half of this decade.

Let’s break it down: the NVL576 architecture connects a staggering 576 Rubin Ultra GPUs—paired with Vera CPUs—using NVIDIA’s next-gen NVLink. That’s right, NVLink is now in its seventh generation, and the improvements in bandwidth and coherence between GPUs and CPUs are game-changing. We’re talking about a system designed from the ground up to scale across racks, not just boards or blades. This is high-performance computing on a whole new tier.

What Kind of Performance Are We Looking At?

NVIDIA is promising up to 15 exaflops of FP4 inference performance. Let that number sink in. In terms of AI workloads, this means real-time inference on models once considered too large and the ability to run multiple massive LLMs simultaneously in production environments.

On the training side, Rubin Ultra NVL576 delivers up to 5 exaflops of FP8 performance. NVIDIA puts the system at 14x the performance of the already-massive GB300 NVL72, its Blackwell Ultra rack. Simply put, Rubin Ultra isn’t just scaling up; it’s redefining the scale.

Liquid Cooling, 600kW Racks, and Data Center Implications

With great power comes… a whole lot of heat. Each Rubin Ultra rack will draw around 600 kilowatts of power, several times the energy demands of NVIDIA’s current rack-scale systems. To handle that thermal load, the NVL576 racks are entirely liquid-cooled, signaling a broader industry shift where air cooling just won’t cut it anymore for top-tier AI infrastructure.

Data centers planning to adopt Rubin Ultra are going to need to rethink their power and cooling strategies. This isn’t a plug-and-play upgrade—it’s a massive investment into the future of AI computing.
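To put 600 kilowatts in planning terms, the short Python sketch below converts a rack's power draw into yearly energy. Only the 600kW figure comes from the announcement; the utilization and PUE (power usage effectiveness) parameters are illustrative assumptions, not NVIDIA figures.

```python
# Rack power quoted in the article; every other input is an assumption.
RACK_POWER_KW = 600.0
HOURS_PER_YEAR = 24 * 365  # 8760 hours, ignoring leap years


def annual_energy_mwh(power_kw: float, utilization: float = 1.0, pue: float = 1.0) -> float:
    """Return the energy drawn over one year, in megawatt-hours.

    utilization: assumed average fraction of peak power actually drawn.
    pue: assumed facility overhead factor; 1.0 means no cooling or
         distribution overhead at all.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    if pue < 1.0:
        raise ValueError("pue cannot be below 1.0")
    return power_kw * utilization * pue * HOURS_PER_YEAR / 1000.0


if __name__ == "__main__":
    # One rack running flat out with no facility overhead.
    print(annual_energy_mwh(RACK_POWER_KW))
    # Hypothetical scenario: 80% average load in a facility with PUE 1.2.
    print(annual_energy_mwh(RACK_POWER_KW, utilization=0.8, pue=1.2))
```

At continuous full load a single rack works out to 5,256 MWh per year before any facility overhead, which is why power delivery has to be planned long before the hardware arrives.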

Meet Vera: Rubin’s CPU Sidekick

Backing up those 576 GPUs are NVIDIA’s new Vera CPUs, which debut with the Vera Rubin platform in 2026 and carry over to Rubin Ultra. These CPUs are based on Arm architecture and tuned specifically for AI and high-throughput environments. They act as the glue between Rubin GPUs, coordinating workflows and maintaining high-speed coherence across the system via NVLink.

The combination of Rubin GPUs and Vera CPUs is meant to offload more AI-related compute directly to the GPU fabric, while Vera manages orchestration, scheduling, and high-speed data movement without creating bottlenecks.

Designed for the Era of LLMs and AGI

Let’s be real—this isn’t for your average machine learning project. Rubin Ultra is purpose-built for the mega-scale AI workloads that define this new era: generative AI models with trillions of parameters, real-time AI agents, foundation models serving millions of users simultaneously, and edge-AI aggregation at planetary scale.

It’s NVIDIA’s clearest signal yet that the future of AI infrastructure isn’t just about faster chips—it’s about AI factories. Rubin Ultra racks are essentially self-contained AI power plants, capable of delivering performance once reserved for entire data centers, all in a single rack-sized footprint.

When Will It Be Available?

NVIDIA has its eyes on a 2027 release window for Rubin Ultra and the NVL576 platform. That’s ambitious but gives cloud providers, hyperscalers, and enterprises time to prepare for the next big leap in compute infrastructure. Before that, in 2026, NVIDIA plans to roll out Vera Rubin NVL144, a smaller sibling with 144 Rubin GPUs, delivering 3.6 exaflops of FP4 and 1.2 exaflops of FP8.

Think of NVL144 as the stepping stone—a preview of Rubin’s architectural power—before Rubin Ultra arrives to completely rewrite the rules of AI compute.
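How big is the step from NVL144 to NVL576? The sketch below compares the two racks using only the figures quoted above (GPU counts and FP4/FP8 exaflops). The `RackSpec` class and `scaling` helper are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackSpec:
    """Headline figures for one rack, as quoted in the article."""
    name: str
    gpus: int
    fp4_exaflops: float
    fp8_exaflops: float

    def fp4_petaflops_per_gpu(self) -> float:
        # 1 exaflop = 1000 petaflops
        return self.fp4_exaflops * 1000 / self.gpus


NVL144 = RackSpec("Vera Rubin NVL144", gpus=144, fp4_exaflops=3.6, fp8_exaflops=1.2)
NVL576 = RackSpec("Rubin Ultra NVL576", gpus=576, fp4_exaflops=15.0, fp8_exaflops=5.0)


def scaling(new: RackSpec, old: RackSpec) -> dict[str, float]:
    """Return how many times larger each headline figure is in `new`."""
    return {
        "gpus": new.gpus / old.gpus,
        "fp4": new.fp4_exaflops / old.fp4_exaflops,
        "fp8": new.fp8_exaflops / old.fp8_exaflops,
    }


if __name__ == "__main__":
    for key, value in scaling(NVL576, NVL144).items():
        print(f"{key}: {value:.2f}x")
    for rack in (NVL144, NVL576):
        print(f"{rack.name}: {rack.fp4_petaflops_per_gpu():.1f} PF FP4 per GPU")
```

On these numbers the GPU count grows 4x while FP4 and FP8 throughput grow a little over 4x, so most of the rack-level gain comes from scale rather than from a large per-GPU jump.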

The Bigger Picture

The Rubin Ultra NVL576 isn’t just another GPU rack—it’s the next rung on the AI evolution ladder. In many ways, it’s a response to the exploding demands of LLMs, diffusion models, and general-purpose AI that need way more performance than any current system can deliver. NVIDIA is betting big on where things are headed, and with Rubin Ultra, they’re building a system that can keep up with the vision.

And if the past decade has taught us anything, it’s that when NVIDIA places a bet like this—it’s usually right.

Key Takeaways

- The Rubin Ultra NVL576 system offers 15 exaflops of FP4 inference performance, which NVIDIA says is a 14x improvement over the current GB300 NVL72.

- NVIDIA plans to release the liquid-cooled “Kyber Rack” system in the second half of 2027, following next year’s standard Rubin GPU release.

- Each 600kW NVL576 rack will connect 576 Rubin Ultra GPUs, each backed by HBM4e memory, significantly advancing data center AI computing capabilities.

Overview of NVIDIA Rubin Ultra

NVIDIA’s Rubin Ultra represents a significant leap forward in AI computing capabilities, featuring unprecedented processing power and innovative rack configurations designed for advanced AI workloads.

Introducing the Rubin Ultra NVL576

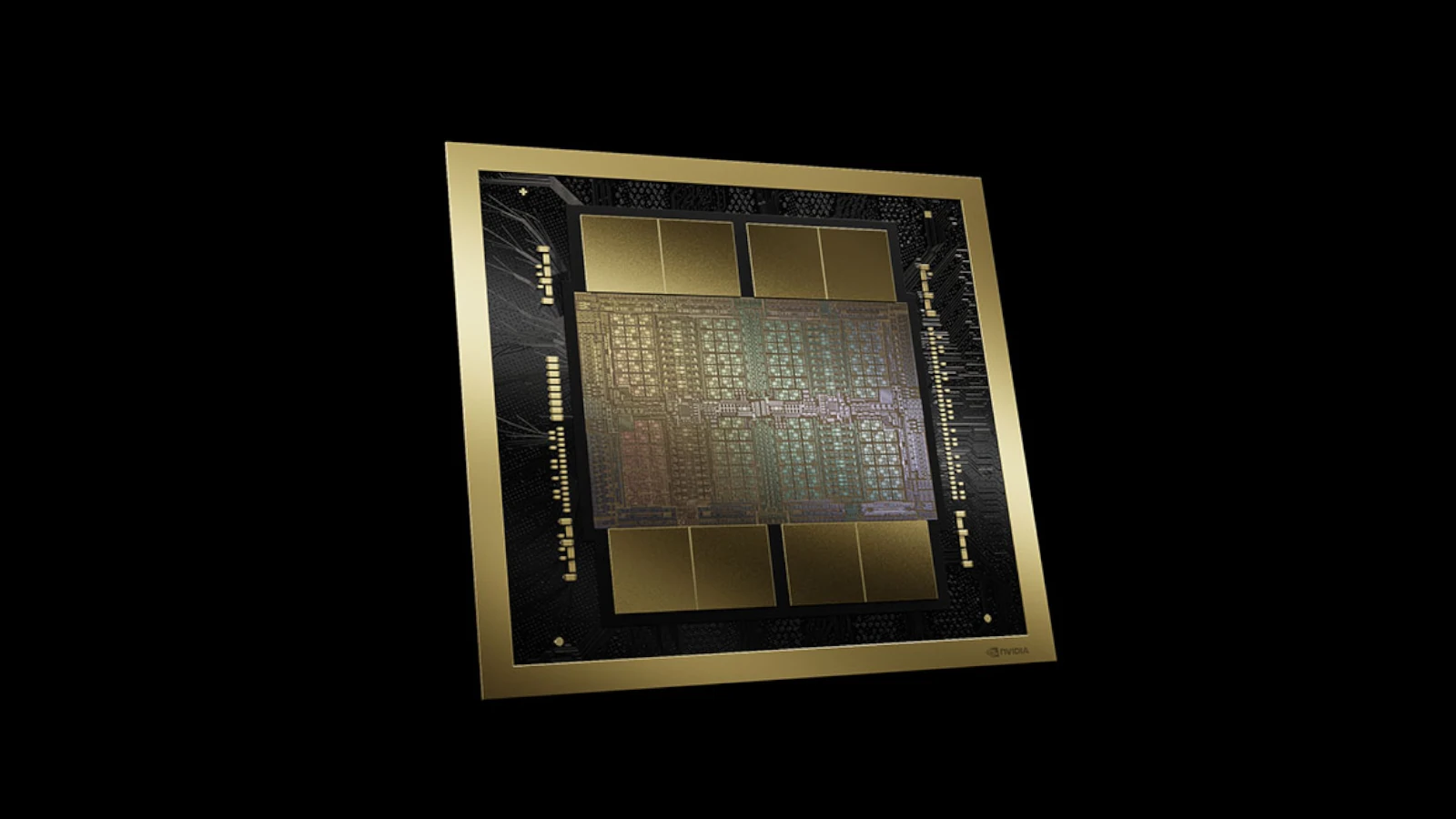

The Rubin Ultra NVL576 platform, unveiled at GTC 2025, showcases NVIDIA’s ambitious vision for AI infrastructure. This system interconnects 576 Rubin Ultra GPUs in specialized Kyber racks, with each Rubin Ultra package delivering 100 petaflops of FP4 performance and the full rack reaching 15 exaflops. The platform uses an advanced chip design with four reticle-sized dies per GPU.

NVIDIA’s mockup displays reveal the sheer scale of this technology, with the Kyber racks requiring substantial power infrastructure, reportedly up to 600,000 watts (600kW). Each Rubin Ultra package effectively combines two Rubin GPUs, for four reticle-sized dies in total, doubling per-package performance.

This platform isn’t expected to reach the market until late 2027, positioning it as NVIDIA’s future flagship offering for enterprise AI and research applications.

Evolution from Previous Models

The Rubin Ultra marks a clear evolution from NVIDIA’s existing lineup, including the Blackwell Ultra GB300 and Vera Rubin architectures. These previous models established the foundation for NVIDIA’s AI acceleration strategy, with each generation bringing significant performance improvements.

What sets Rubin Ultra apart is its unprecedented scale and integration capabilities. The system introduces HBM4 memory, enhancing bandwidth for complex AI workloads. This represents a substantial leap beyond previous generations in terms of both raw computational power and system architecture.

NVIDIA has developed the Rubin Ultra as part of its long-term roadmap for AI computing infrastructure. The platform shows how the company continues to push boundaries in GPU design, power delivery, and system integration to meet growing demands for AI training and inference capabilities.

Key Features and Advancements

The Rubin Ultra represents a significant leap forward in GPU technology, introducing groundbreaking capabilities that push the boundaries of AI computing. The system combines massive computational power with innovative memory solutions and specialized AI performance enhancements.

Unprecedented Computational Power

The Rubin Ultra delivers an astonishing 100 petaflops of FP4 compute per chip, making it one of the most powerful AI chips ever developed. When scaled up to the NVL576 system configuration, it reaches approximately 5 exaflops of FP8 training performance. This represents a major advancement over previous generation hardware.
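The per-chip and per-rack figures can be cross-checked with a few lines of Python. This is a rough sketch that assumes the "576" in NVL576 counts individual GPU dies and that each Rubin Ultra package holds four of them; under that assumption the rack contains 144 packages.

```python
# Figures quoted in the article.
GPU_DIES_PER_RACK = 576          # assumed here to count dies, not packages
DIES_PER_PACKAGE = 4             # four reticle-sized dies per Rubin Ultra package
FP4_PETAFLOPS_PER_PACKAGE = 100
QUOTED_RACK_FP4_EXAFLOPS = 15


def packages_per_rack(dies: int, dies_per_package: int) -> int:
    """Number of GPU packages, requiring the dies to divide evenly."""
    if dies % dies_per_package != 0:
        raise ValueError("die count is not a multiple of dies per package")
    return dies // dies_per_package


def rack_fp4_exaflops(packages: int, petaflops_per_package: float) -> float:
    """Aggregate FP4 throughput in exaflops (1 EF = 1000 PF)."""
    return packages * petaflops_per_package / 1000


if __name__ == "__main__":
    packages = packages_per_rack(GPU_DIES_PER_RACK, DIES_PER_PACKAGE)
    total = rack_fp4_exaflops(packages, FP4_PETAFLOPS_PER_PACKAGE)
    print(f"{packages} packages, {total:.1f} EF FP4 "
          f"(quoted: {QUOTED_RACK_FP4_EXAFLOPS} EF)")
```

The result, 14.4 exaflops, sits within about 4% of the quoted 15 exaflops, which suggests the headline number is a rounded aggregate of the per-package figure.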

Each NVL576 system contains multiple Rubin Ultra GPUs working in tandem with Nvidia’s custom Vera CPUs. The architecture allows for:

- Up to 8 Rubin Ultra GPUs per blade

- 18 blades per pod in Kyber rack configurations

- Total system power requirements approaching 600,000 watts

The computational density achieved enables training of increasingly complex AI models that were previously impossible or impractical to build.
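The blade and pod figures in the list above do not say how many pods make up a rack, but the number can be inferred. The sketch below assumes the per-blade GPU count and the 576 total are counting the same unit, which the announcement does not spell out; `pods_implied` is a hypothetical helper name.

```python
def pods_implied(total_gpus: int, gpus_per_blade: int, blades_per_pod: int) -> int:
    """Return how many pods are needed to reach total_gpus exactly."""
    gpus_per_pod = gpus_per_blade * blades_per_pod
    pods, remainder = divmod(total_gpus, gpus_per_pod)
    if remainder != 0:
        raise ValueError("total does not divide evenly into full pods")
    return pods


if __name__ == "__main__":
    # 8 GPUs per blade and 18 blades per pod, as listed in the article.
    pods = pods_implied(total_gpus=576, gpus_per_blade=8, blades_per_pod=18)
    print(f"{8 * 18} GPUs per pod, {pods} pods per rack")
```

With 144 GPUs per pod, four fully populated pods account for all 576 GPUs in the rack.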

Enhanced Memory Capabilities

Memory enhancements are a cornerstone of the Rubin Ultra’s design, addressing one of the key bottlenecks in AI computing. Each Rubin Ultra chip integrates with advanced HBM4 memory, providing unprecedented bandwidth and capacity.

The memory specifications include:

- 1TB of HBM4e memory per Rubin Ultra package, up from 288 gigabytes on the standard Rubin GPU

- For comparison, 784GB of unified system memory in the GB300 Blackwell Ultra configuration

- Highly efficient memory architecture optimized for AI workloads

This massive memory footprint allows researchers and companies to work with larger datasets and more complex models without hitting the memory limitations that have constrained previous systems.

AI and Inference Performance

The Rubin Ultra excels particularly in AI inference tasks, where it processes trained models to generate outputs from new inputs. Its FP4 compute capabilities make it especially efficient for inference workloads.

Key performance metrics include:

- 15 exaflops of FP4 inference performance per NVL576 rack

- For comparison, 20 petaflops of AI performance per system in the GB300 Blackwell Ultra configuration

- Built-in 800Gbps Nvidia networking for minimal latency

These specifications enable more responsive AI applications and allow for real-time processing of complex tasks. The integrated Nvidia networking technology ensures that communication between components doesn’t become a bottleneck, maintaining system efficiency even under heavy loads.

The system is expected to launch in the second half of 2027, giving developers time to prepare software optimizations for this new hardware generation.

Technical Specifications

The Rubin Ultra NVL576 represents a significant leap in NVIDIA’s AI computing capabilities with impressive performance metrics and advanced hardware components designed for the most demanding AI workloads.

GPU Architecture and Threads

Rubin Ultra features a revolutionary GPU design with four reticle-sized dies on each GPU chip. This multi-die architecture allows for unprecedented parallel processing capabilities. Each pod houses 18 blades, with each blade supporting up to eight Rubin Ultra GPUs alongside two Vera CPUs for balanced computing.

The platform delivers an astonishing 15 exaflops of FP4 inference and 5 exaflops of FP8 training performance. This represents a 14x improvement over previous generations, making it ideal for complex AI model training and inference.

The CPU side contributes its own parallelism: each Vera CPU runs 176 threads simultaneously, which helps distribute work efficiently and keeps the GPUs supplied with data.

Memory Technology

Memory technology sees a dramatic upgrade with Rubin Ultra implementing HBM4e (High Bandwidth Memory) with 1TB capacity per unit. This represents a significant jump from the previous HBM3E memory standard.

The expanded memory capacity addresses one of the most critical bottlenecks in AI computing: data transfer between storage and processing units. With larger on-device memory, more model parameters can remain resident without swapping.
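As a rough illustration of what 1TB of on-package memory means for model size, the sketch below estimates how many parameters fit at different precisions. It counts weights only and ignores activations, KV caches, and optimizer state, so real limits will be lower. The decimal definition of a terabyte is also an assumption.

```python
TB = 10**12  # decimal terabyte; assumed, since the article does not specify


def max_parameters(capacity_bytes: int, bits_per_param: int) -> int:
    """Upper bound on parameters that fit in memory, weights only."""
    if bits_per_param <= 0:
        raise ValueError("bits_per_param must be positive")
    return capacity_bytes * 8 // bits_per_param


if __name__ == "__main__":
    for label, bits in (("FP4", 4), ("FP8", 8), ("FP16", 16)):
        params = max_parameters(1 * TB, bits)
        print(f"{label}: up to {params / 1e12:.1f} trillion parameters per 1TB")
```

At FP4 a single 1TB package could in principle hold the weights of a two-trillion-parameter model, which is the scale the article describes for next-generation workloads.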

The Rubin family comes in two rack configurations:

- Rubin NVL144: Each GPU package combines two reticle-sized dies with 288GB of memory

- Rubin Ultra NVL576: The flagship configuration, with four reticle-sized dies and the full 1TB of HBM4e per package

This larger memory capacity, together with higher bandwidth, lets bigger models train and serve without spilling to slower memory tiers.

Connectivity and Bandwidth

The NVL576 rack configuration forms the backbone of Rubin Ultra’s connectivity strategy. This architecture links together 576 Rubin GPUs, creating a unified computing fabric with exceptional communication speeds.

NVLink technology provides the high-speed interconnect between GPU units, allowing for near-seamless data sharing across the entire rack. This minimizes latency when models span multiple GPUs and enables more efficient scaling of workloads.

Each Kyber rack draws up to 600,000 watts (600kW) of power, demonstrating the significant energy requirements of this performance tier. Despite the high power draw, NVIDIA’s figures imply more computation per watt than previous generations: performance rises about 14x while rack power rises roughly 5x.

The entire system relies on optimized bandwidth pathways between memory, processing units, and storage to maintain performance as workloads scale across hundreds of GPUs in production environments.

Use Cases in Data Centers

Rubin Ultra’s NVL576 racks are designed to transform data center operations with groundbreaking AI computing power and efficiency improvements. These systems address the growing demands for more powerful AI infrastructure in modern data centers.

Enhancing AI Computing

The Rubin Ultra NVL576 brings remarkable AI computing capabilities to data centers. With 15 exaflops of FP4 inference and 5 exaflops of FP8 training power, it delivers a 14x performance improvement over previous generations. This massive leap enables data centers to handle increasingly complex AI models.

Each Rubin Ultra GPU contains four reticle-sized dies, and a rack links hundreds of them into a single computing cluster. This configuration allows data centers to:

- Run larger language models with hundreds of billions to trillions of parameters

- Process real-time inference tasks at unprecedented speeds

- Support multiple concurrent AI workloads without performance degradation

Organizations working with generative AI will particularly benefit from these capabilities, as they can develop and deploy more sophisticated models without the traditional compute limitations.

Improving Data Center Efficiency

Despite its tremendous power, the Rubin Ultra addresses critical efficiency challenges facing modern data centers. The 600kW Kyber racks represent a new approach to power management in high-performance computing environments.

The system’s architecture focuses on:

- Power efficiency: Better performance-per-watt metrics than previous generations

- Space optimization: More computing power in the same physical footprint

- Heat management: Advanced cooling solutions for the 600kW power draw

This efficiency-focused design helps data centers manage the skyrocketing costs associated with generative AI. By consolidating massive computing power into optimized racks, organizations can reduce their overall infrastructure footprint.
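To give a sense of what cooling a 600kW rack involves, the sketch below applies the basic heat-transfer relation P = ṁ · c_p · ΔT to estimate the coolant flow needed. It is a first-order estimate only: it assumes plain water as the coolant, that all 600kW ends up in the liquid loop, and a 10 K temperature rise across the rack. None of these values come from NVIDIA.

```python
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for liquid water


def coolant_mass_flow_kg_per_s(
    heat_watts: float,
    delta_t_kelvin: float,
    specific_heat: float = WATER_SPECIFIC_HEAT_J_PER_KG_K,
) -> float:
    """Mass flow needed to carry heat_watts away with a given temperature rise."""
    if delta_t_kelvin <= 0:
        raise ValueError("delta_t_kelvin must be positive")
    return heat_watts / (specific_heat * delta_t_kelvin)


if __name__ == "__main__":
    # 600kW rack from the article; the 10 K rise is an assumed design point.
    flow = coolant_mass_flow_kg_per_s(heat_watts=600_000, delta_t_kelvin=10.0)
    # Water is close to 1 kg per litre, so kg/s is roughly litres per second.
    print(f"{flow:.1f} kg/s, roughly {flow * 60:.0f} litres per minute")
```

Under these assumptions a single rack needs on the order of 14 kg of water per second, and halving the allowed temperature rise doubles the flow. That gives a feel for why facilities need plumbing upgrades as well as electrical ones.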

The planned 2027 release gives data centers time to prepare their facilities for these next-generation systems, including power delivery upgrades and cooling infrastructure modifications.

Integration and Compatibility

The Rubin Ultra NVL576 system brings unprecedented connectivity options and integration capabilities with existing hardware ecosystems. NVIDIA has designed this platform with versatility in mind to support various computing environments.

Working with AMD and Grace CPUs

The Rubin Ultra NVL576 platform offers flexible integration with both AMD processors and NVIDIA’s own Grace CPUs. This dual-compatibility approach gives data centers more options when building their AI infrastructure.

NVIDIA’s NVLink7 interface plays a key role in this integration, offering six times faster communication than previous versions. This speed boost is crucial when connecting to high-performance CPUs like AMD’s latest server processors or NVIDIA’s Grace chips.

For organizations with existing AMD infrastructure, the transition path is streamlined. The NVL576 racks can connect to AMD systems without requiring complete infrastructure overhauls.

Grace is the CPU NVIDIA pairs with its Blackwell GPUs in what it calls a “superchip” configuration. In the Rubin generation that role passes to Vera, and the same tight CPU-GPU coordination benefits AI workloads that depend on it.

Support for Next-Generation AI Models

The Rubin Ultra GPUs are specifically engineered to handle demanding next-generation AI models with billions or even trillions of parameters. The system delivers 15 exaflops of FP4 inference performance and 5 exaflops of FP8 training capability.

These performance metrics represent a 14x improvement over previous generations. This leap enables the development and deployment of more complex AI models that were previously impractical due to hardware limitations.

Key AI model types supported include:

- Large language models (LLMs)

- Vision transformers

- Multimodal AI systems

- Scientific simulations

The platform’s 600kW power capacity ensures sufficient resources for these computation-heavy workloads. NVIDIA has also improved memory capacity and bandwidth to manage the massive datasets these models require.

Market Impact and Future Projections

NVIDIA’s announcement of the Rubin Ultra NVL576 represents a significant leap in GPU technology that will reshape the AI computing landscape. The 600,000-watt Kyber racks signal a new era of power requirements and computing capabilities.

Implications for GPU Technology

The Rubin Ultra NVL576 will connect 576 GPUs using NVIDIA’s seventh-generation NVLink technology. This massive scaling represents a fundamental shift in how AI systems are built and deployed. The 600kW power requirement per rack is nearly 5 times higher than current standards, forcing data centers to completely rethink their power delivery systems.

Companies investing in this technology will need to balance the enormous computing benefits against significant infrastructure costs. The power density challenges may accelerate innovation in cooling technologies and energy efficiency improvements.

These systems will likely support training models that are 10-100x larger than today’s most advanced AI systems. Bandwidth improvements will also be substantial, allowing faster data movement between GPUs.

Forecasts for 2026-2027

Based on NVIDIA’s roadmap, the Rubin Ultra NVL576 is expected to launch in the second half of 2027, following the Vera Rubin NVL144 systems in 2026. This gives data centers approximately two years to prepare their facilities for these power-hungry machines.

Industry analysts predict that only the largest cloud providers and research institutions will initially adopt these systems due to their complexity and cost. However, the competitive advantage they provide for training cutting-edge AI models will likely drive broader adoption.

Market forecasts suggest NVIDIA’s data center revenue could double by 2027, assuming successful deployment of both Vera Rubin and Rubin Ultra systems. The integration with Vera CPUs points to NVIDIA’s strategy of providing complete computing solutions.

Enterprise customers should plan their AI roadmaps with these technology milestones in mind, particularly for applications requiring massive computational resources.

Eco-Conscious Computing

The Rubin Ultra NVL576 system presents significant challenges and opportunities for sustainable computing. NVIDIA has implemented several strategies to balance unprecedented computing power with environmental responsibility.

Energy Efficiency and Power Consumption

The Rubin Ultra NVL576 rack is expected to draw a massive 600kW of power, making it one of the most power-hungry computing systems ever created. This enormous power requirement has prompted NVIDIA to develop the specialized “Kyber Rack” with advanced liquid cooling technology to manage heat efficiently.

Despite its high power draw, NVIDIA has focused on performance-per-watt improvements. The system delivers 15 exaflops of FP4 inference and 5 exaflops of FP8 training—approximately 14 times more powerful than previous generations while not scaling power requirements proportionally.
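The performance-per-watt claim can be made concrete with simple ratios. The sketch below combines the 14x performance figure with the roughly 5x rack power increase cited elsewhere in this article; both are approximate, so the result is an order-of-magnitude guide, not a measured efficiency number.

```python
def perf_per_watt_gain(performance_ratio: float, power_ratio: float) -> float:
    """Relative change in performance per watt between two generations."""
    if power_ratio <= 0:
        raise ValueError("power_ratio must be positive")
    return performance_ratio / power_ratio


def implied_baseline_kw(new_rack_kw: float, power_ratio: float) -> float:
    """Rack power of the older system implied by the quoted ratio."""
    if power_ratio <= 0:
        raise ValueError("power_ratio must be positive")
    return new_rack_kw / power_ratio


if __name__ == "__main__":
    # 14x performance and ~5x power are the article's approximate figures.
    print(f"perf/W gain: {perf_per_watt_gain(14.0, 5.0):.1f}x")
    print(f"implied baseline rack: {implied_baseline_kw(600.0, 5.0):.0f} kW")
```

On these figures performance per watt improves by a little under 3x, so the efficiency gain is real but much smaller than the headline 14x performance jump.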

The liquid cooling solution isn’t just about managing heat—it’s a critical eco-conscious design choice. By using liquid instead of traditional air cooling, the system can operate more efficiently, reducing the overall energy needed for cooling infrastructure.

NVIDIA is also working with data center partners to explore renewable energy options that could offset the carbon footprint of these high-performance systems. Some facilities are investigating on-site solar and wind generation specifically sized to support these power-dense racks.

Frequently Asked Questions

NVIDIA’s Rubin Ultra represents a major leap in AI computing power with its NVL576 rack system. These new GPUs offer unprecedented performance and will shape the future of AI infrastructure.

What are the specifications of the Rubin Ultra chip introduced by NVIDIA?

The Rubin Ultra chip features two Rubin GPUs connected within a single chip package. This design delivers 100 petaflops of FP4 performance, which is twice the processing power of the standard Rubin GPU.

The chip will use HBM4 memory, providing faster data access and processing capabilities. NVIDIA plans to release Rubin Ultra in the second half of 2027.

NVIDIA’s NVL576 rack configuration will connect 576 Rubin GPUs together, creating a massive computational system requiring up to 600,000 watts of power.

How does the Rubin Ultra GPU performance compare to previous NVIDIA generations?

Rubin Ultra represents a significant performance jump over previous NVIDIA architectures. With 100 petaflops of FP4 performance, it doubles the capabilities of the standard Rubin GPU.

This advancement follows NVIDIA’s pattern of generational improvements, building upon the Blackwell architecture. Each new generation has expanded AI computing capabilities substantially.

The shift to HBM4 memory and the dual-GPU design in a single package helps Rubin Ultra achieve these performance gains.

What advancements has NVIDIA made in NVENC technology with the Rubin Ultra?

NVIDIA has improved their NVENC (NVIDIA Encoder) technology with the Rubin Ultra, offering faster video encoding and decoding. These enhancements support increasingly demanding AI video processing workloads.

The updated NVENC capabilities work alongside the increased computational power to handle complex media applications. This makes Rubin Ultra particularly well-suited for video-intensive AI tasks.

These improvements build on NVIDIA’s long history of optimizing hardware for media processing.

Which applications are best optimized for the NVIDIA Rubin Ultra architecture?

Large language models and generative AI applications will benefit most from Rubin Ultra’s massive processing power. These applications require the extensive computational resources that Rubin Ultra provides.

Scientific computing and data analytics programs will also see significant performance gains. The architecture supports both traditional high-performance computing and newer AI workloads.

Video processing, rendering, and simulation applications will leverage Rubin Ultra’s improved NVENC capabilities and raw processing power.

In what ways does the NVIDIA Rubin Ultra enhance AI and deep learning computations?

Rubin Ultra’s 100 petaflops of FP4 performance per package dramatically speeds up inference, while its FP8 throughput shortens AI model training times. This allows researchers to work with larger, more complex models than previously possible.

The architecture’s memory improvements address one of the key bottlenecks in AI computing. Better memory bandwidth and capacity enable handling larger datasets more efficiently.

NVIDIA’s software ecosystem is optimized to take full advantage of Rubin Ultra’s capabilities, ensuring AI frameworks can utilize the hardware effectively.

Can you detail the compatibility of NVIDIA Rubin Ultra with existing DGX system infrastructure?

The Rubin Ultra will use the new NVL576 rack system, representing a significant infrastructure change. Organizations may need to upgrade their power and cooling systems to accommodate the 600,000-watt requirements.

NVIDIA typically ensures software compatibility across generations while encouraging hardware upgrades. This approach allows for easier transitions when adopting new GPU technologies.

The new Kyber racks designed for Rubin Ultra suggest specialized infrastructure requirements beyond current DGX systems, though NVIDIA usually provides migration paths for existing customers.