At Computex 2025, NVIDIA introduced two major technologies: the Blackwell B300 GPU and the NVLink Fusion platform. These are more than incremental upgrades. Together they mark a pivot in NVIDIA’s long-term AI strategy, aimed at changing how AI data centers are built and scaled.

B300 GPU: The Heart of Agentic AI

The newly announced B300 GPU, built on NVIDIA’s Blackwell architecture, is engineered for next-generation AI workloads, including large language models, multimodal inference, robotics, and physical simulations. It is the direct successor to the B200, and NVIDIA’s headline figures for B300-based systems are:

| Feature | B300 vs Previous Generation |
|---|---|
| Inference Performance on LLMs | Up to 11x faster |
| Compute Throughput | 7x more compute |
| Memory Capacity | 4x larger memory pool |
| Performance in Rack Configurations | 1.5x better than GB200 NVL72 |

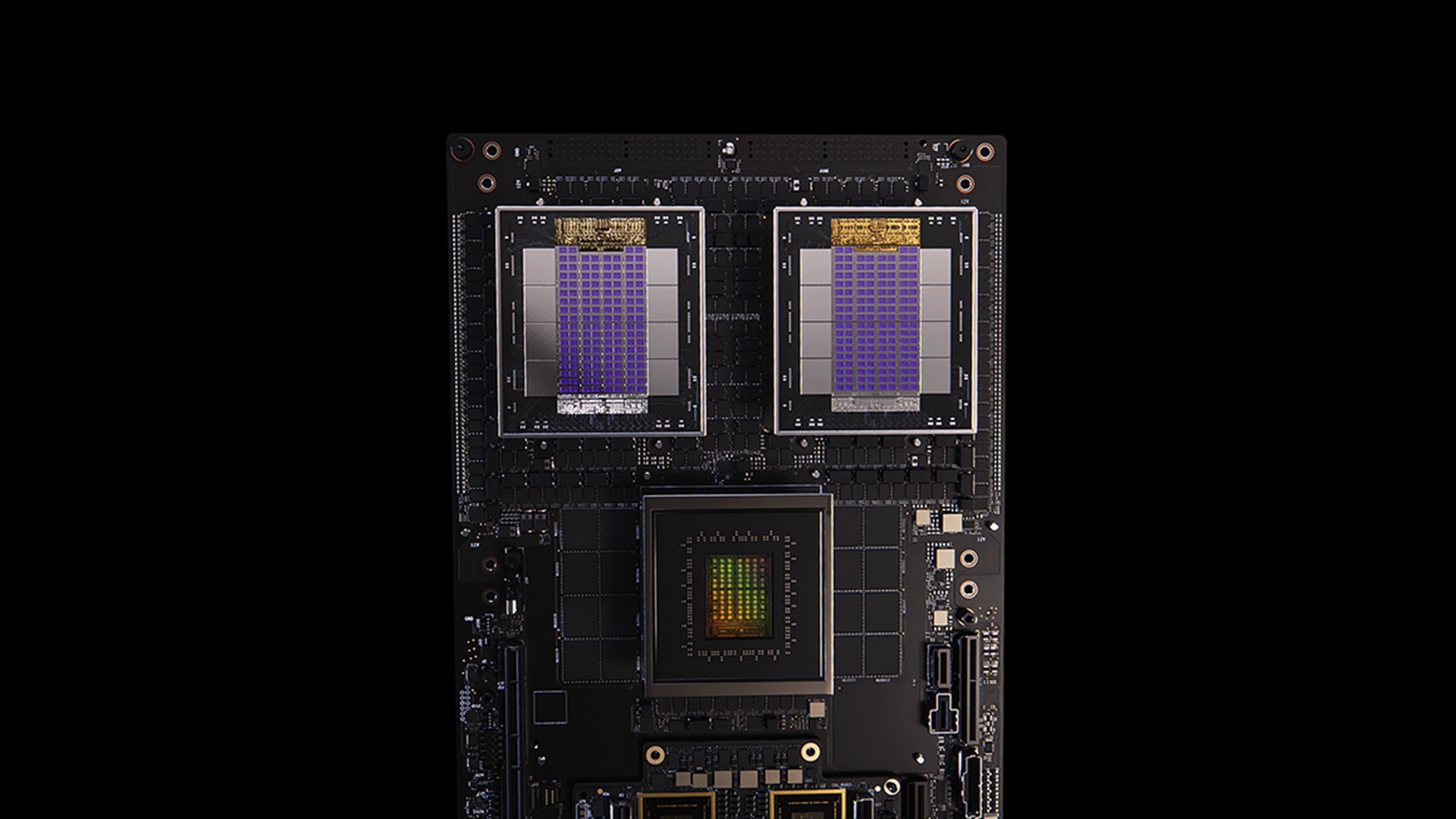

The B300 ships in two server platforms, the HGX B300 NVL16 and the GB300 NVL72, both built around high-bandwidth NVLink and NVSwitch. The GB300 NVL72 combines 72 Blackwell Ultra GPUs and 36 Grace CPUs in a single liquid-cooled rack. These systems are purpose-built for agentic AI, a term NVIDIA uses to describe AI systems that can perceive, reason, and act autonomously in real-world environments.

This positions the B300 not just as a performance leap, but as the foundation for future AI models that go beyond text and images into robotics, digital twins, and self-adaptive systems.

NVLink Fusion: Custom AI Platforms, Now with NVIDIA Inside

Perhaps even more disruptive is NVLink Fusion, a new initiative allowing third-party silicon vendors and system builders to integrate their CPUs and accelerators directly with NVIDIA’s GPU ecosystem.

Traditionally, NVLink has been a proprietary interconnect limited to NVIDIA components. With NVLink Fusion, NVIDIA is tearing down those walls.

Key features of NVLink Fusion include:

- Up to 14x the bandwidth of PCIe Gen5, easing data bottlenecks between accelerators.

- Support for custom server architectures, letting companies build semi-custom data centers with unmatched performance and flexibility.

- Compatibility with CPUs and accelerators from major partners, including Qualcomm, Fujitsu, MediaTek, Marvell, Alchip, and Astera Labs.

This strategic move opens the door to a wider array of AI workloads and cloud applications, especially for providers who want performance at scale without being locked into a single vendor’s architecture.
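The 14x bandwidth figure in the list above can be sanity-checked with simple arithmetic. The sketch below is illustrative only: the two bandwidth constants are assumptions (commonly quoted peak bidirectional figures, not numbers taken from this article), and the 100 GB payload is hypothetical. Swap in the values for your own hardware.

```python
# Back-of-the-envelope check of the "14x PCIe Gen5" claim.
# Both bandwidth constants are ASSUMPTIONS (peak bidirectional figures that
# do not appear in this article); replace them with your hardware's numbers.
NVLINK_GB_PER_S = 1800.0    # assumed: fifth-generation NVLink, per GPU
PCIE5_X16_GB_PER_S = 128.0  # assumed: PCIe Gen5 x16 link


def bandwidth_ratio(fast_gb_per_s: float, slow_gb_per_s: float) -> float:
    """Return how many times more bandwidth the first link has than the second."""
    if slow_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return fast_gb_per_s / slow_gb_per_s


def transfer_seconds(payload_gb: float, link_gb_per_s: float) -> float:
    """Ideal transfer time at peak bandwidth, ignoring latency and protocol overhead."""
    if link_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / link_gb_per_s


ratio = bandwidth_ratio(NVLINK_GB_PER_S, PCIE5_X16_GB_PER_S)
payload_gb = 100.0  # hypothetical payload, e.g. a large block of model weights

print(f"NVLink / PCIe Gen5 bandwidth ratio: {ratio:.1f}x")
print(f"PCIe Gen5 x16: {transfer_seconds(payload_gb, PCIE5_X16_GB_PER_S):.3f} s")
print(f"NVLink:        {transfer_seconds(payload_gb, NVLINK_GB_PER_S):.3f} s")
```

These are peak figures, so real transfers will be slower on both links; the point is only that the ratio of the assumed peaks lands close to the quoted 14x.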

Strategic Shift: From Closed Ecosystem to Modular AI

With NVLink Fusion, NVIDIA is acknowledging a fundamental truth of modern AI infrastructure: customization is king. Hyperscalers, enterprise customers, and even government agencies increasingly demand tailored solutions for their AI deployments.

By enabling a broader hardware ecosystem, NVIDIA is:

- Expanding its market reach without losing control of its GPU stack.

- Building an ecosystem moat, making it harder for competitors like AMD, Intel, or even custom AI chip startups to displace them.

- Future-proofing its relevance, as the AI space shifts toward disaggregated and modular architectures.

In short, NVIDIA isn’t just building faster GPUs—it’s positioning itself as the foundation of the entire AI infrastructure layer, regardless of which CPU or accelerator you choose.

What This Means Going Forward

With B300 and NVLink Fusion, NVIDIA is delivering both raw performance and architectural freedom. The announcements underscore the company’s mission to stay ahead of the curve as AI evolves beyond LLMs into more immersive, autonomous, and physical applications.

Computex 2025 wasn’t just a product launch—it was a declaration: NVIDIA intends to lead the next phase of the AI revolution, not just with silicon, but with a scalable, customizable ecosystem for every player in the game.

Key Takeaways

- NVLink Fusion allows customers to use non-Nvidia CPUs while keeping compatibility with Nvidia’s AI ecosystem.

- Major tech partners including MediaTek and Marvell are already offering design services through the NVLink Fusion program.

- These announcements strengthen Nvidia’s position as a central player in AI hardware development while offering more customization options.

Nvidia’s B300 and NVLink Fusion Announcements at Computex

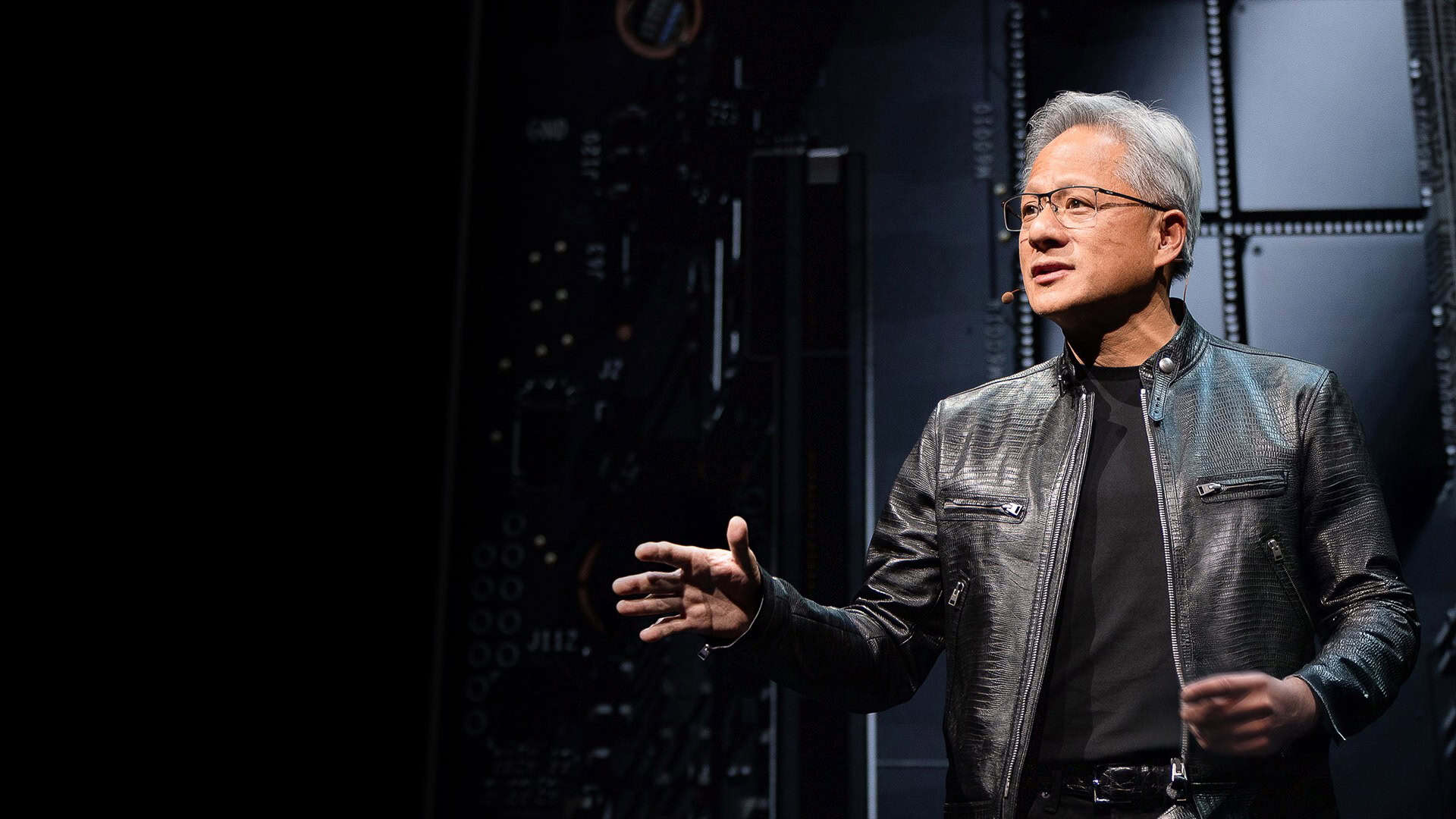

Nvidia made significant hardware and technology announcements at Computex 2025, showcasing its latest AI innovations as it works to maintain market leadership. CEO Jensen Huang presented these advancements during his keynote at the Taipei Music Center.

Overview of B300

The B300, part of Nvidia’s Blackwell architecture, represents a major leap in AI accelerator technology. The chip is designed specifically for demanding AI workloads and large language model training.

The B300 builds on previous generations with significantly improved performance for both training and inference tasks. The architecture handles complex AI models better while delivering more performance per watt than previous generations.

Companies working with large datasets and demanding AI applications will find the B300 particularly valuable. Its improved efficiency helps reduce the massive energy costs typically associated with AI infrastructure.

The chip works seamlessly with Nvidia’s software stack, including Nvidia AI Enterprise, making it easier for organizations to deploy and manage AI workloads at scale.

NVLink Fusion Technology Explained

NVLink Fusion opens Nvidia’s AI platform to partners for building specialized AI infrastructures. This program allows customers to use Nvidia’s key NVLink technology for their own custom rack-scale designs, even with non-Nvidia components.

NVLink itself is a high-speed interconnect technology that enables fast communication between chips. The technology is crucial for:

- Connecting GPUs to each other

- Linking GPUs to CPUs (like Nvidia’s 72-core Arm-based Grace processor)

- Creating efficient, powerful computing clusters

By opening this technology to others, Nvidia enables companies to create semi-custom AI systems that maintain compatibility with Nvidia’s ecosystem while incorporating specialized hardware for specific needs.

Significance for AI Infrastructure

The B300 and NVLink Fusion represent a strategic shift in how AI systems can be built and deployed. Together, they address two critical challenges: raw computing power and system flexibility.

For major cloud providers and AI research labs, these technologies enable building more powerful and efficient systems. The open approach with NVLink Fusion means companies can integrate specialized ASICs or other processors while maintaining compatibility with Nvidia’s software.

This approach helps organizations avoid vendor lock-in while still benefiting from Nvidia’s extensive AI software ecosystem.

The economic impact is substantial, potentially reducing costs for developing custom AI infrastructure. Companies can now blend the best technologies from different vendors without sacrificing system performance or software compatibility.

These announcements firmly position Nvidia to maintain its leadership in AI hardware while adapting to a market that increasingly demands customization and flexibility.

Frequently Asked Questions

NVIDIA’s announcements at Computex introduced significant innovations with the B300 series data center GPUs and NVLink Fusion technology, reshaping AI infrastructure and capabilities.

What are the key features and improvements of the NVIDIA B300 series GPUs?

The B300 series represents NVIDIA’s latest advancement in AI-focused GPU technology. These data center GPUs feature improved computational cores designed specifically for machine learning workloads.

The new series includes significantly expanded memory capacity and bandwidth, enabling more complex AI models to run efficiently. Memory improvements allow developers to work with larger datasets without performance bottlenecks.

Power efficiency has also been enhanced, with the B300 series offering better performance-per-watt metrics compared to previous generations. This helps data centers reduce operational costs while increasing computing power.

How does NVLink Fusion technology enhance the performance and capabilities of NVIDIA products?

NVLink Fusion allows industries to build semi-custom AI infrastructure combining NVIDIA GPUs with non-NVIDIA CPUs. This flexibility helps companies create specialized systems tailored to their specific needs.

The technology enables partners like Fujitsu and Qualcomm to couple their custom CPUs with NVIDIA GPUs in rack-scale designs. This integration creates more efficient pathways for data movement between components.

NVLink Fusion serves as a bridge technology that maintains NVIDIA’s central role in AI development while allowing greater ecosystem diversity. It represents a strategic move to keep NVIDIA’s technology at the heart of custom AI solutions.

What are the potential applications and use cases for the B300 series and NVLink Fusion?

Large-scale AI training facilities will benefit from the B300’s improved performance for developing more complex machine learning models. These “AI factories” can process enormous datasets more efficiently.

NVLink Fusion opens possibilities for custom AI solutions in specialized fields like healthcare, automotive, and scientific research. Organizations can build purpose-specific systems with optimal CPU-GPU combinations.

Cloud service providers can use these technologies to offer more diverse and powerful AI computing options to their customers. This flexibility allows for more tailored solutions based on specific workload requirements.

Can you compare the performance specifications of the B300 series against previous NVIDIA GPUs?

The B300 series delivers substantial performance gains over previous generations in both AI training and inference. NVIDIA’s stated figures include up to 11x faster LLM inference, 7x more compute, and 4x more memory, with the GB300 NVL72 rack rated at 1.5x the performance of the GB200 NVL72.

Memory bandwidth has been increased, addressing a common bottleneck in AI workloads. This enhancement allows for faster data access during complex calculations.

Energy efficiency has also improved, with the B300 providing more computation per watt than earlier models. This advancement is crucial for data centers looking to maximize performance while minimizing power consumption.

What is the anticipated impact of the B300 series and NVLink Fusion on the AI hardware market?

The introduction of these technologies will likely strengthen NVIDIA’s position in the AI hardware market. The company continues to lead innovation in GPU computing for machine learning applications.

Competitors may face challenges as NVIDIA extends its ecosystem while simultaneously opening it to custom integrations. This strategy combines technological advantage with partnership flexibility.

Market adoption is expected to be strong among major tech companies building or expanding AI infrastructure. Early adopters will gain computational advantages for their AI development initiatives.

How will the introduction of the B300 series and NVLink Fusion affect existing NVIDIA technologies?

Current NVIDIA products will remain viable but may see accelerated replacement cycles as companies seek the advantages of newer technology. The transition will likely be gradual based on specific needs and budgets.

Software compatibility across generations should remain strong, with NVIDIA’s CUDA platform continuing to support both new and existing hardware. This ensures continued value for current investments.

The expansion of NVLink capabilities may lead to more diverse system architectures incorporating NVIDIA GPUs. This could create new opportunities for system integrators and hardware manufacturers working within the NVIDIA ecosystem.